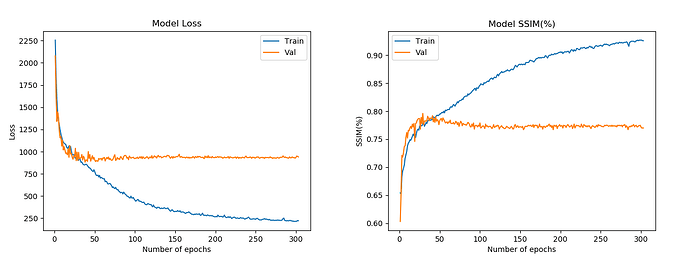

Usually when a model overfits, validation loss goes up while training loss keeps going down from the point of overfitting. In my case, training loss still goes down but validation loss stays at the same level. Accordingly, validation accuracy also stays at the same level while training accuracy goes up. I am trying to reconstruct a 2D image from a 3D volume using a UNet. I see the same behavior when I try to reconstruct a 3D volume from a 2D image, only with higher loss and lower accuracy. Can someone explain this curve, and why the validation loss stops going down from the point of overfitting?

It might depend on how the model performance and loss are calculated. For example, if your model outputs the same class for all pixels during validation without significantly changing its confidence, both the loss and the accuracy might just get stuck at a constant value.
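To illustrate the idea, here is a rough NumPy sketch, not your actual setup. It assumes a binary per-pixel target with roughly 10% foreground, pixel accuracy thresholded at 0.5, and binary cross-entropy as the loss; the helper names and the three "epoch" probabilities are made up for the example. If the validation output is (almost) the same background prediction every epoch, accuracy does not move at all and the loss barely moves:

```python
import numpy as np

def pixel_accuracy(probs, target):
    """Fraction of pixels whose thresholded prediction matches the target."""
    pred = (probs >= 0.5).astype(target.dtype)
    return float((pred == target).mean())

def binary_cross_entropy(probs, target, eps=1e-7):
    """Mean per-pixel binary cross-entropy."""
    probs = np.clip(probs, eps, 1.0 - eps)
    return float(-(target * np.log(probs) + (1 - target) * np.log(1 - probs)).mean())

# Assumed toy validation mask: about 10% foreground pixels.
rng = np.random.default_rng(0)
target = (rng.random((64, 64)) < 0.1).astype(np.int64)

# Hypothetical validation outputs at three epochs: the model predicts the same
# foreground probability for every pixel, and only its confidence drifts slightly.
constant_probs = [0.12, 0.10, 0.11]

accs, losses = [], []
for p in constant_probs:
    probs = np.full(target.shape, p)
    accs.append(pixel_accuracy(probs, target))
    losses.append(binary_cross_entropy(probs, target))

for p, acc, loss in zip(constant_probs, accs, losses):
    print(f"p={p:.2f}  accuracy={acc:.4f}  loss={loss:.4f}")
```

So it may be worth looking at a few actual validation predictions to check whether they have collapsed to something nearly constant, and checking how your accuracy is defined for a reconstruction task.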