I’m trying to convert a pre-trained PyTorch model (Hopenet) to MXNet.

Hopenet is a head pose estimation deep learning model based on ResNet-50. The pretrained model and the Hopenet implementation can be found here: GitHub - natanielruiz/deep-head-pose: Deep Learning Head Pose Estimation using PyTorch.

To do the conversion, I wrote the following Python code:

```python
import os

import torch
import torch.onnx
import torchvision
import mxnet as mx
from mxnet.contrib import onnx as onnx_mxnet

import hopenet  # hopenet.py from the deep-head-pose repository

param_snapshot = r"C:\Users\cesar.gouveia\Projects\deep-head-pose\hopenet_alpha2.pkl"
param_save_onnx_complete_model_file_path = r"C:\Users\cesar.gouveia\Projects\deep-head-pose\deep_head_pose.onnx"
# Placeholder path: this variable was used below but never defined in the snippet.
param_save_torch_complete_model_file_path = r"C:\Users\cesar.gouveia\Projects\deep-head-pose\hopenet_complete.pth"

# ResNet50 structure (66 = number of angle bins)
model = hopenet.Hopenet(torchvision.models.resnet.Bottleneck, [3, 4, 6, 3], 66)

print('Loading snapshot.')
# Load snapshot
saved_state_dict = torch.load(param_snapshot, map_location="cpu")
model.load_state_dict(saved_state_dict)
model.eval()  # equivalent to model.train(False)

torch.save(model, param_save_torch_complete_model_file_path)

# Export to ONNX using a dummy 1x3x224x224 input
input_shape = (3, 224, 224)
dummy_input = torch.randn(1, *input_shape)
torch.onnx.export(model, dummy_input, param_save_onnx_complete_model_file_path)

# Import the ONNX model into MXNet and save it as a checkpoint
sym, arg, aux = onnx_mxnet.import_model(param_save_onnx_complete_model_file_path)
mx.model.save_checkpoint(os.path.join(r"C:\Workspace", 'model_mxnet'), 0, sym, arg, aux)
```

Basically, the code loads a pre-trained PyTorch model, exports it to ONNX, then imports the ONNX model into MXNet and saves it as an MXNet checkpoint. The code is based on this tutorial on converting PyTorch to MXNet (PyTorch to ONNX to MXNet Tutorial - Deep Learning AMI).

The error occurs when MXNet tries to import the ONNX model:

```
C:\Users\cesar.gouveia\Anaconda3\envs\hopenet\lib\site-packages\torch\nn\functional.py:718: UserWarning: Named tensors and all their associated APIs are an experimental feature and subject to change. Please do not use them for anything important until they are released as stable. (Triggered internally at ..\c10/core/TensorImpl.h:1156.)
  return torch.max_pool2d(input, kernel_size, stride, padding, dilation, ceil_mode)
Traceback (most recent call last):
  File "C:/Users/cesar.gouveia/Projects/deep-head-pose/test_on_video.py", line 53, in <module>
    sym, arg, aux = onnx_mxnet.import_model(param_save_onnx_complete_model_file_path)
  File "C:\Users\cesar.gouveia\Anaconda3\envs\hopenet\lib\site-packages\mxnet\contrib\onnx\onnx2mx\import_model.py", line 59, in import_model
    sym, arg_params, aux_params = graph.from_onnx(model_proto.graph)
  File "C:\Users\cesar.gouveia\Anaconda3\envs\hopenet\lib\site-packages\mxnet\contrib\onnx\onnx2mx\import_onnx.py", line 114, in from_onnx
    inputs = [self._nodes[i] for i in node.input]
  File "C:\Users\cesar.gouveia\Anaconda3\envs\hopenet\lib\site-packages\mxnet\contrib\onnx\onnx2mx\import_onnx.py", line 114, in <listcomp>
    inputs = [self._nodes[i] for i in node.input]
KeyError: '513'
```

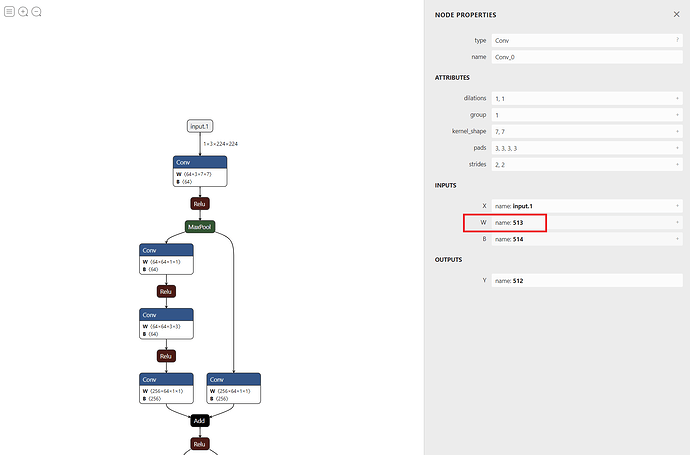

I searched for other people with the same problem, without success. I also used Netron to see which node was failing, and it appears to be related to the first convolution, possibly its weights.
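The traceback shows the importer looking up every node input by name in `self._nodes`. Assuming (from my reading of `import_onnx.py`, not verified against every MXNet version) that this dictionary is filled only from `graph.input` and from the outputs of nodes already converted, an initializer that is not also listed in `graph.input` would raise exactly this `KeyError`. The sketch below is a hypothetical helper, `find_unresolved_inputs`, that replays that lookup over any object shaped like `onnx.GraphProto` and reports which names would fail:

```python
def find_unresolved_inputs(graph):
    """Replay the name lookup assumed for MXNet's ONNX importer.

    `graph` is anything shaped like onnx.GraphProto: it needs `.input`,
    `.initializer` and `.node` sequences. Returns a list of
    (node_label, missing_input_name, is_initializer) tuples.
    """
    # Assumption: before converting any node, the importer only knows
    # the declared graph inputs, not the initializers.
    known = {value_info.name for value_info in graph.input}
    initializer_names = {tensor.name for tensor in graph.initializer}

    unresolved = []
    for node in graph.node:
        label = node.name or node.op_type  # ONNX node names may be empty
        for name in node.input:
            if name not in known:
                unresolved.append((label, name, name in initializer_names))
        # Once a node is converted, its outputs become resolvable names.
        known.update(node.output)
    return unresolved
```

On the real file this would be called as `find_unresolved_inputs(onnx.load(param_save_onnx_complete_model_file_path).graph)`. If `'513'` comes back flagged as an initializer, one option worth trying (untested here) is re-exporting with `torch.onnx.export(..., keep_initializers_as_inputs=True)`, a documented `torch.onnx.export` parameter that also adds the initializers to the graph inputs.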

Has anyone run into this type of error? I’m probably doing something wrong, because it seems very unlikely that a ResNet-50 can’t be converted from PyTorch to MXNet.

Thanks, César.