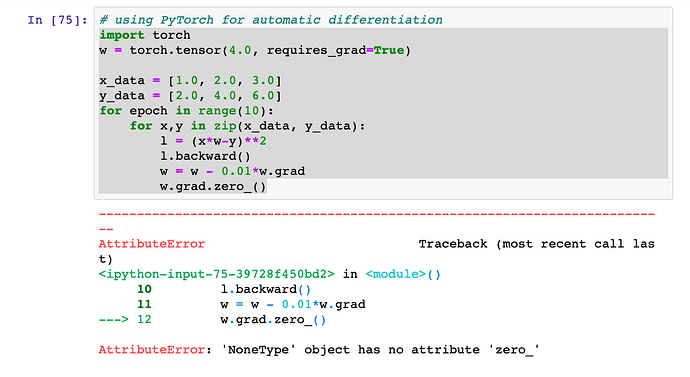

I am having trouble understanding how .backward() and .grad work in PyTorch. In particular, w.grad becomes None in the following code. Could you help clarify what’s going on here? I am using PyTorch version 1.0.0. Thanks.

Not sure what’s going on there, but your issue is probably fixed if you change x_data to

x_data = torch.tensor([1., 2., 3.])

and the same for y_data.

l.backward()
with torch.no_grad():
    w = w - 0.01*w.grad
w.requires_grad = True
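For anyone wondering why the snippet above helps: autograd only fills in .grad for leaf tensors. If `w = w - 0.01*w.grad` runs outside `torch.no_grad()`, the name `w` is rebound to a new non-leaf tensor, so its .grad stays None after the next backward(). Inside `torch.no_grad()` the new tensor is not tracked at all, which is why requires_grad has to be switched back on afterwards. Here is a minimal sketch of that behaviour, plus an in-place variant that keeps the same leaf tensor. The original code is only in the screenshot, so `y_data`'s values, `loss_fn`, the learning rate, and the step count are my assumptions, not the poster's code.

```python
import torch

# Hypothetical data; the real values are only in the screenshot.
x_data = torch.tensor([1., 2., 3.])
y_data = torch.tensor([2., 4., 6.])

def loss_fn(w):
    # Assumed model: mean squared error of y = x * w
    return ((x_data * w - y_data) ** 2).mean()

# 1) Rebinding outside no_grad produces a non-leaf tensor.
w = torch.tensor([1.0], requires_grad=True)
loss_fn(w).backward()
w_rebound = w - 0.01 * w.grad
rebound_is_leaf = w_rebound.is_leaf  # non-leaf: autograd will not populate its .grad

# 2) Updating in place inside no_grad keeps w as the same leaf tensor.
w = torch.tensor([1.0], requires_grad=True)
for _ in range(100):
    l = loss_fn(w)
    l.backward()
    with torch.no_grad():
        w -= 0.01 * w.grad  # in-place, so w is not rebound
    w.grad.zero_()          # gradients accumulate, so reset them each step

print(rebound_is_leaf, w.is_leaf, w.requires_grad)
```

With the in-place update there is no need to reset requires_grad, but the gradient has to be zeroed manually because backward() accumulates into w.grad.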