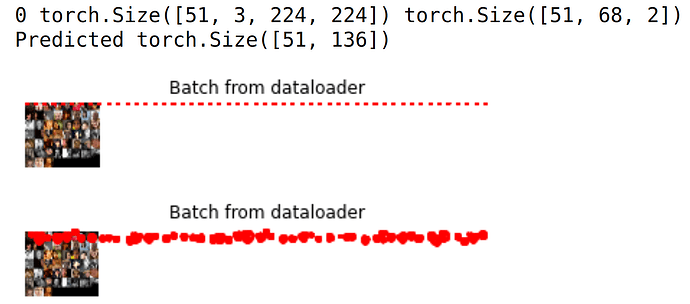

Running the evaluation code below yields very weird results. I understand that I only have 69 images in the `faces` directory, but aren't these results still very weird?

    # Test the model
    model.eval()  # eval mode (batchnorm uses moving mean/variance instead of mini-batch mean/variance)

    with torch.no_grad():
        correct = 0
        total = 0
        # NOTE: this iterates over train_loader, not a separate test loader.
        for i_batch, sample_batched in enumerate(train_loader):
            print(i_batch, sample_batched['image'].size(),
                  sample_batched['landmarks'].size())

            images_batch, landmarks_batch = \
                sample_batched['image'], sample_batched['landmarks']

            # Variable() wrappers are no longer needed; tensors can be used directly.
            images = images_batch.float().to(device)
            labels = landmarks_batch.reshape(-1, 68 * 2).to(device)

            outputs = model(images)
            #_, predicted = torch.max(outputs.data, 1)
            #_, predicted = outputs.data
            print("Predicted", outputs.data.shape)

            # Move everything back to the CPU before plotting.
            outputs = outputs.cpu()
            images = images.cpu()
            labels = labels.cpu()

            # The loop variable was `i` while this check used `i_batch`; both now use `i_batch`.
            if i_batch == 3:
                plt.figure()
                show_landmarks_batch({'image': images, 'landmarks': outputs.data.reshape(-1, 68, 2)})
                plt.axis('off')
                plt.ioff()
                plt.show()
                show_landmarks_batch({'image': images, 'landmarks': labels.reshape(-1, 68, 2)})
                plt.axis('off')
                plt.ioff()
                plt.show()
                break
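Since this is a regression problem, the commented-out `torch.max` accuracy logic does not really apply here, and a per-landmark pixel error might be a more useful number to print during evaluation. A minimal sketch of that idea, assuming `outputs` and `labels` both have shape `(batch, 136)` and use the same pixel coordinate system; `mean_landmark_error` is a hypothetical helper, not something from the original notebook:

```python
import torch


def mean_landmark_error(outputs: torch.Tensor, labels: torch.Tensor) -> float:
    """Average Euclidean distance between predicted and true landmarks."""
    # Reshape flat (batch, 136) vectors into (batch, 68, 2) points.
    pred = outputs.detach().reshape(-1, 68, 2).float()
    true = labels.detach().reshape(-1, 68, 2).float()
    # Distance per landmark, then the mean over all landmarks and images.
    return torch.linalg.norm(pred - true, dim=-1).mean().item()


# Toy check: every predicted point is offset by (3, 4) from its label.
labels = torch.zeros(2, 68 * 2)
outputs = torch.tensor([3.0, 4.0]).repeat(2, 68)
print(mean_landmark_error(outputs, labels))
```

Both tensors need to be on the same device before calling this, for example after the `.cpu()` calls in the loop above.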

    (base) mona@mona:~/research/facial_landmark$ ls faces/*.jpg | wc -l
    69

I should also mention that my test loss is terrible:

    size of train loader is: 1
    type(loss_train_step) is: <class 'torch.Tensor'>
    loss_train_step.dtype is: torch.float32
    Test Steps: 1/1 Loss: 15511.6732
    --------------------------------------------------
    Epoch: 1 Train Loss: 15590.3896 Test Loss: 15511.6732
    --------------------------------------------------
    Minimum Test Loss of 15511.6732 at epoch 1/10
    Model Saved
    size of train loader is: 1
    type(loss_train_step) is: <class 'torch.Tensor'>
    loss_train_step.dtype is: torch.float32
    Test Steps: 1/1 Loss: 16363.6969
    --------------------------------------------------
    Epoch: 2 Train Loss: 15584.9707 Test Loss: 16363.6969
    --------------------------------------------------
    size of train loader is: 1
    type(loss_train_step) is: <class 'torch.Tensor'>
    loss_train_step.dtype is: torch.float32
    Test Steps: 1/1 Loss: 16458.4072
    --------------------------------------------------
    Epoch: 3 Train Loss: 15594.6885 Test Loss: 16458.4072
    --------------------------------------------------
    size of train loader is: 1
    type(loss_train_step) is: <class 'torch.Tensor'>
    loss_train_step.dtype is: torch.float32
    Test Steps: 1/1 Loss: 15063.5689
    --------------------------------------------------
    Epoch: 4 Train Loss: 16293.1094 Test Loss: 15063.5689
    --------------------------------------------------
    Minimum Test Loss of 15063.5689 at epoch 4/10
    Model Saved
    size of train loader is: 1
    type(loss_train_step) is: <class 'torch.Tensor'>
    loss_train_step.dtype is: torch.float32
    Test Steps: 1/1 Loss: 16673.0292
    --------------------------------------------------
    Epoch: 5 Train Loss: 14949.3047 Test Loss: 16673.0292
    --------------------------------------------------
    size of train loader is: 1
    type(loss_train_step) is: <class 'torch.Tensor'>
    loss_train_step.dtype is: torch.float32
    Test Steps: 1/1 Loss: 15699.0407
    --------------------------------------------------
    Epoch: 6 Train Loss: 16076.5234 Test Loss: 15699.0407
    --------------------------------------------------
    size of train loader is: 1
    type(loss_train_step) is: <class 'torch.Tensor'>
    loss_train_step.dtype is: torch.float32
    Test Steps: 1/1 Loss: 15512.4619
    --------------------------------------------------
    Epoch: 7 Train Loss: 15775.4736 Test Loss: 15512.4619
    --------------------------------------------------
    size of train loader is: 1
    type(loss_train_step) is: <class 'torch.Tensor'>
    loss_train_step.dtype is: torch.float32
    Test Steps: 1/1 Loss: 16625.0978
    --------------------------------------------------
    Epoch: 8 Train Loss: 14958.9971 Test Loss: 16625.0978
    --------------------------------------------------
    size of train loader is: 1
    type(loss_train_step) is: <class 'torch.Tensor'>
    loss_train_step.dtype is: torch.float32
    Test Steps: 1/1 Loss: 15330.6030
    --------------------------------------------------
    Epoch: 9 Train Loss: 15395.0596 Test Loss: 15330.6030
    --------------------------------------------------
    size of train loader is: 1
    type(loss_train_step) is: <class 'torch.Tensor'>
    loss_train_step.dtype is: torch.float32
    Test Steps: 1/1 Loss: 15949.3246
    --------------------------------------------------
    Epoch: 10 Train Loss: 15716.1689 Test Loss: 15949.3246
    --------------------------------------------------
    Training Complete
    Total Elapsed Time : 19.73250699043274 s
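To put these loss values in perspective, here is a rough sanity check. It assumes the criterion is mean squared error on raw pixel coordinates (the loss function itself is not shown in this post), in which case the square root of the loss is the RMS error per coordinate in pixels. `rms_pixel_error` is just an illustrative helper name:

```python
import math

# Test losses copied from epochs 1 and 4 of the log above.
test_losses = {1: 15511.6732, 4: 15063.5689}


def rms_pixel_error(mse: float) -> float:
    """Square root of an MSE value; in pixels only if the loss is MSE on pixel coordinates."""
    return math.sqrt(mse)


for epoch, loss in test_losses.items():
    print(f"epoch {epoch}: RMS error ~ {rms_pixel_error(loss):.1f}")
```

Under that assumption, even the best epoch is off by more than 120 pixels per coordinate, and the value does not trend downward over the 10 epochs.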

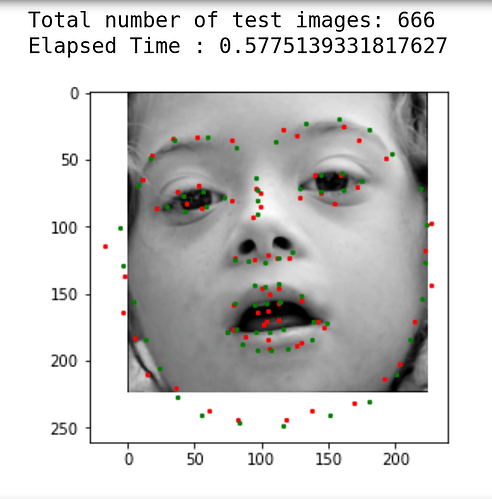

This is how the evaluation results look in the original file here: Google Colab