I’m converting a DataParallel (DP) model into DistributedDataParallel (DDP).

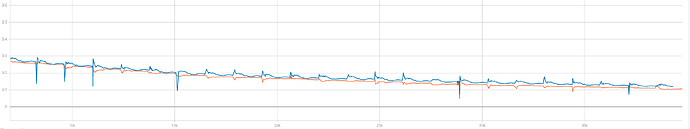

However, DDP degrades the test performance and produces zigzag loss curves during training.

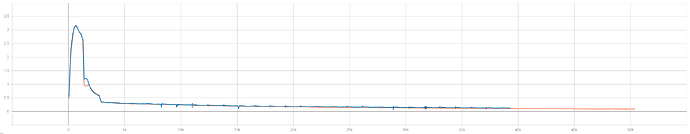

Below are the training loss curves (blue: DDP, orange: DP):

Overall, the two curves behave similarly, but when zoomed in, the DDP curve shows periodic zigzags:

My training configurations are:

- DP:
  - batch size: 48
  - 3 GPUs
- DDP:
  - batch size per GPU: 16 (48 in total across the 3 GPUs)
  - 3 GPUs
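Assuming both runs use a mean-reduced loss, the two configurations should give the same gradient per optimizer step, because DDP averages gradients across processes. With $B_1, B_2, B_3$ denoting the (assumed) disjoint 16-sample shards that together form the same 48 samples DP would see:

$$
\underbrace{\nabla_\theta \frac{1}{48}\sum_{i=1}^{48} \ell_i(\theta)}_{\text{DP: one batch of 48}}
= \underbrace{\frac{1}{3}\sum_{r=1}^{3} \nabla_\theta \frac{1}{16}\sum_{i \in B_r} \ell_i(\theta)}_{\text{DDP: 3 ranks} \times 16}
$$

This identity only holds when each per-sample term $\ell_i$ does not depend on the other samples in its batch, and when both setups actually draw the same samples at each step, which depends on how the data is shuffled and split.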

Random seeds are fixed, and the learning rate and all other configurations are identical.
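If the DDP run splits the data with `torch.utils.data.DistributedSampler` (an assumption; the post does not say), fixed seeds control the data order differently than in DP. That sampler seeds its shuffle with `seed + epoch` and only changes the order when `set_epoch` is called. The sketch below is a hypothetical pure-Python helper, `shard_indices`, that mimics the sampler's sharding scheme (padding, then striding by rank) so the 3 × 16 = 48 split can be inspected without GPUs. It uses Python's `random` rather than `torch.Generator`, so the concrete permutations differ from PyTorch's.

```python
import random


def shard_indices(dataset_len, num_replicas, rank, seed=0, epoch=0, shuffle=True):
    """Hypothetical helper that mimics how torch.utils.data.DistributedSampler
    (drop_last=False) assigns sample indices to one rank.

    Uses Python's `random` instead of torch.Generator, so the concrete
    permutation differs from PyTorch's; only the structure is the same.
    Assumes dataset_len >= num_replicas.
    """
    indices = list(range(dataset_len))
    if shuffle:
        # Seeded with seed + epoch: the order changes between epochs only if
        # the epoch is advanced (DistributedSampler.set_epoch in PyTorch).
        random.Random(seed + epoch).shuffle(indices)
    # Pad with repeated indices so every rank gets the same number of samples.
    num_samples = -(-dataset_len // num_replicas)  # ceiling division
    total_size = num_samples * num_replicas
    indices += indices[: total_size - len(indices)]
    # Rank r takes every num_replicas-th index starting at position r.
    return indices[rank:total_size:num_replicas]


if __name__ == "__main__":
    world_size, per_gpu_batch = 3, 16
    shards = [shard_indices(96, world_size, r, seed=42) for r in range(world_size)]
    # One optimizer step consumes the first 16 indices of every rank.
    first_step = [shard[:per_gpu_batch] for shard in shards]
    print(sum(len(b) for b in first_step))  # 48, same size as the DP batch
```

The total per step matches DP's 48, but which 48 samples are grouped together, and whether the order changes across epochs, depends on the sampler and on calling `set_epoch`. That is one place where the two runs can diverge despite identical seeds.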