First, I'm a deep learning newbie and not a native English speaker, so if I say something strange, please just tell me. I'm using an LSTM to make predictions on a time series. When this problem appeared, I searched for it in many places and found some possible causes and fixes, such as normalizing the data. However, none of these solutions worked at all, so I came here for advice.

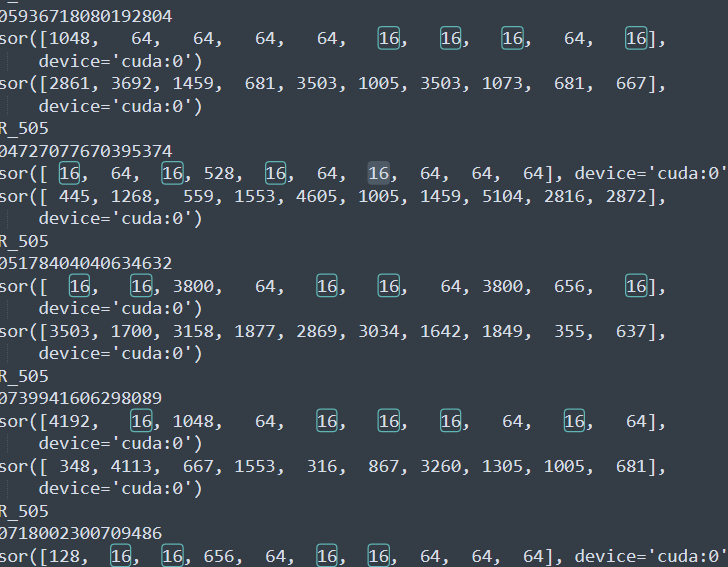

Problem symptoms: one constant value appears very frequently in the predictions, and if I train for more epochs, all predictions can end up being the same value. This constant changes when I modify the model's parameters, but it stays the same if I don't change them. Within a single training run, one constant first starts to appear with high frequency, then it switches to other constants as training goes on, and at the end it stays fixed.
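To track this symptom epoch by epoch, it may help to measure how dominant the most common predicted class is. Below is a minimal sketch, assuming the predictions are integer class labels (for example, the argmax of the model output). The helper name `dominant_prediction` and the sample values are hypothetical. Comparing the returned share with how often the same class occurs in the real targets shows whether the model is simply repeating the most common class.

```python
from collections import Counter
from typing import Sequence, Tuple


def dominant_prediction(preds: Sequence[int]) -> Tuple[int, float]:
    """Return the most frequent predicted class and the fraction of predictions equal to it."""
    if not preds:
        raise ValueError("preds must not be empty")
    # most_common(1) returns a list with one (label, count) pair.
    label, count = Counter(preds).most_common(1)[0]
    return label, count / len(preds)


# Hypothetical predictions collected during one epoch (made-up values).
epoch_preds = [7, 7, 2, 7, 7, 7, 1, 7]
label, share = dominant_prediction(epoch_preds)
print(label, share)  # 7 0.75
```

If this share keeps rising toward 1.0 across epochs, the predictions are collapsing to a single constant.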

General process: first, I assign a class number (label) to each category and convert the data in the dataset into a sequence of labels. Then I split the sequence into sliding windows of ten (V0 to V9 predict V10, V1 to V10 predict V11, and so on) and feed them into the LSTM, using `torch.nn.Embedding` for the embedding. The output goes through softmax and then into the cross-entropy loss function to compute the loss. Finally, I use the output and the true values to run backpropagation.
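The sliding-window step above can be sketched as follows. This is a minimal illustration, not my exact code: it assumes the labels are already integers, and the function name `make_windows` and the sample sequence are hypothetical. Each window of 10 labels is paired with the label that comes right after it; the windows would then be turned into an integer (long) tensor of shape `(batch, 10)` before going into `torch.nn.Embedding`.

```python
from typing import List, Sequence, Tuple


def make_windows(labels: Sequence[int], window: int = 10) -> Tuple[List[List[int]], List[int]]:
    """Split a label sequence into (input window, next label) pairs.

    With window=10: labels[0:10] -> labels[10], labels[1:11] -> labels[11], ...
    """
    inputs, targets = [], []
    for start in range(len(labels) - window):
        inputs.append(list(labels[start:start + window]))
        targets.append(labels[start + window])
    return inputs, targets


# Hypothetical label sequence produced by the labelling step (made-up values).
seq = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9]
X, y = make_windows(seq, window=10)
print(len(X))    # 3 windows for 13 labels
print(X[0], y[0])  # [3, 1, 4, 1, 5, 9, 2, 6, 5, 3] 5
```

A sequence of length N gives N - 10 training pairs, so a sequence shorter than 11 labels produces no pairs at all.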

Some of my terms and expressions may be wrong. Please forgive me and give me some advice.