Hello smani -

First, could you please post text rather than screenshots?

(It makes things searchable, lets screen readers work, etc.)

If you need to post an equation that you can’t format adequately

using the forum’s markdown, you could post a screenshot of

that, but do then refer to and describe the equation in your text.

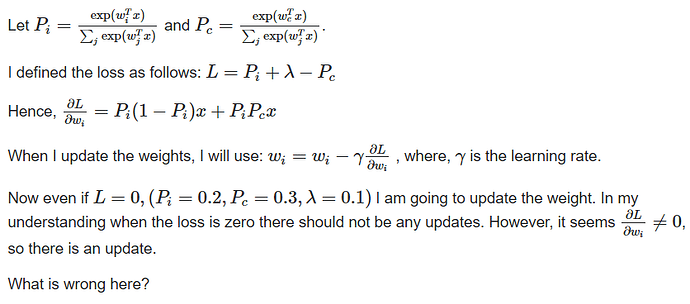

I haven’t verified your analytic computation of the gradient;

I’ll take your word for it.

But I think the issue is the following:

You say “In my understanding when the loss is zero there should

not be any updates.” In general, this isn’t correct. It’s true that we

often work with loss functions that are non-negative (never less

than zero), and often “loss = 0” implies “true solution found.” But

the value of the loss function has no particular meaning in the

pytorch framework. It is just something you minimize to train

your network. Nothing prevents your loss function from having

a minimum of less than zero, and nothing prevents pytorch from

finding that minimum.

In gradient descent, the (negative of the) gradient tells you the

direction to move in parameter space to make your loss smaller.

(That is, algebraically smaller – less positive, which is the same

as more negative.)

Your example illustrates this general point. Your loss function

has the constant lambda in it. But the locations of any minima

of your loss function don’t depend on lambda, the gradient is

independent of lambda, and the optimization algorithm

(presumably gradient descent) doesn’t care about lambda.

If your loss function happens to be zero for some particular

value of lambda, training won’t stop, nor should it – the fact

that your loss was zero was merely an artifact of that particular

value of lambda.

Good luck.

K. Frank