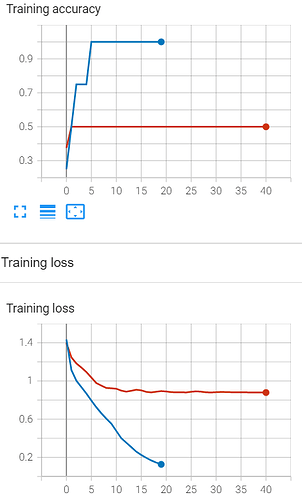

I am trying to train an RNN model to classify sentences into 4 classes, but it doesn’t seem to work. Overfitting 4 examples (blue line) worked, but the model fails to fit as few as 8 examples (red line), let alone the whole dataset.

I tried different learning rates and values of hidden_size, but it doesn’t seem to help. What am I missing? I know that if a model cannot overfit a small batch, its capacity should be increased, but in this case increasing the capacity has no effect.

The architecture is as follows:

    import torch.nn as nn


    class RNN(nn.Module):
        def __init__(self, input_size=1, hidden_size=256, num_classes=4):
            super().__init__()
            self.rnn = nn.RNN(input_size, hidden_size, batch_first=True)
            self.fc = nn.Linear(hidden_size, num_classes)

        def forward(self, x):
            # x: [batch_size, sequence_length]
            x = x.unsqueeze(-1)   # x: [batch_size, sequence_length, 1]
            _, h_n = self.rnn(x)  # h_n: [1, batch_size, hidden_size]
            h_n = h_n.squeeze(0)  # h_n: [batch_size, hidden_size]
            out = self.fc(h_n)    # out: [batch_size, num_classes]
            return out
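
For anyone who wants to reproduce this, here is a minimal sketch of the small-batch overfitting check described above. The data is a hypothetical stand-in: random integer token ids cast to float and random labels, since the post does not show the real data pipeline. The optimizer (Adam, lr=1e-3) and the 50 steps are also arbitrary assumptions, not the settings behind the plot. The class is repeated so the snippet runs on its own.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


class RNN(nn.Module):
    # Same architecture as in the post.
    def __init__(self, input_size=1, hidden_size=256, num_classes=4):
        super().__init__()
        self.rnn = nn.RNN(input_size, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        x = x.unsqueeze(-1)           # [batch_size, sequence_length, 1]
        _, h_n = self.rnn(x)          # [1, batch_size, hidden_size]
        return self.fc(h_n.squeeze(0))  # [batch_size, num_classes]


# Hypothetical stand-in data (assumption): 8 "sentences" of 12 token ids each.
batch_size, seq_len, num_classes = 8, 12, 4
x = torch.randint(0, 100, (batch_size, seq_len)).float()  # nn.RNN needs float input
y = torch.randint(0, num_classes, (batch_size,))

model = RNN(num_classes=num_classes)
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)  # assumed settings

# Shape check before training.
logits = model(x)
print(tuple(logits.shape))

# Train repeatedly on the same fixed batch and record the loss.
losses = []
for step in range(50):
    optimizer.zero_grad()
    loss = criterion(model(x), y)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

Changing `batch_size` from 4 to 8 in this sketch mirrors the blue and red runs in the plot, although with random stand-in data the loss curves will not match the ones shown.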