I trained a neural network with the following code:

    import torch
    from torch import nn

    class linear_regression(nn.Module):
        def __init__(self):
            super().__init__()
            # Learnable parameters, initialized uniformly at random in [0, 1)
            self.weight = nn.Parameter(torch.rand(1,
                                                  requires_grad=True,
                                                  dtype=torch.float))
            self.bias = nn.Parameter(torch.rand(1,
                                                requires_grad=True,
                                                dtype=torch.float))

For dtype, I passed dtype=float instead of dtype=torch.float.

Training didn’t raise any error. Instead, the model with dtype=float predicted the values accurately, unlike the one with dtype=torch.float. Why does PyTorch behave this way?

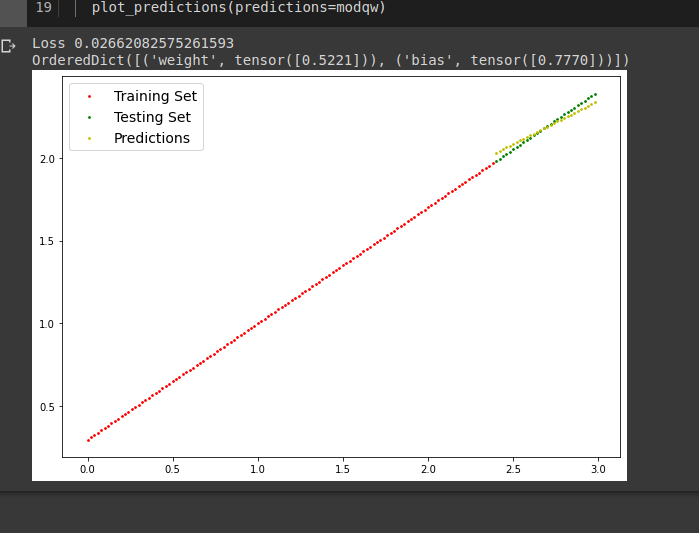

Predicted values with dtype=torch.float

I don’t fully understand the issue, but note that Python’s float is a C double, so PyTorch maps dtype=float to torch.float64 (also known as torch.double), as seen here:

    import torch
    from torch import nn

    weight = nn.Parameter(torch.rand(1, requires_grad=True, dtype=torch.float))
    print(weight.dtype)
    # torch.float32

    weight = nn.Parameter(torch.rand(1, requires_grad=True, dtype=float))
    print(weight.dtype)
    # torch.float64
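
As for why no error shows up: elementwise operations follow PyTorch's type promotion rules, so float64 parameters combined with float32 inputs quietly produce float64 results. Here is a minimal sketch of that, assuming the model computes weight * x + bias. The forward pass isn't shown in the question, so `forward`, the class name `LinearRegression`, the `dtype` argument, and the input `x` are made up for illustration:

```python
import torch
from torch import nn


class LinearRegression(nn.Module):
    """Hypothetical stand-in for the model in the question."""

    def __init__(self, dtype):
        super().__init__()
        self.weight = nn.Parameter(torch.rand(1, dtype=dtype))
        self.bias = nn.Parameter(torch.rand(1, dtype=dtype))

    def forward(self, x):
        # Assumed forward pass: a plain elementwise linear model
        return self.weight * x + self.bias


# Illustrative float32 input
x = torch.linspace(0, 1, 10, dtype=torch.float32)

model_f32 = LinearRegression(torch.float)  # parameters are torch.float32
model_f64 = LinearRegression(float)        # parameters are torch.float64

out_f32 = model_f32(x)
out_f64 = model_f64(x)

print(out_f32.dtype)
# torch.float32

print(out_f64.dtype)
# torch.float64 (float32 input promoted to match the float64 parameters)
```

Layers that call a matrix multiplication internally, such as nn.Linear, are stricter and will usually complain about mismatched dtypes, so this silent promotion mostly applies to hand-written elementwise models like the one above.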

It was a logical error on my part: I had trained the wrong model.