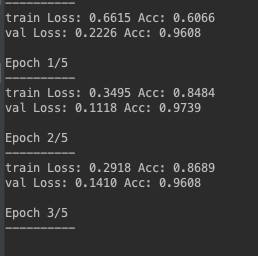

When using Inception v3, the test accuracy is much higher than the training accuracy. Why?

Dropout layers (or other regularization techniques that are disabled during testing) can sometimes cause this effect.

Because neurons are dropped at random, the model is effectively “smaller” during training and therefore has less capacity, which can in turn lower the training accuracy.
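To make the train/test difference concrete, here is a minimal pure-Python sketch of inverted dropout, the scheme PyTorch's `nn.Dropout` follows: in training mode each unit is zeroed with probability `p` and the survivors are scaled by `1/(1-p)`; in eval mode the input passes through unchanged. The function `dropout` and its arguments are illustrative only, not PyTorch's API.

```python
import random
from typing import List


def dropout(x: List[float], p: float, training: bool, rng: random.Random) -> List[float]:
    """Illustrative inverted dropout: random masking in training, identity in eval."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(x)  # eval mode: every unit is kept, no rescaling needed
    keep = 1.0 - p
    # Keep each unit with probability (1 - p) and scale survivors by 1/(1 - p),
    # so each unit's expected value equals its eval-mode value.
    return [v / keep if rng.random() < keep else 0.0 for v in x]


rng = random.Random(0)
x = [1.0] * 10
print(dropout(x, p=0.5, training=True, rng=rng))   # every entry is either 0.0 or 2.0
print(dropout(x, p=0.5, training=False, rng=rng))  # identical to x
```

Because the rescaling happens during training, nothing needs to change at test time. The training-time forward pass, however, is a noisy, thinned version of the network, so accuracy logged during training with dropout active can come out lower than test accuracy.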

On the other hand, it might also depend on the data you are using and how it is split.

If you just got lucky with the split (or even cherry-picked the validation set), this observation is also common.

The model was trained on CIFAR-10, so it uses the standard train/test split.

Dropout is active only during training; PyTorch disables it at test time, once the model is switched to evaluation mode with `model.eval()`. This affects the accuracy: it is better without dropout, i.e. at test time, which is what you need.
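One way to check that dropout explains the gap is to re-measure the training accuracy in eval mode, the same way the test accuracy is measured, instead of relying on the running accuracy logged during training. The sketch below assumes a generic classifier and data loaders; the tiny model and random tensors are hypothetical stand-ins for Inception v3 and CIFAR-10, and the helper name `accuracy` is illustrative.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def accuracy(model: nn.Module, loader: DataLoader) -> float:
    """Fraction of correctly classified samples, measured in eval mode."""
    was_training = model.training
    model.eval()  # disables dropout (and makes any BatchNorm use running stats)
    correct, total = 0, 0
    with torch.no_grad():
        for inputs, targets in loader:
            preds = model(inputs).argmax(dim=1)
            correct += (preds == targets).sum().item()
            total += targets.numel()
    model.train(was_training)  # restore whichever mode the model was in
    return correct / total


# Hypothetical toy setup standing in for Inception v3 on CIFAR-10.
torch.manual_seed(0)
model = nn.Sequential(nn.Linear(8, 32), nn.ReLU(), nn.Dropout(p=0.5), nn.Linear(32, 10))
train_ds = TensorDataset(torch.randn(256, 8), torch.randint(0, 10, (256,)))
test_ds = TensorDataset(torch.randn(128, 8), torch.randint(0, 10, (128,)))
train_loader = DataLoader(train_ds, batch_size=64, shuffle=True)
test_loader = DataLoader(test_ds, batch_size=64)

train_acc = accuracy(model, train_loader)
test_acc = accuracy(model, test_loader)
print(f"train acc (eval mode): {train_acc:.3f}, test acc: {test_acc:.3f}")
```

If the eval-mode training accuracy is close to or above the test accuracy, the gap you saw most likely came from dropout being active while the training accuracy was logged, not from the data split.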