I'd like to try writing a binary version of the Conv2d operation for an XNOR conv net (and upstream it if I succeed), and I do not want to write it from scratch. I found that the functional.py file contains a reference to _ConvNd = torch._C._functions.ConvNd, and I do not know where to go next. Dear PyTorch developers, could you please share some of the CUDA kernels from the internals of your engine?

Our logic for convolution is a little convoluted. It could go through cuDNN, or we can run it as a matrix multiply (there are probably other cases too). The entry point is here: https://github.com/pytorch/pytorch/blob/master/aten/src/ATen/native/Convolution.cpp

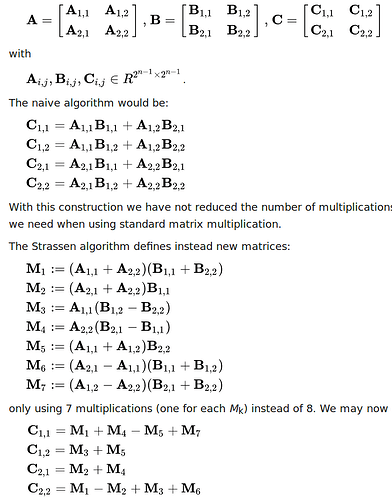

@richard I just realized that I cannot use any of the Winograd/GEMM/FFT algorithms to do XNOR conv2d, matrix multiplication, or anything similar. These algorithms introduce extra additions, so every time I compute a nested term in, for example, Strassen fast matrix multiplication, I leave the {-1, 1} range for a bigger one, {-2, 0, 2}, and so on.

The same goes for Winograd and FFT. So one can only use a naive, textbook-style implementation of XNOR Conv2d, without any Karatsuba-style speedup.
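To make the range problem concrete, here is a tiny sketch (plain Python, not taken from any library) that enumerates the operand sum in Strassen's first product, M1 = (A11 + A22) * (B11 + B22), when every matrix entry is restricted to {-1, 1}. The variable names are just for illustration.

```python
from itertools import product

# Entries of a binarized matrix can only be -1 or +1.
values = (-1, 1)

# Strassen's first product multiplies (A11 + A22) by (B11 + B22).
# Collect every value the pre-addition A11 + A22 can take.
intermediate = sorted({a11 + a22 for a11, a22 in product(values, repeat=2)})

print(intermediate)  # [-2, 0, 2]
```

The sum is no longer a single bit, so the next multiplication cannot be done with one XNOR, and each further level of recursion widens the range again.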

Check this paper for how they (and others) learn binary kernel weights.

Rana, thank you from the bottom of my heart. I am really interested in binary networks. But my question is about efficient binary convolution kernels (a Conv2d analog for XNOR-Net) for fast inference. Do you or your colleagues know which conv2d kernels to use for inference?

In the paper I sent, they refer to and compare against this work:

XNOR-Net: ImageNet Classification Using Binary Convolutional Neural Networks

https://arxiv.org/abs/1603.05279

During inference, they perform forward propagation with the binarized weights.
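For reference, when both the inputs and the weights are binarized, the inner product at the heart of a convolution can be computed with XNOR and a bit count, which is the kind of operation the XNOR-Net paper relies on. Below is a minimal pure-Python sketch of that identity, not the paper's code and not a PyTorch kernel. The helper names `pack_bits` and `xnor_dot` are made up for this example, and the encoding (+1 as bit 1, -1 as bit 0) is an assumption.

```python
def pack_bits(values):
    """Pack a sequence of +1/-1 values into an int (+1 -> bit 1, -1 -> bit 0)."""
    word = 0
    for i, v in enumerate(values):
        if v == 1:
            word |= 1 << i
    return word


def xnor_dot(a_word, b_word, n):
    """Dot product of two packed +1/-1 vectors of length n."""
    mask = (1 << n) - 1
    # XNOR sets a bit wherever the two vectors agree (product is +1).
    xnor = ~(a_word ^ b_word) & mask
    matches = bin(xnor).count("1")
    # Each match contributes +1 and each mismatch -1.
    return 2 * matches - n


a = [1, -1, 1, 1, -1, -1, 1, -1]
b = [1, 1, -1, 1, -1, 1, 1, -1]

reference = sum(x * y for x, y in zip(a, b))
result = xnor_dot(pack_bits(a), pack_bits(b), len(a))
assert result == reference
```

A real inference kernel would do the same thing on packed 32- or 64-bit words with a hardware popcount instruction, looping over the im2col-style patches of the input.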