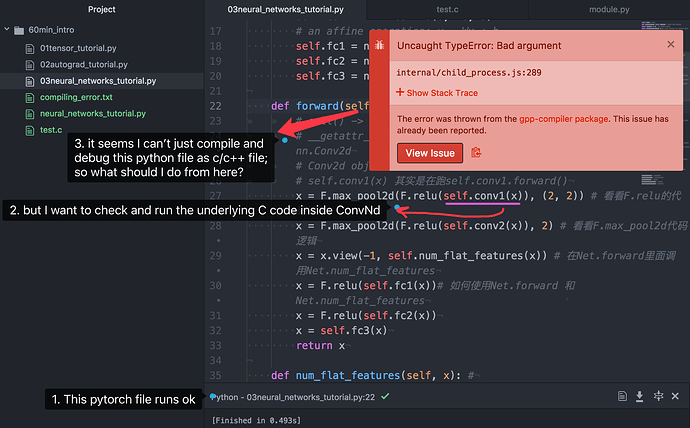

Well, you usually want to debug at the C level when you have segmentation faults, because Python gives you no information about what went wrong inside the C code.

If you want to debug from Python, you can use pdb.
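pdb is normally used interactively, for example by putting `import pdb; pdb.set_trace()` where you want to stop, or by running `python -m pdb example.py`. To show what a session does without needing a terminal, here is a minimal sketch that feeds pdb its commands from a string. The function `add` is a hypothetical stand-in for your own code.

```python
import io
import pdb


def add(a, b):
    # hypothetical function we want to inspect
    total = a + b
    return total


# Commands you would normally type at the (Pdb) prompt:
#   p a + b  -> evaluate an expression in the paused frame and print it
#   c        -> continue running until the function returns
commands = io.StringIO("p a + b\nc\n")
output = io.StringIO()

# Giving pdb explicit stdin/stdout makes it read commands from the string
# instead of the terminal. readrc=False ignores any .pdbrc file and
# nosigint=True leaves the Ctrl-C handler alone.
debugger = pdb.Pdb(stdin=commands, stdout=output, readrc=False, nosigint=True)

# runcall() calls add(2, 3) and opens the debugger prompt as soon as
# add() is entered; it returns whatever add() returns.
result = debugger.runcall(add, 2, 3)

print(result)             # 5
print(output.getvalue())  # transcript of the pdb session
```

In real use you would type those commands yourself at the `(Pdb)` prompt.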

Note: in most cases you don’t need to go into gdb to debug a Python program, because the libraries are designed to give meaningful error messages rather than segfault. In the NumPy example below I use an unsafe NumPy function on purpose to trigger a segfault; that should not happen with normal NumPy code. The same applies to PyTorch, so if you observe segmentation faults, please let us know.

If you want to debug at the C level, you can use gdb, for example. Say you have a Python script, example.py, that causes the problem. Here is an example in NumPy, saved as example.py:

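```python
import numpy as np
from numpy.lib.stride_tricks import as_strided

a = np.array([1, 2, 3])

# as_strided does no bounds checking: with a huge stride,
# b[1] points far outside the memory that backs `a`
b = as_strided(a, shape=(2,), strides=(10**12,))

# writing to invalid memory, will (typically) segfault
b[1] = 1
```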

If you run it with `python example.py`, it will crash with a segmentation fault.
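Before going to gdb, it can help to confirm from Python that the script really died from a segfault, and to see which Python line triggered it. A minimal sketch, assuming a POSIX system: it runs a crashing snippet in a child interpreter with the standard-library `faulthandler` enabled (`-X faulthandler`) and checks the return code. To stay independent of NumPy it uses `ctypes.string_at(0)`, the crash example from the `faulthandler` docs, instead of example.py; `run_with_faulthandler` is a hypothetical helper name.

```python
import signal
import subprocess
import sys

# ctypes.string_at(0) reads from address 0, which crashes the interpreter;
# it is the crash example used in the faulthandler documentation.
CRASHING_SNIPPET = "import ctypes; ctypes.string_at(0)"


def run_with_faulthandler(snippet):
    """Run `snippet` in a fresh interpreter with faulthandler enabled."""
    return subprocess.run(
        [sys.executable, "-X", "faulthandler", "-c", snippet],
        capture_output=True,
        text=True,
    )


proc = run_with_faulthandler(CRASHING_SNIPPET)

# On POSIX, a return code of -N means the child was killed by signal N.
if proc.returncode == -signal.SIGSEGV:
    print("child process died with SIGSEGV")

# faulthandler writes the Python traceback of the crash to stderr,
# starting with "Fatal Python error: Segmentation fault".
print(proc.stderr)
```

For the script itself, `python -X faulthandler example.py` does the same thing. It only shows the Python side of the crash; for the C frames you still need gdb.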

To run it under gdb, you can do something like

```
gdb python
```

and then, once you are at the gdb prompt, run

```
run example.py
```

This runs the program and, when it crashes, lets you inspect where it crashed by running `bt` (short for backtrace). You can find more information on gdb options online.
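If you want the backtrace without typing commands at the gdb prompt, gdb's `-batch`, `-ex` and `--args` options can do the same steps in one go; from a shell that is `gdb -batch -ex run -ex bt --args python example.py`. A minimal sketch that builds this command from Python, assuming gdb is on your PATH; `gdb_backtrace_command` is a hypothetical helper name.

```python
import os
import shutil
import subprocess
import sys


def gdb_backtrace_command(script, python=sys.executable):
    """Build a gdb command line that runs `python script` and prints a backtrace."""
    return [
        "gdb",
        "-batch",      # run the -ex commands below, then exit
        "-ex", "run",  # start the program
        "-ex", "bt",   # after the crash, print the backtrace
        "--args", python, script,  # the program to debug and its arguments
    ]


if __name__ == "__main__":
    cmd = gdb_backtrace_command("example.py")
    if shutil.which("gdb") and os.path.exists("example.py"):
        subprocess.run(cmd, check=False)
    else:
        print("would run:", " ".join(cmd))
```

Batch mode is handy for collecting a backtrace to paste into a bug report; for poking around (`frame`, `info locals`, etc.) the interactive session above is more flexible.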