Hi,

I have an output of shape (N, seq_len, C) and a target of shape (N, C), where N is the batch size and C is the number of classes.

It is a multilabel classification problem. Which loss function should I use?

Let me take a common example where we face this kind of situation.

In sequence classification with models like BERT, a special token (usually called the classification token) is added at the front of every sequence. The model returns a representation of the sequence, usually of dimension N * seq_len * emb_dim. Instead of passing all of it to the classification layer, which would give output logits of dimension N * seq_len * C (as in your case), we select only the representation of the classification token (of dimension N * emb_dim). After projection, this gives output logits of dimension N * C.
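As a rough sketch of the classification-token approach in PyTorch: the sizes and the random `hidden` tensor below are made up for illustration and stand in for a real encoder output. `nn.BCEWithLogitsLoss` is used here on the assumption that the (N, C) target holds independent 0/1 labels per class, which is the usual setup for multilabel classification.

```python
import torch
import torch.nn as nn

# Hypothetical sizes, chosen only for this example.
N, seq_len, emb_dim, C = 4, 10, 16, 6

# Stand-in for the encoder output: one vector per token.
hidden = torch.randn(N, seq_len, emb_dim)

# Keep only the first position (the classification token).
cls_repr = hidden[:, 0, :]                # (N, emb_dim)

# Project to one logit per class.
classifier = nn.Linear(emb_dim, C)
logits = classifier(cls_repr)             # (N, C)

# Multilabel target: an independent 0/1 per class, stored as floats.
target = torch.randint(0, 2, (N, C)).float()

# Applies a sigmoid per class internally, so pass raw logits.
criterion = nn.BCEWithLogitsLoss()
loss = criterion(logits, target)
```

With logits and target both of shape (N, C), the loss is a single scalar averaged over all N * C entries.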

But if you want to use an output of dimension N * seq_len * C, the right loss function will depend on the task you want to achieve. So please give more information about what you want to do, and where the output and target come from.

I am doing an emotion recognition task, where I have images, transcriptions and targets.

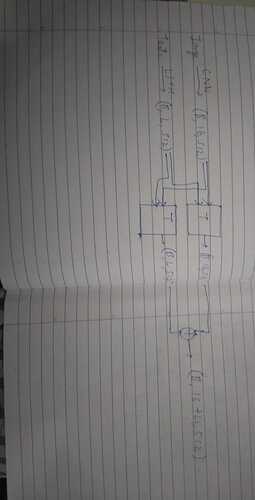

The architecture is as follows:

where T is the cross-attention transformer layer. I can get the value of L (the text sequence length) only at runtime from the train loader, so I don’t know what to do.
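One possible way to handle a sequence length that is only known at runtime is to pool over the sequence dimension before computing the loss, so the result no longer depends on L. The sketch below is an assumption about how this could be done, not the architecture in the image: `masked_mean_pool` is a hypothetical helper, and the random tensors stand in for the per-token logits and a padding mask coming from the loader.

```python
import torch
import torch.nn as nn

def masked_mean_pool(token_logits, mask):
    """Average per-token logits over real (non-padding) positions.

    token_logits: (N, L, C); mask: (N, L) with 1 for real tokens, 0 for padding.
    Returns a tensor of shape (N, C), whatever L is.
    """
    mask = mask.unsqueeze(-1).to(token_logits.dtype)   # (N, L, 1)
    summed = (token_logits * mask).sum(dim=1)          # (N, C)
    counts = mask.sum(dim=1).clamp(min=1.0)            # (N, 1), avoids divide by zero
    return summed / counts

N, C = 3, 6                      # hypothetical sizes
criterion = nn.BCEWithLogitsLoss()

for L in (5, 12):                # L changes from batch to batch
    token_logits = torch.randn(N, L, C)
    mask = torch.ones(N, L)
    mask[0, L // 2:] = 0         # pretend the first sample is padded
    pooled = masked_mean_pool(token_logits, mask)      # (N, C)
    target = torch.randint(0, 2, (N, C)).float()
    loss = criterion(pooled, target)
```

Because the pooling uses no learned weights tied to L, the same module works for any sequence length the loader produces. Selecting a single position (for example the first token) instead of averaging is another option with the same property.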