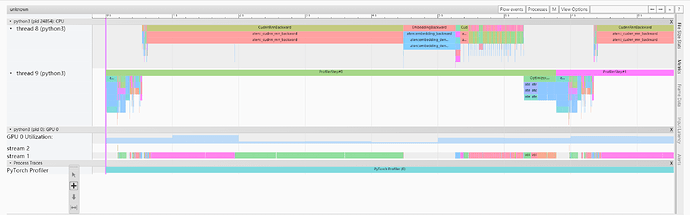

I’m currently debugging an OCR model, and there might be an issue related to CPU thread synchronization. Using the PyTorch profiler, I found that my CPU process has 2 threads: one runs the forward ops, `optimizer.zero_grad()`, and later `optimizer.step()` in each iteration; the other runs the backward ops (what gets executed when you call `loss.backward()`).
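To double-check which thread actually executes the backward pass, independently of how the profiler labels it, a small sketch like the following could help. It assumes a recent PyTorch build. `RecordThread` and `which_threads` are hypothetical helper names: the custom `torch.autograd.Function` is just an identity op whose `forward` and `backward` record `threading.get_ident()`. If the two IDs differ, backward is running on an autograd worker thread rather than on the thread that called `backward()`.

```python
import threading

import torch


class RecordThread(torch.autograd.Function):
    """Hypothetical identity op that records which thread runs forward/backward."""

    seen = {}

    @staticmethod
    def forward(ctx, x):
        # Runs on the thread that calls RecordThread.apply(...)
        RecordThread.seen["forward"] = threading.get_ident()
        return x.clone()

    @staticmethod
    def backward(ctx, grad_output):
        # Runs on whichever thread the autograd engine uses for this node
        RecordThread.seen["backward"] = threading.get_ident()
        return grad_output


def which_threads(device="cpu"):
    """Run one forward/backward pass and return the recorded thread IDs."""
    RecordThread.seen.clear()
    x = torch.randn(4, device=device, requires_grad=True)
    loss = RecordThread.apply(x).sum()
    loss.backward()
    return dict(RecordThread.seen)


if __name__ == "__main__":
    print("calling thread:", threading.get_ident())
    ids = which_threads("cpu")
    print("cpu:", ids, "same thread:", ids["forward"] == ids["backward"])
    if torch.cuda.is_available():
        ids = which_threads("cuda")
        print("cuda:", ids, "same thread:", ids["forward"] == ids["backward"])
```

My understanding (not verified against every version) is that a CPU-only graph is driven on the thread that calls `backward()`, while CUDA nodes are handed to per-device autograd worker threads, so comparing the `cpu` and `cuda` results may show which case applies here.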

As my debugging goes on, I’m wondering whether it is possible to use only a single CPU thread for all of this, since in my case there is no parallelism between these 2 threads: they appear to run consecutively.

I checked the source code. It seems the starting point for the backward function is pytorch/torch/csrc/autograd/python_engine.cpp (main branch of pytorch/pytorch on GitHub), which later calls into this function in pytorch/torch/csrc/autograd/engine.cpp.

From its documentation, it looks to me like the `init_local_ready_queue()` function creates another thread. Is that right? Should I pass a `ready_queue` to it, and if so, how?