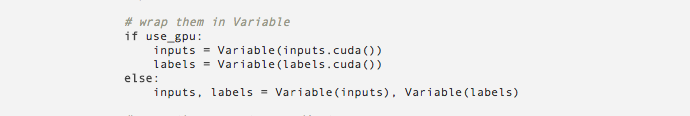

Why does PyTorch differentiate between CUDA Variables/Tensors and CPU Variables/Tensors? It feels like this just makes the code a little longer, more error-prone, and harder to maintain. For example, someone could forget to move a variable to CUDA, severely bottlenecking performance from that point on.

For that matter, why wouldn’t it make sense to have a simple wrapper class around Variable that initializes and propagates computation based on whether or not CUDA is in use? In that mode, if there’s a CUDA-enabled GPU, the framework uses it; otherwise it doesn’t. Wouldn’t that simplify a lot of code?
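
To make the idea concrete, here is a rough sketch of the kind of wrapper I have in mind. It is only an illustration, not anything PyTorch ships: `DEVICE` and `auto_tensor` are hypothetical names, and it assumes a PyTorch version that has `torch.device` and the `device=` keyword (0.4 or later) rather than the older `Variable` API.

```python
import torch

# Pick the GPU when one is available, otherwise fall back to the CPU.
DEVICE = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def auto_tensor(data, **kwargs):
    """Hypothetical helper: create a tensor on DEVICE so callers never name a device."""
    return torch.tensor(data, device=DEVICE, **kwargs)


x = auto_tensor([[1.0, 2.0], [3.0, 4.0]])
w = auto_tensor([[0.5], [0.5]])

# Both operands live on the same device, so the product does too.
y = x @ w
print(y.device.type, y.cpu().tolist())
```

With something like this, the same script would run unchanged on a machine with or without a GPU, and nobody would have to remember to call `.cuda()` on each tensor.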