I wrote a simple, bare-bones program to check GPU RAM usage with a pretrained ResNet-34 from the model zoo. I found that creating the model and moving it to the GPU uses about 532 MB of GPU RAM. Loading the weights seems to have no further effect on RAM usage, which suggests PyTorch reserves the memory for the subsequent loading of weights. The weight file is only 87.3 MB on disk. So why does the model take up 532 MB of GPU RAM? Even if we count class/function overhead, why is it using over 5.5x the space it occupies on disk? Is there any way to reduce this usage?
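As a sanity check on the 87.3 MB figure, the ResNet-34 parameter count can be derived from its layer shapes. This sketch assumes the standard torchvision layout: a 7x7 stem convolution, BasicBlocks in stages of [3, 4, 6, 3] with 64/128/256/512 channels, bias-free convolutions, a 1x1 projection where the channel count changes, and a 1000-class fully connected layer. At 4 bytes per float32 value the parameters come to about 87.2 MB, so the file on disk is essentially the weights (plus BatchNorm running statistics and serialization overhead), and whatever else nvidia-smi reports is not weight storage.

```python
# Sketch: count ResNet-34 parameters from its architecture.
# Assumes the standard torchvision layout; BatchNorm running stats are buffers, not parameters.

def conv(in_ch: int, out_ch: int, k: int) -> int:
    """Weights of a bias-free k x k convolution."""
    return out_ch * in_ch * k * k

def bn(ch: int) -> int:
    """Learnable BatchNorm parameters: weight and bias."""
    return 2 * ch

def basic_block(in_ch: int, out_ch: int) -> int:
    """Two 3x3 conv + BN pairs, plus a 1x1 projection when the channel count changes."""
    n = conv(in_ch, out_ch, 3) + bn(out_ch) + conv(out_ch, out_ch, 3) + bn(out_ch)
    if in_ch != out_ch:
        n += conv(in_ch, out_ch, 1) + bn(out_ch)
    return n

def resnet34_params(num_classes: int = 1000) -> int:
    total = conv(3, 64, 7) + bn(64)  # stem
    in_ch = 64
    for out_ch, blocks in [(64, 3), (128, 4), (256, 6), (512, 3)]:
        for _ in range(blocks):
            total += basic_block(in_ch, out_ch)
            in_ch = out_ch
    total += 512 * num_classes + num_classes  # fully connected layer (weight + bias)
    return total

params = resnet34_params()
print(params, params * 4)  # float32 = 4 bytes per value -> 21797672 87190688
```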

Can you post how you check the GPU RAM usage?

PyTorch probably just cached the CUDA memory for future allocations.
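A minimal sketch of what caching means, assuming a CUDA-capable PyTorch install: after a tensor is freed, `torch.cuda.memory_allocated` drops, but `torch.cuda.memory_reserved` (called `memory_cached` in older releases) still holds the block, and nvidia-smi keeps counting it until `torch.cuda.empty_cache()` hands unused blocks back to the driver. The 64 MiB size is an arbitrary choice for illustration.

```python
import torch

def caching_demo(n_bytes: int = 64 * 1024 * 1024):
    """Return (allocated, reserved) byte counts after alloc, after del, after empty_cache."""
    if not torch.cuda.is_available():
        return None
    stats = []
    x = torch.empty(n_bytes, dtype=torch.uint8, device="cuda")  # 1 byte per element
    stats.append((torch.cuda.memory_allocated(), torch.cuda.memory_reserved()))
    del x  # the tensor is gone, but its block stays in PyTorch's cache
    stats.append((torch.cuda.memory_allocated(), torch.cuda.memory_reserved()))
    torch.cuda.empty_cache()  # release unused cached blocks back to the driver
    stats.append((torch.cuda.memory_allocated(), torch.cuda.memory_reserved()))
    return stats

result = caching_demo()
if result is None:
    print("CUDA not available")
else:
    labels = ["after alloc", "after del", "after empty_cache"]
    for label, (alloc, reserved) in zip(labels, result):
        print(f"{label:>17}: allocated={alloc / 2**20:.1f} MiB  reserved={reserved / 2**20:.1f} MiB")
```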

Using nvidia-smi. I expanded my script to include output from PyTorch's own memory-checking functions.

```python
import torch
from torchvision import models

# Build ResNet-34 with random weights (newer torchvision spells this weights=None),
# move it to the GPU, then load the downloaded checkpoint into it.
a = models.resnet34(pretrained=False)
a.cuda()
a.load_state_dict(torch.load('./resnet34-333f7ec4.pth'))
a.eval()

# memory_cached / max_memory_cached are the older names of
# memory_reserved / max_memory_reserved.
print("Max Memory occupied by tensors: ", torch.cuda.max_memory_allocated(device=None))
print("Max Memory Cached: ", torch.cuda.max_memory_cached(device=None))
print("Current Memory occupied by tensors: ", torch.cuda.memory_allocated(device=None))
print("Current Memory cached occupied by tensors: ", torch.cuda.memory_cached(device=None))

input("press any key to continue")

# One forward pass on a random 224x224 RGB input.
b = torch.rand(1,3,224,224)
b = b.to('cuda')
c = a(b)

print("\n\nMax Memory occupied by tensors: ", torch.cuda.max_memory_allocated(device=None))
print("Max Memory Cached: ", torch.cuda.max_memory_cached(device=None))
print("Current Memory occupied by tensors: ", torch.cuda.memory_allocated(device=None))
print("Current Memory cached occupied by tensors: ", torch.cuda.memory_cached(device=None))

input("Press enter to exit")
```

Here is the output:

```
Max Memory occupied by tensors: 87333888
Max Memory Cached: 90570752
Current Memory occupied by tensors: 87333888
Current Memory cached occupied by tensors: 90570752
press any key to continue

Max Memory occupied by tensors: 146021376
Max Memory Cached: 156631040
Current Memory occupied by tensors: 119782400
Current Memory cached occupied by tensors: 156631040
Press enter to exit
```

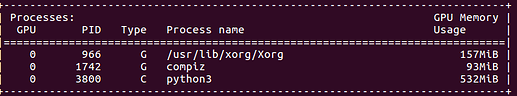

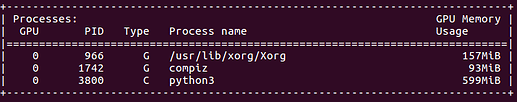

Im also attaching the output from nvidia-smi:

Before passing an input

After passign an input:

There is still a huge discrepancy between the maximum cached memory and the memory usage shown by nvidia-smi. Am I using the functions incorrectly?

PyTorch uses some memory for metadata and CUDA context management, and that memory is not exposed through the Python API yet.
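One rough way to estimate the part the allocator statistics do not cover, assuming a PyTorch version recent enough to have `torch.cuda.mem_get_info` (free and total device memory in bytes, as the driver sees it): subtract `memory_reserved()` from the device-wide used memory. The remainder approximates the CUDA context plus anything else on the GPU, including other processes, so the number is only meaningful on an otherwise idle device.

```python
import torch

def non_allocator_overhead(device: int = 0):
    """Rough estimate of GPU memory used outside PyTorch's caching allocator, in bytes."""
    if not torch.cuda.is_available():
        return None
    torch.cuda.init()  # make sure the CUDA context exists before measuring
    free, total = torch.cuda.mem_get_info(device)
    used_on_device = total - free  # everything on this GPU, all processes included
    return used_on_device - torch.cuda.memory_reserved(device)

overhead = non_allocator_overhead()
if overhead is None:
    print("CUDA not available")
else:
    print(f"not tracked by the allocator: {overhead / 2**20:.0f} MiB")
```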

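You can try:

```python
import torch

a = torch.Tensor(1).cuda()  # a one-element tensor: forces CUDA context creation
input("Check nvidia-smi now, then press Enter to exit")  # keep the process alive
```

Then run nvidia-smi to see how much extra memory PyTorch requires.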
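The same experiment can be scripted. This sketch assumes `nvidia-smi` is on the PATH, that CUDA device 0 is the GPU nvidia-smi lists as index 0 (this can differ under `CUDA_VISIBLE_DEVICES`), and that the GPU is otherwise idle. It reads `memory.used` (reported in MiB) via `--query-gpu` before and after the first CUDA tensor is created, then subtracts what PyTorch's allocator has reserved. Readings are device-wide, so other processes will skew the result.

```python
import shutil
import subprocess

import torch

def gpu_used_mib(index: int = 0) -> int:
    """Device-wide used memory for one GPU, as reported by nvidia-smi (MiB)."""
    out = subprocess.run(
        ["nvidia-smi", f"--id={index}", "--query-gpu=memory.used",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return int(out.strip())

def context_overhead_mib():
    """Estimated MiB used by PyTorch beyond its allocator's reserved memory."""
    if shutil.which("nvidia-smi") is None:
        return None
    before = gpu_used_mib()  # read before any CUDA tensor exists
    if not torch.cuda.is_available():
        return None
    probe = torch.Tensor(1).cuda()  # first CUDA tensor: creates the context
    after = gpu_used_mib()
    reserved_mib = torch.cuda.memory_reserved() / 2**20
    del probe
    return after - before - reserved_mib

overhead = context_overhead_mib()
print("unavailable" if overhead is None else f"{overhead:.0f} MiB outside the allocator")
```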