Recently, I compiled pytorch according to the tips above GitHub, but I found that the pytorch library generated by compilation will slow down when running convolution operation. Through NSYS, I found that the API duration on the CPU side has become longer. What is the reason and how to solve it.

Could you explain which calls are seeing a slowdown on the CPU and how this would be related to cuDNN, please?

This is my code.

input_size = [128, 128, 112, 112]

conv_param = [128, 256, [3,3], 1, 1, 1, 1, False]

input = torch.randn([128, input_size[1], input_size[2], input_size[3]], device = 'cuda:0')

cnt = 100

net = torch.nn.Conv2d(*conv_param).to('cuda:0')

output = net(input)

start = time.time()

torch.cuda.synchronize()

while cnt > 0:

output = net(input)

cnt -= 1

torch.cuda.synchronize()

end = time.time()

print('Duration is ', end - start)

Compile pytorch and run the above code. The result is 7.132707357406616s

PIP installs pytorch and runs the above code. The result is 4.728105068206787s

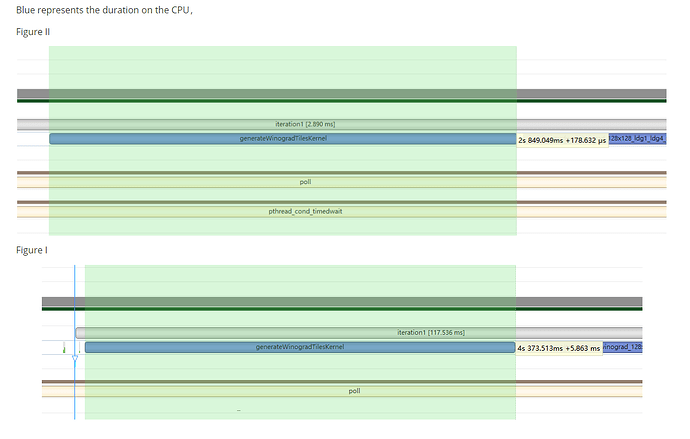

The operation of NSYS (another program, but the problems described are consistent) is shown in the following figure. Figure 1 shows the results generated by compilation, and Figure 2 shows the results generated by direct installation. We can see that the CPU duration of generatewinogradtileskernel in Figure 1 is 5.863 milliseconds. In Figure 2, the CPU duration of generatewinogradtileskernel is 178.632 microseconds.

Which CUDA and cuDNN version are you using in the source build vs. the binary (i.e. which PyTorch version and CUDA runtime did you select for the binaries)?

I would assume that the change in all 3 libs (torch, CUDA, cuDNN) would create different runtimes, but it’s hard to tell where the difference is coming from when all these libs were moved.

Source build:

cuda: 11.2

cudnn: 8201

torch: 1.13.0a0+git7360b53

binary:

cuda: 10.2

cudnn: 7605

torch: 1.10.0+cu102

You could try the latest releases for the source build (CUDA 11.7.0 and cuDNN 8.4) and/or the CUDA11.6 binary which comes with cuDNN 8.3.2 and see how it compares to these old releases.

Thank you for your patient reply. I’ll try what you said.