I’m reading this paper on a DCGAN and the authors write:

For upsampling by the generator, we use strided convolution transpose operations instead of pixel shuffling [43] or interpolation, as we found this to work better in practice. We set the slope of the LeakyReLU units to 0.2 and the dropout rate to 0.1 during training. Importantly, we did not normalize input maps but scaled them down by a constant factor.
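One possible reading of "scaled them down by a constant factor" is that every pixel value is divided by the same number. The passage itself does not say whether the factor applies to values or to resolution. Below is a minimal sketch of that reading, next to per-map min-max normalization for contrast. The constant `DOWN_SCALE`, the helper names, and the pixel values are all made up for illustration and are not taken from the paper.

```python
DOWN_SCALE = 100.0  # hypothetical constant, chosen only for illustration


def scale_down(values, factor=DOWN_SCALE):
    """Divide every value by a fixed constant; the number of values is unchanged."""
    return [v / factor for v in values]


def min_max_normalize(values):
    """Rescale one map to [0, 1] using that map's own minimum and maximum."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


pixels = [0.0, 50.0, 200.0, 400.0]  # made-up values standing in for one tiny map

scaled = scale_down(pixels)
normalized = min_max_normalize(pixels)

print(scaled)      # same length as `pixels`, values divided by the constant
print(normalized)  # same length as `pixels`, values squeezed into [0, 1]
```

Under this reading, constant scaling preserves the relative magnitudes between different maps, whereas per-map normalization discards them. In both cases the number of pixels stays the same.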

They also list a down-scale factor in the model architecture:

------------------64 GAN------------------

down-scale factor = 100

--Generator--

nz = 100

ConvTranspose2d(512, 4, 1, 0)

BatchNorm2d(512)

LeakyReLU(0.2)

ConvTranspose2d(256, 4, 2, 1)

BatchNorm2d(256)

LeakyReLU(0.2)

ConvTranspose2d(128, 4, 2, 1)

BatchNorm2d(128)

LeakyReLU(0.2)

ConvTranspose2d(64, 4, 2, 1)

BatchNorm2d(64)

LeakyReLU(0.2)

ConvTranspose2d(1, 4, 2, 1)

Clamp(>0)

Enforce Symmetry
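As a sanity check on the listing, the spatial size after each layer can be traced with the standard transposed-convolution size formula, `out = (in - 1) * stride - 2 * padding + kernel` (dilation 1, no output padding). This sketch rests on two assumptions that the listing does not state: that each `ConvTranspose2d` tuple reads as (out_channels, kernel_size, stride, padding), and that the `nz = 100` latent vector enters the first layer as a 1x1 map. The names `LAYERS`, `conv_transpose_out`, and `trace_shapes` are hypothetical helpers.

```python
# Layers copied from the listing, read as (out_channels, kernel_size, stride, padding).
# That reading is an assumption; the listing does not name the arguments.
LAYERS = [
    (512, 4, 1, 0),
    (256, 4, 2, 1),
    (128, 4, 2, 1),
    (64, 4, 2, 1),
    (1, 4, 2, 1),
]


def conv_transpose_out(size, kernel, stride, padding):
    """Output side length of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def trace_shapes(layers, start_size=1):
    """Return (channels, height, width) after each layer, starting from a 1x1 latent map (assumed)."""
    shapes = []
    size = start_size
    for out_channels, kernel, stride, padding in layers:
        size = conv_transpose_out(size, kernel, stride, padding)
        shapes.append((out_channels, size, size))
    return shapes


shapes = trace_shapes(LAYERS)
for shape in shapes:
    print(shape)
```

Under these assumptions the side length doubles at every stride-2 layer (4, 8, 16, 32, 64), so the last layer emits a single-channel 64x64 map. The BatchNorm2d, LeakyReLU, Clamp, and symmetry steps are left out because they do not change the spatial size.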

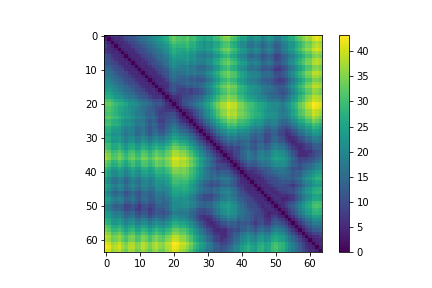

Wouldn’t a down-scale factor of 100 mean that the image goes from 64x64 to roughly 6x6? How can the generator or discriminator ever produce images that resemble the one above if they’re working from such a small image?