I want to save time by using the gradients of the last layer in place of the gradients of all layers. However, I find that calculating the gradient of the last layer takes the same time as calculating all the gradients.

The code for calculating the gradient of the last layer:

```python
# Freeze all parameters, then unfreeze only the last layer.
last_layer = list(net.children())[-1]
for param in net.parameters():
    param.requires_grad = False
for param in last_layer.parameters():
    param.requires_grad = True

for i in range(len(inputs)):
    optimizer.zero_grad()
    loss[i].backward(retain_graph=True)
    # Sum the norms of the last layer's weight gradients.
    last_grad_norm = 0.0
    for name, para in last_layer.named_parameters():
        if 'weight' in name:
            last_grad_norm += para.grad.norm().cpu().item()
    result.append(last_grad_norm)
```

The code for calculating the gradients of all layers:

```python
for i in range(len(inputs)):
    optimizer.zero_grad()
    loss[i].backward(retain_graph=True)
    # Same last-layer weight-gradient norm as in the first snippet.
    all_grad_norm = 0.0
    for name, para in last_layer.named_parameters():
        if 'weight' in name:
            all_grad_norm += para.grad.norm().cpu().item()
    result.append(all_grad_norm)
```

I want to know why it takes the same time even though I only calculate gradients for some of the layers. I would also like to know how I can get the gradients of the last layer faster. I would really appreciate any help.

If the input doesn’t require gradients, calculating the gradients for a single layer should be faster than for all layers.
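A minimal sketch of that situation, using a hypothetical toy `nn.Sequential` model (not the model from the question): every layer except the last is frozen and the input does not require gradients, so the recorded backward graph only contains the last layer. Note that autograd records the graph while the forward pass runs, which is why the sketch freezes the earlier layers before calling the model.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy model: a small "backbone" followed by a last layer.
net = nn.Sequential(
    nn.Linear(16, 32),
    nn.ReLU(),
    nn.Linear(32, 32),
    nn.ReLU(),
    nn.Linear(32, 4),  # last layer
)
last_layer = list(net.children())[-1]

# Freeze everything except the last layer BEFORE the forward pass.
for param in net.parameters():
    param.requires_grad_(False)
for param in last_layer.parameters():
    param.requires_grad_(True)

x = torch.randn(8, 16)  # the input does not require gradients
target = torch.randint(0, 4, (8,))

# The output of the frozen part does not require grad, so no graph is
# recorded for it.
feats = net[:-1](x)
print(feats.requires_grad)  # False

loss = nn.functional.cross_entropy(last_layer(feats), target)
loss.backward()

# Only the last layer received gradients.
frozen_grads = [p.grad for p in net[:-1].parameters()]
last_grads = [p.grad for p in last_layer.parameters()]
print(all(g is None for g in frozen_grads))    # True
print(all(g is not None for g in last_grads))  # True
```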

Your code doesn’t show any profiling, so I cannot comment on its correctness.

Note that if you are using a GPU, CUDA operations are asynchronous, so you would have to synchronize the code before starting and stopping the timer, or use the `torch.utils.benchmark` utility from the nightly binaries.
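A minimal sketch of both options, assuming a PyTorch version that ships `torch.utils.benchmark`. The model, input, and `step` function are hypothetical stand-ins for the real workload; the code falls back to the CPU when no GPU is present, in which case no synchronization is needed.

```python
import time

import torch
import torch.nn as nn
import torch.utils.benchmark as benchmark

device = "cuda" if torch.cuda.is_available() else "cpu"

# Hypothetical workload: forward + backward of a small model.
net = nn.Sequential(nn.Linear(256, 256), nn.ReLU(), nn.Linear(256, 10)).to(device)
x = torch.randn(64, 256, device=device)


def step():
    net.zero_grad()
    net(x).sum().backward()


def sync():
    # CUDA kernels run asynchronously; wait for them before reading the clock.
    if device == "cuda":
        torch.cuda.synchronize()


# Option 1: manual timing with explicit synchronization around the timed region.
step()  # warm-up
sync()
start = time.perf_counter()
for _ in range(100):
    step()
sync()
manual_seconds = (time.perf_counter() - start) / 100

# Option 2: benchmark.Timer performs warm-up and CUDA synchronization itself.
timer = benchmark.Timer(stmt="step()", globals={"step": step})
measurement = timer.timeit(100)
print(f"manual: {manual_seconds * 1e3:.3f} ms, benchmark: {measurement.mean * 1e3:.3f} ms")
```

Either number is the average time per `step()` call; comparing the two cases from the question this way avoids measuring only the kernel launches.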

That being said, you are currently also synchronizing your “production” code by pushing the gradient norm to the CPU and calling item() on it.
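One way to avoid a synchronization in every iteration is to keep the per-sample norms on the device as tensors and copy them to the host once at the end. This is a sketch with a hypothetical `last_layer`, hypothetical `inputs`, and a placeholder loss standing in for the ones in the question.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
device = "cuda" if torch.cuda.is_available() else "cpu"

last_layer = nn.Linear(32, 4).to(device)  # hypothetical last layer
inputs = [torch.randn(8, 32, device=device) for _ in range(5)]  # hypothetical data

norms = []  # per-sample gradient norms, kept on the device
for x in inputs:
    last_layer.zero_grad()
    last_layer(x).pow(2).mean().backward()  # placeholder loss
    total = torch.zeros((), device=device)
    for name, para in last_layer.named_parameters():
        if "weight" in name:
            total = total + para.grad.norm()  # no .cpu()/.item(), so no sync here
    norms.append(total)

# A single device-to-host copy (and thus a single synchronization) at the end.
result = torch.stack(norms).tolist()
print(result)
```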

Thanks for your reply!

I modified the code and used `time.time()` to record the time. The code is as follows:

```python
import time
import torch

# Case 1: only the last layer requires gradients.
last_layer = list(net.children())[-1]
for param in net.parameters():
    param.requires_grad = False
for param in last_layer.parameters():
    param.requires_grad = True

torch.cuda.synchronize()
start_time = time.time()
for i in range(len(inputs)):
    optimizer.zero_grad()
    loss[i].backward(retain_graph=True)
    last_grad_norm = 0.0
    for name, para in last_layer.named_parameters():
        if 'weight' in name:
            last_grad_norm += para.grad.norm().cpu().item()
    # print("last grad norm", last_grad_norm)
    result.append(last_grad_norm)
torch.cuda.synchronize()
print("the time for computing the gradient for the last layer:", time.time() - start_time)

# Case 2: all layers require gradients.
for param in net.parameters():
    param.requires_grad = True

torch.cuda.synchronize()
start_time = time.time()
for i in range(len(inputs)):
    optimizer.zero_grad()
    loss[i].backward(retain_graph=True)
    all_grad_norm = 0.0
    for name, para in last_layer.named_parameters():
        if 'weight' in name:
            all_grad_norm += para.grad.norm().cpu().item()
    result.append(all_grad_norm)
torch.cuda.synchronize()
print("the time for computing the gradient for all layers:", time.time() - start_time)
```

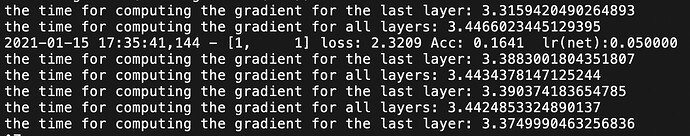

the result is as follows:

The cost time is almost similar.

Also, since I am new to PyTorch, I don’t know how to compute the gradient of the loss with respect to the input of the last block, which includes the softmax, the BN, and the last conv layer. Could you give me an example or a reference? Thanks so much!
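A minimal sketch of one way to do this with `torch.autograd.grad`, assuming the network can be split into a hypothetical `body` and `head`. The head here (conv, BN, pooling, flatten) is an assumption loosely modeled on the question, and the softmax is folded into `F.cross_entropy`. Running the body under `torch.no_grad()` means nothing before the head is recorded, so the backward pass only traverses the head.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical split of a network into a body and a head.
body = nn.Sequential(nn.Conv2d(3, 8, 3, padding=1), nn.ReLU())
head = nn.Sequential(
    nn.Conv2d(8, 10, 1),
    nn.BatchNorm2d(10),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
)

x = torch.randn(4, 3, 16, 16)
target = torch.randint(0, 10, (4,))

# Run the body without building an autograd graph.
with torch.no_grad():
    feats = body(x)
feats.requires_grad_(True)  # make the head's input a leaf that tracks gradients

logits = head(feats)
loss = F.cross_entropy(logits, target)  # applies log-softmax internally

# Gradient of the loss w.r.t. the head's input; torch.autograd.grad returns
# the gradients directly and does not populate .grad on any parameter.
(feat_grad,) = torch.autograd.grad(loss, feats)
print(feat_grad.shape)  # torch.Size([4, 8, 16, 16])
```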