When I run the following code

a = torch.normal(0,1,(2,5000))

b = torch.normal(0,1,(5000,17))

(a@b)[:, :5] - (a@b[:,:5])

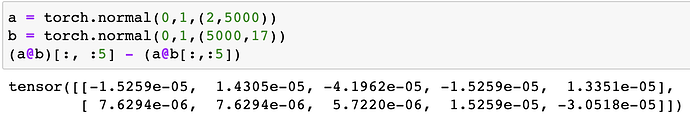

I get the output

In other words, multiplying two matrices and taking a smaller portion of the result isn’t the same as multiplying the first matrix with the corresponding smaller portion of the second matrix, when mathematically it should be the same.

I noticed that for the above example, the errors persist with subsetting different numbers of columns (i.e. other than 5) until 13, at which point the errors disappear.

This is problematic for me because I am trying to implement a version of attention that performs scatter operations to avoid needing padding all input sequences to the same length. Instead of a batched matrix multiply on each individual sequence, I perform a matrix multiply on a matrix containing the sequences concatenated together. But because of this error, I cannot reproduce pytorch’s attention to within the tolerance I desire, (1e-4 max discrepancy between mine and pytorch attention’s outputs with same random inputs/parameters)