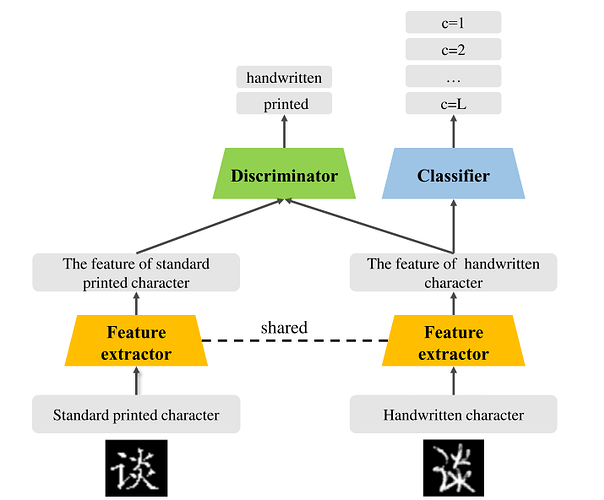

I want to reproduce the model from this paper.

When I train the Discriminator, I find that the accuracy of the Feature extractor and the Classifier declines because the BatchNorm parameters change, even though I have set their `.requires_grad` to `False`. The optimizer only updates the parameters of D.
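To check which tensors of a BatchNorm layer actually change, here is a minimal sketch that uses a stand-alone `nn.BatchNorm2d` (a hypothetical stand-in for one BatchNorm layer of the Feature extractor, not the paper's model). In PyTorch, `weight` and `bias` are parameters, while `running_mean`, `running_var` and `num_batches_tracked` are registered as buffers. `requires_grad` and the optimizer therefore do not cover the buffers, and a forward pass in training mode updates them.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for one BatchNorm layer of the Feature extractor (3 channels)
bn = nn.BatchNorm2d(3)
for p in bn.parameters():
    p.requires_grad = False  # freeze weight and bias, as described in the post

print([name for name, _ in bn.named_parameters()])  # ['weight', 'bias']
print([name for name, _ in bn.named_buffers()])     # ['running_mean', 'running_var', 'num_batches_tracked']

weight_before = bn.weight.clone()
mean_before = bn.running_mean.clone()

bn.train()  # default mode: normalize with batch stats and update running stats
with torch.no_grad():  # no_grad does not stop the running-stat update
    bn(torch.randn(8, 3, 4, 4) + 5.0)  # shifted input so the batch mean clearly differs from 0

print(torch.equal(bn.weight, weight_before))      # True: parameters untouched
print(torch.equal(bn.running_mean, mean_before))  # False: buffer updated by the forward pass
```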

I found that if I use `model.eval()` to put the BatchNorm2d layers of the Feature extractor and the Classifier into eval mode while training the Discriminator, the accuracy does not decline. What is the mistake in my code, or in my understanding of BatchNorm?
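The `model.eval()` workaround can also be applied to the BatchNorm layers only. A sketch, assuming the Feature extractor is an ordinary `nn.Module`; the small `nn.Sequential` and the helper name `set_bn_eval` are hypothetical. In eval mode a BatchNorm layer normalizes with its stored running statistics and stops updating them, while other layers such as Dropout keep their training behavior.

```python
import torch
import torch.nn as nn

BN_TYPES = (nn.BatchNorm1d, nn.BatchNorm2d, nn.BatchNorm3d)

def set_bn_eval(module: nn.Module) -> None:
    """Hypothetical helper: switch every BatchNorm layer inside `module` to eval mode."""
    for m in module.modules():
        if isinstance(m, BN_TYPES):
            m.eval()

# Hypothetical stand-in for the Feature extractor
feature_extractor = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.BatchNorm2d(8),
    nn.ReLU(),
    nn.Dropout(0.5),
)
feature_extractor.train()
set_bn_eval(feature_extractor)

bn = feature_extractor[1]
mean_before = bn.running_mean.clone()
with torch.no_grad():
    feature_extractor(torch.randn(4, 3, 8, 8))

print(bn.training)                                # False
print(feature_extractor[3].training)              # True: Dropout still in training mode
print(torch.equal(bn.running_mean, mean_before))  # True: running stats not updated
```

Calling `.train()` on the parent module later switches the BatchNorm layers back to training mode, so a helper like this would need to be re-applied after every `.train()` call.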

```python
import torch

d_optimizer = torch.optim.SGD(D.parameters(), lr=LR, momentum=0.9, weight_decay=0.0005)

####### for discriminator ###################
D.zero_grad()

# pr batch, label 1
pr_fm = CNN(pr)
p_label = torch.full((batch_size,), 1.0, device=device)
pr_d = D(pr_fm.detach())  # detach: no gradient flows back into CNN
pr_d = pr_d.view(pr_d.size(0)).float()
D_prloss = D_loss_func(pr_d, p_label)
D_prloss.backward()
D_PR_1 = pr_d.mean().item()

# hw batch, label 0
hw_fm = CNN(hw)
h_label = torch.full((batch_size,), 0.0, device=device)
hw_d = D(hw_fm.detach())
hw_d = hw_d.view(hw_d.size(0)).float()
D_hwloss = D_loss_func(hw_d, h_label)
D_hwloss.backward()
D_HW_1 = hw_d.mean().item()

D_loss = D_prloss + D_hwloss  # total loss for logging; gradients were already accumulated by the backward() calls
d_optimizer.step()            # d_optimizer only holds D's parameters
```
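For comparison, here is a self-contained toy version of the Discriminator step above with the feature extractor switched to eval mode while D is trained. Everything in it is hypothetical: `CNN` and `D` are tiny stand-in networks, `D_loss_func` is assumed to be `nn.BCELoss` on a sigmoid output (the post does not show it), and `pr`/`hw` are random tensors. The final check confirms that the feature extractor's `state_dict` (parameters and BatchNorm buffers) is unchanged after the step.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
device = torch.device("cpu")
batch_size = 4

# Hypothetical stand-ins for the post's networks
CNN = nn.Sequential(nn.Conv2d(3, 8, 3, padding=1), nn.BatchNorm2d(8), nn.ReLU(),
                    nn.AdaptiveAvgPool2d(1), nn.Flatten()).to(device)
D = nn.Sequential(nn.Linear(8, 1), nn.Sigmoid()).to(device)
D_loss_func = nn.BCELoss()  # assumed loss
d_optimizer = torch.optim.SGD(D.parameters(), lr=0.01, momentum=0.9, weight_decay=0.0005)

pr = torch.randn(batch_size, 3, 16, 16, device=device)
hw = torch.randn(batch_size, 3, 16, 16, device=device) + 2.0

cnn_state_before = {k: v.clone() for k, v in CNN.state_dict().items()}

CNN.eval()  # BatchNorm uses its running stats and does not update them
D.train()
D.zero_grad()
with torch.no_grad():  # plays the role of .detach(): no graph is built through CNN
    pr_fm = CNN(pr)
    hw_fm = CNN(hw)

pr_d = D(pr_fm).view(-1)
hw_d = D(hw_fm).view(-1)
D_prloss = D_loss_func(pr_d, torch.full((batch_size,), 1.0, device=device))
D_hwloss = D_loss_func(hw_d, torch.full((batch_size,), 0.0, device=device))
D_loss = D_prloss + D_hwloss
D_loss.backward()
d_optimizer.step()
CNN.train()  # restore training mode before the Feature extractor/Classifier step

unchanged = all(torch.equal(v, cnn_state_before[k]) for k, v in CNN.state_dict().items())
print(unchanged)  # True
```

Whether BatchNorm should stay in eval mode during the D step depends on the paper's training scheme; the sketch only shows the mechanics.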