Based on the notebook, it seems you are working on an assignment, but you haven't provided much information here.

If you get stuck at a specific PyTorch-related problem, feel free to add some information to your post.

I’m working on a project that is part of my Udacity ND. In step 3 of the project, I have to build a NN from scratch and achieve a test accuracy of more than 10%, but I am getting 0%. I am also seeing that my validation loss is increasing whereas the test loss is decreasing.

Then, in step 4, I have to train my model using transfer learning and achieve a test accuracy of more than 60%, but I could only reach 53% after about 20 epochs. I think training for more epochs or tuning the learning rate could help, but it would be helpful if you could suggest something here as well.

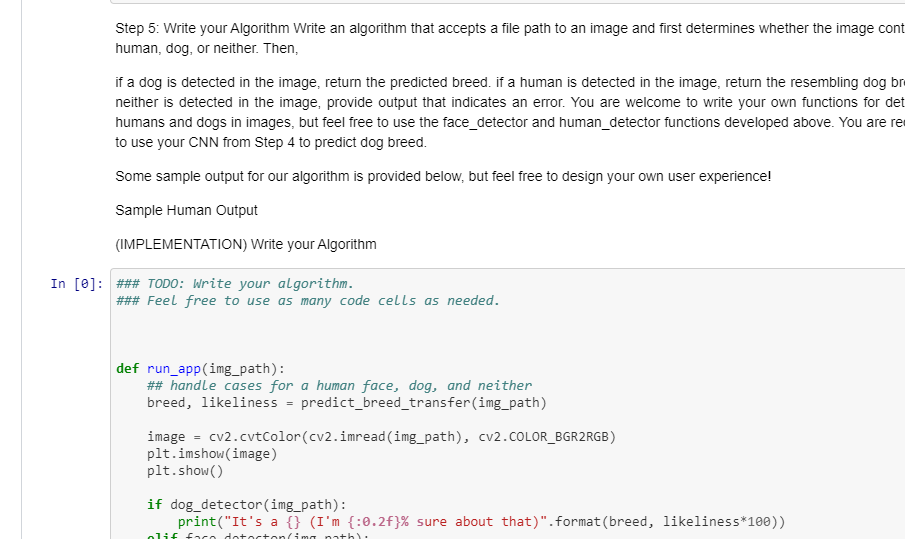

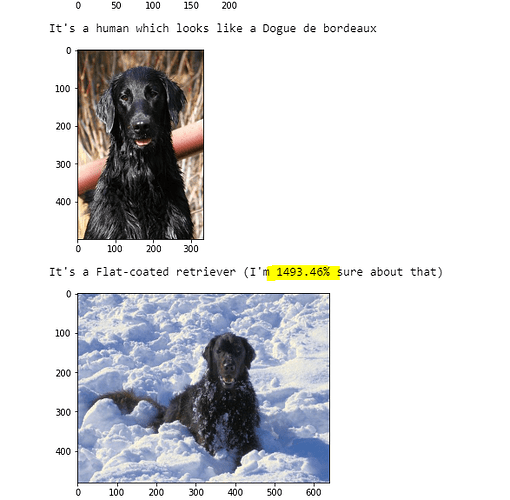

Lastly, in step 6, I am getting abnormal percentages in the output section.

I would recommend scaling down the problem and verifying each step of your calculations.

For example, if your code reports a 0% accuracy, check some output samples and compare them to the ground truth labels. If some are right, your accuracy calculation is likely wrong.
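
As a rough sketch of such a check, assuming your model returns one row of logits per sample: the tensors below are made-up values (not taken from your notebook), and names such as `logits` and `targets` are placeholders for your own model output and labels.

```python
import torch

# Hypothetical model output for 4 samples and 3 classes (made-up values).
logits = torch.tensor([
    [2.0, 0.5, 0.1],
    [0.2, 1.5, 0.3],
    [0.1, 0.2, 3.0],
    [1.0, 0.9, 0.8],
])
# Hypothetical ground truth labels for the same 4 samples.
targets = torch.tensor([0, 1, 2, 1])

# Predicted class = index of the largest logit in each row.
preds = logits.argmax(dim=1)

# Print predictions next to the labels to compare them by eye.
for p, t in zip(preds.tolist(), targets.tolist()):
    print(f"pred={p} target={t} match={p == t}")

# Count matches and divide by the number of samples (not the number of batches).
correct = (preds == targets).sum().item()
accuracy = 100.0 * correct / targets.size(0)
print(f"accuracy: {accuracy:.1f}%")
```

If the printed pairs show matches but your own code still reports 0%, the usual suspects are the way `correct` is accumulated or the value you divide by.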

The same applies to your out-of-bounds probabilities: print each output separately, calculate the expected result manually, and compare it to the result of your formula.
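
A small sketch of that comparison, assuming the percentages are supposed to come from a softmax over raw logits (I don't know what your notebook actually does, so treat this as a guess): the logits are made-up values and `probs`, `manual`, and `percent` are placeholder names.

```python
import torch
import torch.nn.functional as F

# Hypothetical raw model output (logits) for a single sample with 3 classes.
logits = torch.tensor([[2.0, 1.0, 0.1]])

# Probabilities via the built-in softmax over the class dimension.
probs = F.softmax(logits, dim=1)

# The same calculation done by hand: exp(x_i) / sum_j exp(x_j).
exp = torch.exp(logits)
manual = exp / exp.sum(dim=1, keepdim=True)

# Convert to percentages; each value should lie in [0, 100].
percent = 100.0 * probs

print("softmax:", probs)
print("manual: ", manual)
print("sum:    ", probs.sum(dim=1))
print("percent:", percent)
```

If your values fall outside this range, you might be scaling raw logits or log-probabilities (e.g. the output of `log_softmax`) instead of probabilities.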

I’m hesitant to help much further, as I believe you would learn the most by digging into the code and debugging it.