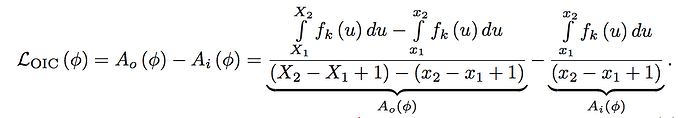

I want to implement a boundary regression model that uses the loss function defined above to update its parameters. The model outputs regression values, and x1 and x2 are computed from those values. Since x1 and x2 are used as indices into a mask to implement this loss function, my question is: if I define a loss layer that computes the loss value and then simply call loss.backward(), will the model be updated as expected?

Hi,

Unfortunately, the gradient of an indexing operation with respect to the indices is not informative, as it is 0 almost everywhere. So you should not rely on it to learn your boundaries.
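To make this concrete, here is a minimal sketch. The loss from the image is not reproduced here, so `(mask * signal).sum()` is only a stand-in, and `signal`, `positions`, and `temperature` are made-up names for illustration. The first half shows that a mask built from integer indices carries no gradient back to x1 and x2. The second half shows one possible workaround that is not part of the original question: a sigmoid-based soft mask that stays differentiable in x1 and x2.

```python
import torch

# Hypothetical data: a 1-D signal of length 10 and its positions 0..9.
signal = torch.arange(10, dtype=torch.float32)
positions = torch.arange(10, dtype=torch.float32)

# Stand-ins for the boundaries computed from the model's regression outputs.
x1 = torch.tensor(2.3, requires_grad=True)
x2 = torch.tensor(6.7, requires_grad=True)

# Hard mask: turning x1 and x2 into integer indices leaves the autograd graph.
i1 = int(x1.round().item())
i2 = int(x2.round().item())
hard_mask = torch.zeros(10)
hard_mask[i1:i2] = 1.0
hard_loss = (hard_mask * signal).sum()
# No path back to x1 or x2, so hard_loss.backward() would raise an error.
hard_has_grad = hard_loss.requires_grad

# Soft mask (an assumed alternative): close to 1 between x1 and x2,
# close to 0 outside, and smooth in both boundaries.
temperature = 1.0  # assumed sharpness; smaller values give a harder mask
soft_mask = torch.sigmoid((positions - x1) / temperature) * torch.sigmoid(
    (x2 - positions) / temperature
)
soft_loss = (soft_mask * signal).sum()
soft_loss.backward()

print("hard loss requires grad:", hard_has_grad)
print("x1.grad:", x1.grad.item(), "x2.grad:", x2.grad.item())
```

With the soft mask, both x1.grad and x2.grad are populated, so gradients can flow further back into whatever produced x1 and x2. Whether this relaxation suits your actual loss depends on how the mask is used in it.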