Hello,

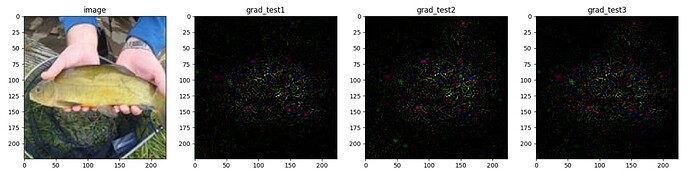

To implement a variant of the fast gradient sign attack, I am trying to compute the gradient of a softmax loss with respect to an image. The problem is that the image is normalized before it enters the model, and I want the gradient with respect to the unnormalized (untouched) image.

I think the theoretical gradient of the normalization transform

    torchvision.transforms.Normalize(mean=mean, std=std)

can be obtained by applying the following transform to the gradient computed with respect to the normalized image:

    torchvision.transforms.Normalize(mean=0, std=std)
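
For reference, the chain rule behind this idea can be written out as follows. The symbols are notation introduced here, not taken from the code: $x$ is the unnormalized image, $z$ the normalized one, $\mu_c$ and $\sigma_c$ the per-channel mean and std, and $\mathcal{L}$ the loss.

$$
z_{c,i,j} = \frac{x_{c,i,j} - \mu_c}{\sigma_c}
\quad\Longrightarrow\quad
\frac{\partial \mathcal{L}}{\partial x_{c,i,j}}
= \frac{\partial \mathcal{L}}{\partial z_{c,i,j}} \cdot \frac{\partial z_{c,i,j}}{\partial x_{c,i,j}}
= \frac{1}{\sigma_c}\,\frac{\partial \mathcal{L}}{\partial z_{c,i,j}}
$$

Applying `Normalize(mean=0, std=std)` to $\partial \mathcal{L} / \partial z$ performs this division by $\sigma_c$, since subtracting a zero mean changes nothing.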

I computed the gradient in three different ways, but I get three different results. What am I doing wrong?

The model I use is an AlexNet adapted by transfer learning:

    import PIL.Image
    import torch
    import torchvision

    n_classes = 10

    # AlexNet with its last classifier layer replaced to output n_classes scores
    model = torchvision.models.alexnet(pretrained=False)
    model.classifier[6] = torch.nn.Linear(model.classifier[6].in_features, n_classes)
    model.load_state_dict(torch.load(path_model, map_location=torch.device('cpu')))

    image = PIL.Image.open("U:\\PROJET_3A\\imagenette2-160\\train\\n01440764\\n01440764_17174.JPEG")
    transform = torchvision.transforms.Compose([
        torchvision.transforms.Resize(256),
        torchvision.transforms.CenterCrop(224),
        torchvision.transforms.ToTensor(),
    ])
    image = transform(image)
    image = image[None, :]  # add a batch dimension

    mean = [0.485, 0.456, 0.406]
    std = [0.229, 0.224, 0.225]
    normalize = torchvision.transforms.Normalize(mean=mean, std=std)
    # Divides by std only (mean=0); meant to apply the derivative of normalize
    grad_of_normalization = torchvision.transforms.Normalize(mean=0, std=std)

    target_class = 1

    # Test 1: backpropagate through normalize down to the unnormalized image
    test1 = image.clone().detach()
    test1.requires_grad = True
    vector_scores = model(normalize(test1))
    loss_target = -torch.nn.functional.log_softmax(vector_scores, dim=1)[0, target_class]
    loss_target.backward()
    grad_test1 = test1.grad.clone().detach()

    # Test 2: gradient w.r.t. the normalized image (wrapped in a Variable),
    # then divided by std. Variable is deprecated but still returns a tensor.
    test2 = torch.autograd.Variable(normalize(image.clone().detach()), requires_grad=True)
    vector_scores = model(test2)
    loss_target = -torch.nn.functional.log_softmax(vector_scores, dim=1)[0, target_class]
    loss_target.backward()
    grad_test2 = test2.grad.clone().detach()
    grad_test2 = grad_of_normalization(grad_test2)

    # Test 3: same as test 2, but using requires_grad on a detached tensor
    test3 = normalize(image).clone().detach()
    test3.requires_grad = True
    vector_scores = model(test3)
    loss_target = -torch.nn.functional.log_softmax(vector_scores, dim=1)[0, target_class]
    loss_target.backward()
    grad_test3 = test3.grad.clone().detach()
    grad_test3 = grad_of_normalization(grad_test3)
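
As a sanity check of the chain-rule step on its own, here is a minimal sketch on a hypothetical toy network. `toy_model`, the random 8x8 input, and the hand-written `normalize` and `target_loss` helpers are stand-ins introduced for illustration, not the AlexNet setup above. It assumes a deterministic forward pass: a network containing dropout layers that is left in training mode would return a different gradient on each call.

```python
import torch

torch.manual_seed(0)

# Hypothetical stand-in for the real network: small and free of dropout,
# so that repeated forward passes are deterministic.
toy_model = torch.nn.Sequential(
    torch.nn.Conv2d(3, 4, kernel_size=3, padding=1),
    torch.nn.ReLU(),
    torch.nn.Flatten(),
    torch.nn.Linear(4 * 8 * 8, 10),
)
toy_model.eval()

mean = torch.tensor([0.485, 0.456, 0.406]).view(1, 3, 1, 1)
std = torch.tensor([0.229, 0.224, 0.225]).view(1, 3, 1, 1)
target_class = 1


def normalize(x):
    # Same arithmetic as a per-channel normalization, written by hand.
    return (x - mean) / std


def target_loss(scores):
    # Negative log-probability of the target class for the first batch item.
    return -torch.nn.functional.log_softmax(scores, dim=1)[0, target_class]


image = torch.rand(1, 3, 8, 8)  # random stand-in for a ToTensor() image

# Route A: differentiate through the normalization.
x = image.clone().requires_grad_(True)
target_loss(toy_model(normalize(x))).backward()
grad_direct = x.grad.clone()

# Route B: differentiate w.r.t. the normalized input, then divide by std.
z = normalize(image).detach().requires_grad_(True)
target_loss(toy_model(z)).backward()
grad_chain = z.grad / std

# Report whether the two routes agree up to floating-point tolerance.
print(torch.allclose(grad_direct, grad_chain, atol=1e-6))
```

If the two routes agree on a deterministic toy model like this one, then a disagreement on the real network would point to something other than the division by std, such as randomness in the forward pass.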