I used PyTorch to create a 3D CNN with two convolutional layers:

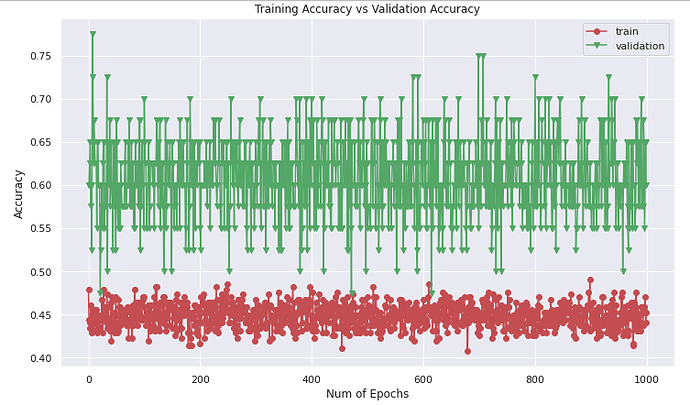

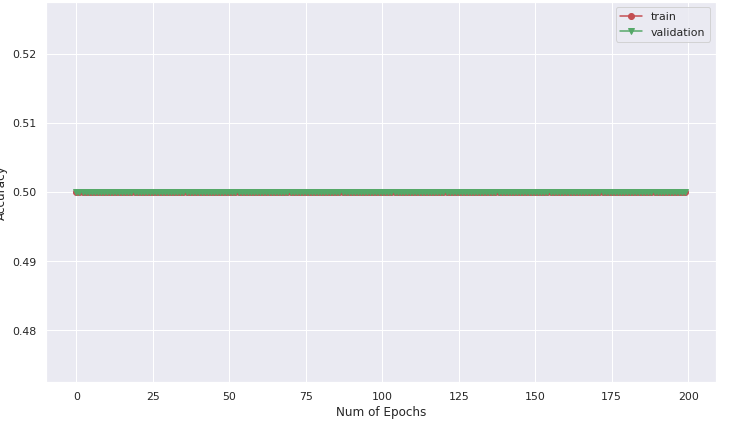

I trained for 1000 epochs, as shown in the curves, but the accuracy and loss values stay almost constant.

Can you explain why, please? Here is my code:

```python
import time

import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F

start_time = time.time()  # assumed: timer started before building the model
num_classes = 2  # assumed: binary task (fc1 also has 2 outputs); fc2 is never used


class CNNModel(nn.Module):
    def __init__(self):
        super(CNNModel, self).__init__()  # inheritance
        self.conv_layer1 = self._conv_layer_set(3, 32)
        self.conv_layer2 = self._conv_layer_set(32, 64)
        self.fc1 = nn.Linear(64 * 28 * 28 * 28, 2)
        self.fc2 = nn.Linear(1404928, num_classes)
        self.relu = nn.LeakyReLU()
        self.batch = nn.BatchNorm1d(2)
        self.drop = nn.Dropout(p=0.15, inplace=True)

    def _conv_layer_set(self, in_c, out_c):
        conv_layer = nn.Sequential(
            nn.Conv3d(in_c, out_c, kernel_size=(3, 3, 3), padding=0),
            nn.LeakyReLU(),
            nn.MaxPool3d((2, 2, 2)),
        )
        return conv_layer

    def forward(self, x):
        # Set 1
        out = self.conv_layer1(x)
        out = self.conv_layer2(out)
        out = out.view(out.size(0), -1)
        out = self.fc1(out)
        out = self.relu(out)
        out = self.batch(out)
        out = self.drop(out)
        out = F.softmax(out, dim=1)
        return out
```
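As a sanity check on `nn.Linear(64*28*28*28, 2)`: with `kernel_size=3`, `padding=0` and stride 1, each `Conv3d` shrinks every side by 2, and `MaxPool3d(2)` halves it, rounding down. A small helper (the name `conv_pool_side` is hypothetical), assuming 120×120×120 inputs as in the `view(2, 3, 120, 120, 120)` call, confirms the flattened size:

```python
def conv_pool_side(side: int, kernel: int = 3, padding: int = 0, pool: int = 2) -> int:
    """Side length after a stride-1 Conv3d followed by MaxPool3d(pool)."""
    after_conv = side + 2 * padding - kernel + 1
    return after_conv // pool  # MaxPool3d floors by default (ceil_mode=False)


side = 120
for _ in range(2):  # conv_layer1, then conv_layer2
    side = conv_pool_side(side)

flat_features = 64 * side ** 3  # 64 channels out of conv_layer2
print(side, flat_features)  # 28 1404928
```

So the input size of `fc1` is consistent (120 → 118 → 59 → 57 → 28). `fc2` has the same input size but is never called in `forward`.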

```python
# Create CNN
model = CNNModel()
model.cuda()  # to use the GPU
print(model)

for param in model.parameters():
    param.requires_grad = True

# Cross Entropy Loss
error = nn.CrossEntropyLoss()

# SGD Optimizer
learning_rate = 0.001
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)
```
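`nn.CrossEntropyLoss` applies `log_softmax` internally, so it expects raw, unnormalized scores (logits). `CNNModel.forward` applies `F.softmax` first, which squeezes the inputs into [0, 1]; for two classes the loss then cannot fall below log(1 + e^-1) ≈ 0.313, even for a perfectly confident and correct prediction, and the gradients become small. A minimal check with hand-picked scores (not produced by the model):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

criterion = nn.CrossEntropyLoss()
target = torch.tensor([0])  # the correct class is 0

# Hand-picked raw scores that are very confident and correct.
logits = torch.tensor([[10.0, -10.0]])
loss_from_logits = criterion(logits, target).item()

# The same scores after F.softmax, as CNNModel.forward does before the loss.
probs = F.softmax(logits, dim=1)
loss_from_probs = criterion(probs, target).item()

print(loss_from_logits)  # close to 0
print(loss_from_probs)   # about 0.313 = log(1 + e^-1), the floor for 2 classes
```

In practice this suggests ending `forward` at the final linear layer and returning its output, applying `F.softmax` only when probabilities are needed for reporting. The `requires_grad = True` loop is harmless but unnecessary, since parameters of `nn.Module` layers already require gradients.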

```python
# accuracy function
def accuracyCalc(predicted, targets):
    correct = 0
    p = predicted.tolist()
    t = targets.flatten().tolist()
    for i in range(len(p)):
        if p[i] == t[i]:
            correct += 1
    accuracy = 100 * correct / targets.shape[0]
    return accuracy
```
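The Python loop in `accuracyCalc` works, but the same count can be done directly on tensors. A sketch (the name `accuracy_pct` is hypothetical) that takes the model outputs and the labels, including float labels like those produced by `torch.Tensor(targets)`:

```python
import torch


def accuracy_pct(outputs: torch.Tensor, targets: torch.Tensor) -> float:
    """Percentage of rows whose argmax matches the label."""
    predicted = outputs.argmax(dim=1)
    labels = targets.view(-1).long().to(predicted.device)  # (N,) or (N, 1) -> (N,)
    return 100.0 * (predicted == labels).sum().item() / labels.numel()


outputs = torch.tensor([[2.0, 1.0], [0.0, 3.0], [1.0, 0.0]])
targets = torch.tensor([0.0, 1.0, 1.0])
print(accuracy_pct(outputs, targets))  # 2 of 3 correct
```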

```python
print(" build model --- %s seconds ---" % (time.time() - start_time))

# training
print('data preparation ')
training_data = np.load("/content/drive/My Drive/brats6G/train/training_data.npy", allow_pickle=True)
training_data = training_data[:2]
targets = np.load("/content/drive/My Drive/brats6G/train/targets.npy", allow_pickle=True)
targets = targets[:2]

from sklearn.utils import shuffle

training_data, targets = shuffle(training_data, targets)

# changechannel, resize3Dimages and channel1to3 are helper functions defined elsewhere (not shown)
training_data = changechannel(training_data, 1, 5)  # channel ordering: first channel ==> last channel
training_data = resize3Dimages(training_data)  # resize images
training_data = channel1to3(training_data)  # 1 channel to 3 channels ===> RGB
training_data = changechannel(training_data, 4, 1)  # last to first
```
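The training loop slices `training_data` in steps of 4 but then reshapes each slice with a hard-coded `view(2, 3, 120, 120, 120)`, which only works while exactly two volumes are loaded. `torch.utils.data.TensorDataset` and `DataLoader` can handle batching instead, including a smaller last batch. A sketch with small random arrays standing in for the preprocessed data (the 8×8×8 volume size, 10 samples and batch size 4 are assumptions to keep it light):

```python
import numpy as np
import torch
from torch.utils.data import DataLoader, TensorDataset

# Stand-ins for the preprocessed arrays: (N, 3, D, H, W) volumes and N labels.
rng = np.random.default_rng(0)
training_data = rng.random((10, 3, 8, 8, 8), dtype=np.float32)
targets = rng.integers(0, 2, size=10)

dataset = TensorDataset(
    torch.from_numpy(training_data).float(),
    torch.from_numpy(targets).long(),  # CrossEntropyLoss expects int64 class indices
)
loader = DataLoader(dataset, batch_size=4, shuffle=True)

batch_sizes = []
for xb, yb in loader:
    # xb: (batch, 3, 8, 8, 8), yb: (batch,); call xb.cuda() / yb.cuda() to use the GPU
    batch_sizes.append(xb.shape[0])
print(batch_sizes)  # [4, 4, 2]
```

Because each batch keeps its own leading dimension, no `view` with a fixed batch size is needed.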

```python
# Definition of hyperparameters
num_epochs = 5
loss_list_train = []
accuracy_list_train = []

for epoch in range(num_epochs):
    outputs = []
    outputs = torch.tensor(outputs).cuda()

    for fold in range(0, len(training_data), 4):
        xtrain = training_data[fold : fold + 4]
        xtrain = torch.tensor(xtrain).float().cuda()
        xtrain = xtrain.view(2, 3, 120, 120, 120)

        # Clear gradients
        optimizer.zero_grad()

        # Forward propagation
        v = model(xtrain)
        outputs = torch.cat((outputs, v.detach()), dim=0)

    targets = torch.Tensor(targets)
    labels = targets.cuda()
    outputs = torch.tensor(outputs, requires_grad=True)
    _, predicted = torch.max(outputs, 1)
    accuracy = accuracyCalc(predicted, targets)
    labels = labels.long()
    labels = labels.view(-1)
    loss = nn.CrossEntropyLoss()
    loss = loss(outputs, labels)

    # Calculating gradients
    loss.backward()

    # Update parameters
    optimizer.step()

    loss_list_train.append(loss.item())  # loss values
    accuracy_list_train.append(accuracy / 100)
    np.save('/content/drive/My Drive/brats6G/accuracy_list_train.npy', np.array(accuracy_list_train))
    np.save('/content/drive/My Drive/brats6G/loss_list_train.npy', np.array(loss_list_train))
    print('Iteration: {}/{} Loss: {} Accuracy: {} %'.format(epoch + 1, num_epochs, loss.item(), accuracy))

print('Model training : Finished')
```
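The most likely reason the curves stay flat is that the loss is disconnected from the model. `v.detach()` followed by `torch.tensor(outputs, requires_grad=True)` produces a new leaf tensor with no history, so `loss.backward()` only computes a gradient for that tensor and never reaches the convolution or linear weights; `optimizer.step()` then has nothing to apply, and only dropout and batch-norm noise makes the values wobble. The sketch below reproduces this on a toy `nn.Linear` model (a stand-in, not the 3D CNN; the function names are hypothetical) and then shows a loop that keeps the graph intact and feeds raw logits to `nn.CrossEntropyLoss`:

```python
import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()


def grad_after_rewrap():
    """Mimic the question's pattern: detach the output, then re-wrap it."""
    torch.manual_seed(0)
    model = nn.Linear(4, 2)  # toy stand-in for CNNModel
    x = torch.randn(8, 4)
    y = torch.randint(0, 2, (8,))
    out = model(x).detach()
    out = torch.tensor(out, requires_grad=True)  # new leaf tensor, no link to model
    criterion(out, y).backward()
    return model.weight.grad  # stays None: backward() never reached the model


def train_connected(steps=50):
    """Same toy setup, but the loss is computed directly from the model output."""
    torch.manual_seed(0)
    model = nn.Linear(4, 2)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    x = torch.randn(64, 4)
    y = (x[:, 0] > 0).long()  # a learnable toy label
    losses = []
    for _ in range(steps):
        optimizer.zero_grad()
        logits = model(x)  # raw logits: no softmax, no detach, no re-wrap
        loss = criterion(logits, y)
        loss.backward()  # gradients flow into model.weight and model.bias
        optimizer.step()
        losses.append(loss.item())
    return losses


print(grad_after_rewrap())  # None
losses = train_connected()
print(losses[0], losses[-1])  # the loss goes down
```

Applied to the question's loop, this would mean computing the loss from `v` itself (per batch, calling `backward()` and `step()` inside the `fold` loop), dropping `torch.tensor(outputs, requires_grad=True)`, and returning logits from `forward`. The `LeakyReLU`, `BatchNorm1d(2)` and `Dropout` after `fc1` also act directly on the two class scores; such layers usually sit before the final linear layer rather than after it.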