Hello everyone. I am working on 3D reconstruction from a single image. I have trained my model, but it is not working. Because there is so little guidance available on 3D, I can't figure out the error. Please help me if there is some error in my approach to training.

First, let me explain what I am following.

1. I am using a synthetic dataset (I am uploading a mini dataset with one type of object per class).

Dataset:

https://drive.google.com/open?id=1AMjKQUujUlNSRRqnoKsJSteNed9mTEiI

2. I have taken 4 classes (objects) with 100 different types of objects per class.

- Each object has 24 images, and all 24 images share one binvox file.

3. So I have associated each image with its 3D model separately.

For example, take the chair class. Consider one type of chair, which has 24 images and one 3D model.

So I associate each of the 24 images with that 3D model.

4. Now I train on them with a batch size of 24, without shuffling.

5. The learning rate and loss follow your paper.

6. After training for 50 epochs, my average loss drops from 11.92 to 4.76.

When I check after training, my model produces the same output for all the classes.

7. I have also used batch normalisation with Xavier initialisation.

8. At test time, I take the argmax (which returns the indices of the max value) along the depth or channel dimension (dim=1).

My input shape to the model: [400,24,128,128,3]

(Here 400 means 4 classes * 100 different objects per class.)

The model output is something like [24,2,32,32,32]

After argmax: [24,32,32,32]
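For reference, the argmax step described above can be sketched as follows. Random values stand in for the model output, so only the shapes are meaningful, and treating channel 1 as "occupied" is an assumption rather than something stated in the post.

```python
import torch

# Random values stand in for the network output; only the shapes matter here.
batch, n_classes, side = 24, 2, 32
logits = torch.randn(batch, n_classes, side, side, side)

# argmax over dim=1 collapses the two per-voxel scores into a 0/1 label.
voxels = logits.argmax(dim=1)

print(logits.shape)  # torch.Size([24, 2, 32, 32, 32])
print(voxels.shape)  # torch.Size([24, 32, 32, 32])

# Fraction of voxels labelled 1 per sample (assumption: label 1 = occupied).
occupancy = voxels.float().mean(dim=(1, 2, 3))
print(occupancy)
```

Printing the per-sample occupancy for inputs from different classes is one quick way to confirm whether the predicted grids really are identical.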

This is all I have done.

Please correct me if I have done something wrong in preparing the dataset (or if I need to extend it with more images or augmentation), if there is a different way to train these types of models, or if the argmax step is wrong.

Here is the model and training code:
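To make the dataset preparation concrete, here is a minimal sketch of the pairing and batching described in steps 3 and 4. The directory layout, file names, and all class names except "chair" are hypothetical. It only shows what each batch contains with and without shuffling; it does not establish whether shuffling is the cause of the problem.

```python
import random

# Hypothetical layout: 4 classes x 100 objects x 24 views, one binvox per object.
classes = ["chair", "class_b", "class_c", "class_d"]  # only "chair" is named in the post
OBJECTS_PER_CLASS = 100
VIEWS_PER_OBJECT = 24
BATCH_SIZE = 24

def build_pairs():
    """Return one (image_path, voxel_path) pair per rendered view."""
    pairs = []
    for cls in classes:
        for obj in range(OBJECTS_PER_CLASS):
            voxel_path = f"{cls}/{obj:03d}/model.binvox"  # hypothetical file name
            for view in range(VIEWS_PER_OBJECT):
                pairs.append((f"{cls}/{obj:03d}/{view:02d}.png", voxel_path))
    return pairs

def make_batches(pairs, batch_size, shuffle, seed=0):
    """Split the pairs into consecutive batches, optionally shuffling first."""
    pairs = list(pairs)
    if shuffle:
        random.Random(seed).shuffle(pairs)
    return [pairs[i:i + batch_size] for i in range(0, len(pairs), batch_size)]

pairs = build_pairs()
ordered = make_batches(pairs, BATCH_SIZE, shuffle=False)
shuffled = make_batches(pairs, BATCH_SIZE, shuffle=True)

# Without shuffling, every batch holds the 24 views of a single object,
# so all 24 targets in that batch are the same voxel grid.
print(len(pairs), len(ordered))
print(len({voxel for _, voxel in ordered[0]}))
print(len({voxel for _, voxel in shuffled[0]}))
```

With a batch size equal to the number of views per object and no shuffling, each batch sees exactly one target shape, which is worth keeping in mind when batch normalisation is used.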

n_epochs = 10
#model=model.cuda()
model.train()
train_loss = 0.0
for epoch in range(1, n_epochs+1):
    print("number of epoch", epoch)
    # reports the loss accumulated over the previous epoch (0.0 on the first pass)
    print("ALL ABOUT LOSS(training)--------", (train_loss/(500)), "----------\n")
    train_loss = 0.0
    model.train()
    for i in range(len(arr)):
        data = arr[i].cuda()
        tar = arr_3d[i].cuda()
        tar = tar.long()
        optimizer.zero_grad()
        output = model(data)
        loss = criterion(output, tar)
        loss.backward()
        # perform a single optimization step (parameter update)
        optimizer.step()
        # update training loss
        train_loss += loss.item()*data.size(0)
        # step the learning-rate scheduler every 100 batches
        if i % 100 == 0 and i != 0:
            scheduler.step()
            print(scheduler.get_lr())
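The loop above accumulates `loss.item()*data.size(0)` and then divides by a fixed 500. A minimal sketch of a sample-weighted epoch average is shown below; it assumes the criterion returns the mean loss over each batch, and `epoch_average` is a hypothetical helper, not part of the original code.

```python
def epoch_average(batch_losses, batch_sizes):
    """Sample-weighted mean of per-batch mean losses."""
    total = sum(loss * size for loss, size in zip(batch_losses, batch_sizes))
    count = sum(batch_sizes)
    return total / count

# Three batches of 24 samples with mean losses 2.0, 4.0 and 6.0.
losses = [2.0, 4.0, 6.0]
sizes = [24, 24, 24]
print(epoch_average(losses, sizes))  # 4.0
```

Dividing by the number of samples actually seen keeps the reported value comparable across epochs even if the dataset size changes.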

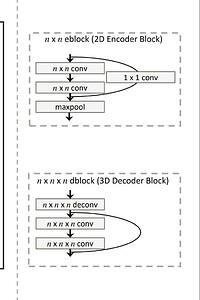

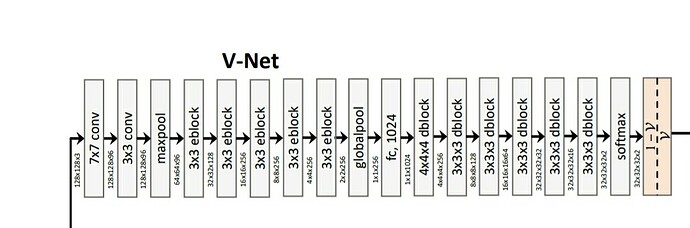

Model –