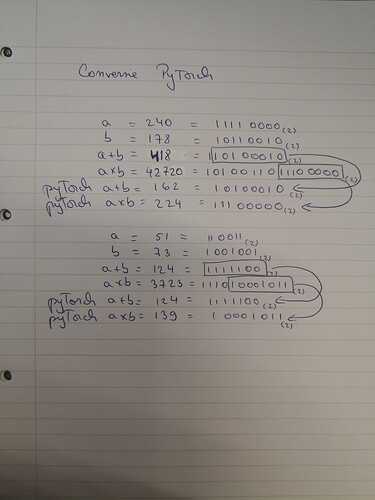

Hi, I am trying to understand how the PyTorch multiplications on 8 bits work when a model is quantized. Inside each convolution, do you do modulo 256 operations, which means keeping the LSB?

I tried to do simple ByteTensor operations and I saw that the LSBs are kept always. But I need to know if it is the same inside a quantized model.

Thanks a lot.