I noticed a difference in the ResNet architecture between Caffe and PyTorch. It concerns the downsample operation:

In PyTorch, the downsample is applied at the end of each Bottleneck's forward pass (if needed), as shown below:

    class Bottleneck(nn.Module):
        expansion = 4

        def __init__(self, inplanes, planes, stride=1, downsample=None):
            super(Bottleneck, self).__init__()
            self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)
            self.bn1 = nn.BatchNorm2d(planes)
            self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,
                                   padding=1, bias=False)
            self.bn2 = nn.BatchNorm2d(planes)
            self.conv3 = nn.Conv2d(planes, planes * 4, kernel_size=1, bias=False)
            self.bn3 = nn.BatchNorm2d(planes * 4)
            self.relu = nn.ReLU(inplace=True)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            residual = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)
            out = self.relu(out)

            out = self.conv3(out)
            out = self.bn3(out)

            if self.downsample is not None:
                residual = self.downsample(x)

            out += residual
            out = self.relu(out)

            return out

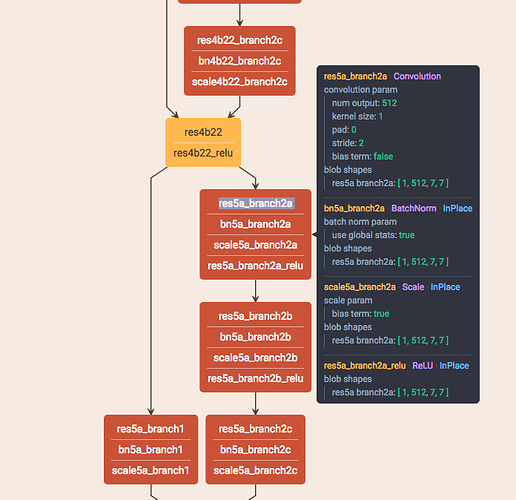

But in the Caffe prototxt, the downsample is positioned at the top of each Bottleneck.

I am curious about the reason for this. What effect does this difference have?
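One way to check whether the position matters is to run the same block with the shortcut computed first and last, then compare the outputs. Below is a minimal sketch, assuming the Caffe prototxt feeds the block input into the downsample branch in the same way `self.downsample(x)` does in the PyTorch code. The `shortcut_first` flag, the `_main_branch` method, and the `make_block` helper are hypothetical names added for illustration; they are not part of torchvision or Caffe.

```python
import torch
import torch.nn as nn


class Bottleneck(nn.Module):
    """Same layers as the block quoted above, plus a hypothetical ordering flag."""
    expansion = 4

    def __init__(self, inplanes, planes, stride=1, downsample=None,
                 shortcut_first=False):
        super().__init__()
        self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(planes)
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,
                               padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(planes)
        self.conv3 = nn.Conv2d(planes, planes * 4, kernel_size=1, bias=False)
        self.bn3 = nn.BatchNorm2d(planes * 4)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        # Hypothetical flag: True mimics the assumed Caffe ordering (shortcut at the top).
        self.shortcut_first = shortcut_first

    def _main_branch(self, x):
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.relu(self.bn2(self.conv2(out)))
        return self.bn3(self.conv3(out))

    def _shortcut(self, x):
        return x if self.downsample is None else self.downsample(x)

    def forward(self, x):
        if self.shortcut_first:
            residual = self._shortcut(x)   # shortcut evaluated at the top
            out = self._main_branch(x)
        else:
            out = self._main_branch(x)
            residual = self._shortcut(x)   # shortcut evaluated at the end
        return self.relu(out + residual)


def make_block(shortcut_first):
    # 1x1 projection with stride 2 so the shortcut matches the main branch's shape.
    downsample = nn.Sequential(
        nn.Conv2d(64, 256, kernel_size=1, stride=2, bias=False),
        nn.BatchNorm2d(256),
    )
    return Bottleneck(64, 64, stride=2, downsample=downsample,
                      shortcut_first=shortcut_first).eval()


torch.manual_seed(0)
block_end = make_block(shortcut_first=False)
block_top = make_block(shortcut_first=True)
# Copy the weights so both blocks differ only in evaluation order.
block_top.load_state_dict(block_end.state_dict())

x = torch.randn(2, 64, 8, 8)
with torch.no_grad():
    out_end = block_end(x)
    out_top = block_top(x)

print(out_end.shape)
print(torch.allclose(out_end, out_top))
```

If the assumption holds, both branches read only from `x`, so the order in which they are evaluated should not change the result of the block.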