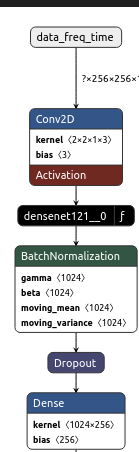

I am working on rebuilding some models from TensorFlow in PyTorch. The attached image shows an example of one such model.

The data I’m using is pulsar time-series data (256 x 256), not images. I have a couple of questions about doing this with PyTorch’s implementation of DenseNet (and other models, since I’m trying to re-create several different ones). I am fairly new to all of this, so I apologize for any noob questions.

- Can I use PyTorch’s DenseNet on data other than images? Further, can I retrain it, and should I train it from scratch or do adaptive training (fine-tuning)?

- Does this snippet of code appear to be on the right track for implementing the model pictured? If I do need to train the DenseNet model, would I have to add anything else to this, or is it enough to load the model without weights?

In this code, `cnn_layer` would be `densenet121`.

```python
import torch
import torch.nn as nn
from torch import Tensor


class _FreqBlock(nn.Module):
    def __init__(self, model: str) -> None:
        super().__init__()

        # model_params is the config dict defined elsewhere in the project
        cnn_layer = model_params[model]["freq_cnn"]  # e.g. "densenet121"
        units = model_params[model]["units"]

        # Load the architecture only; weights=None means random init (training from scratch)
        cnn = torch.hub.load("pytorch/vision", cnn_layer, weights=None)
        freq_units = cnn.classifier.out_features  # 1000 for torchvision DenseNets

        self.block = nn.Sequential(
            # Readjust the input to match the model: map 1 input channel to the 3 DenseNet expects
            nn.Conv2d(in_channels=1, out_channels=3, kernel_size=(2, 2), stride=(1, 1),
                      padding="valid", dilation=(1, 1), bias=True),
            nn.ReLU(),
            # Registered as a submodule, so its weights are trained with the rest of the block
            cnn,
            # DenseNet returns (N, freq_units), so BatchNorm1d rather than BatchNorm2d.
            # PyTorch momentum = 1 - TensorFlow/Keras momentum, so Keras' 0.99 becomes 0.01.
            nn.BatchNorm1d(num_features=freq_units, eps=0.001, momentum=0.01),
            nn.Dropout(p=0.3),
            nn.Linear(in_features=freq_units, out_features=units),
        )

    def forward(self, dm: Tensor) -> Tensor:
        return self.block(dm)
```