Hello fellow PyTorch folks,

I’ve put together a basic LR scheduler that works quite well for my use cases. I’m sharing it here in the hope that others might find it useful too.

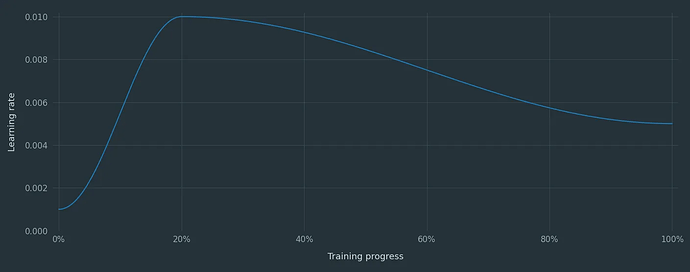

The idea is that you define a schedule with a set of frames and transitions, for example:

    scheduler = KeyframeLR(
        optimizer=my_optimizer,
        frames=[
            {"position": 0, "lr": 0.001},
            {"transition": "cos"},
            {"position": 0.2, "lr": 0.01},
            {"transition": "cos"},
            {"position": 1, "lr": 0.005},
        ],
        end=max_steps,
    )
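
For anyone curious what a schedule like this works out to numerically, here is a minimal standalone sketch of interpolating between keyframes with a linear or cosine transition. It is not the `KeyframeLR` implementation: `interpolate_lr` is a hypothetical helper, it applies one transition type to every segment, and it assumes positions are fractions of the run in strictly increasing order.

```python
import math


def interpolate_lr(progress, keyframes, transition="cos"):
    """Return the LR at `progress` for sorted (position, lr) keyframes.

    Hypothetical helper for illustration; not part of KeyframeLR.
    """
    # Outside the covered range, hold the first or last keyframe value.
    if progress <= keyframes[0][0]:
        return keyframes[0][1]
    if progress >= keyframes[-1][0]:
        return keyframes[-1][1]
    # Find the segment containing `progress` and blend its two endpoints.
    for (p0, lr0), (p1, lr1) in zip(keyframes, keyframes[1:]):
        if p0 <= progress <= p1:
            t = (progress - p0) / (p1 - p0)  # local progress in [0, 1]
            if transition == "cos":
                t = (1 - math.cos(math.pi * t)) / 2  # cosine easing
            return lr0 + (lr1 - lr0) * t


# Same keyframes as the example above, written as (position, lr) pairs.
keyframes = [(0.0, 0.001), (0.2, 0.01), (1.0, 0.005)]
lr_mid_warmup = interpolate_lr(0.1, keyframes)  # halfway through the warm-up
lr_mid_decay = interpolate_lr(0.6, keyframes)   # halfway through the decay
```

Halfway through a segment the cosine and linear curves agree (both sit at the midpoint of the two LRs); they differ in how gently they leave and approach each keyframe.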

You can have linear or cosine transitions, or pass a callable like so:

    scheduler = KeyframeLR(
        optimizer=my_optimizer,
        units="steps",
        frames=[
            {"position": 0, "lr": 0.001},
            {"position": 2_000, "lr": 0.01},
            {"transition": lambda last_lr, *_: last_lr * 0.999},
        ],
        end=10_000,
    )
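
To get a feel for what that lambda does, here is a rough sketch of the arithmetic. It assumes the callable is invoked once per step with the previous LR, which the `last_lr` argument suggests but which I have not checked against the code; `decayed_lr` is a hypothetical helper, not part of the scheduler.

```python
def decayed_lr(start_lr, factor, num_steps):
    """Apply `lr = lr * factor` once per step, `num_steps` times.

    Hypothetical helper; assumes one callable invocation per step.
    """
    lr = start_lr
    for _ in range(num_steps):
        lr *= factor
    return lr


# From step 2_000 to step 10_000 the lambda would run 8_000 times.
final_lr = decayed_lr(0.01, 0.999, 8_000)
closed_form = 0.01 * 0.999 ** 8_000  # same exponential decay without the loop
```

Under that assumption the schedule is a plain exponential decay, ending roughly between 3e-6 and 4e-6, so a factor closer to 1 is needed if the LR should stay useful for the whole run.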

Units can be percentages, steps or even time.

The code is here if you’d like to take it for a spin.

At the moment it’s just a semi-polished experiment and personal utility, so there isn’t any documentation yet, but I’ve written a short article that explains most aspects of it.

Feedback is most welcome!