Hi!

I trained a U-Net model in an adversarial setting with a discriminator. The training and validation curves look fine. For context, I used L2 loss as the generator loss and BCE loss as both the discriminator loss and the adversarial loss.

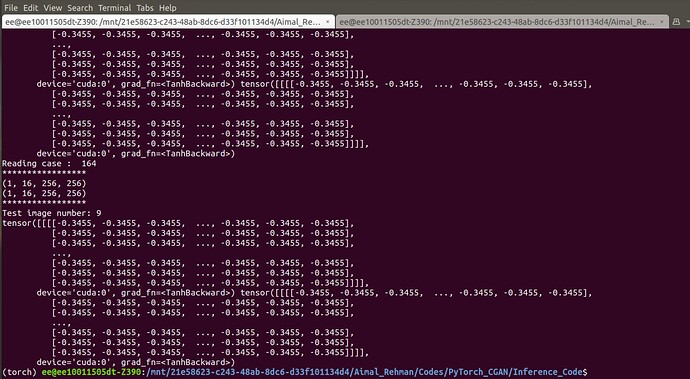

At inference, I load the trained PyTorch generator and pass unseen samples as input. Somehow, I get the same output tensor for every sample. Am I missing something?

[Screenshot of output tensor attached]
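
To make the inference step concrete, here is a minimal sketch of a sanity check for this symptom. It is not my actual code: `TinyGenerator` is a hypothetical stand-in for the U-Net, the in-memory buffer stands in for the checkpoint file, and `outputs_differ` is an illustrative helper. It loads the weights through a `state_dict`, switches to eval mode, and checks whether two different inputs give different outputs.

```python
import io

import torch
import torch.nn as nn


class TinyGenerator(nn.Module):
    """Hypothetical stand-in for the U-Net generator (not the real architecture)."""

    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(1, 4, kernel_size=3, padding=1),
            nn.BatchNorm2d(4),
            nn.ReLU(),
            nn.Conv2d(4, 1, kernel_size=3, padding=1),
        )

    def forward(self, x):
        return self.net(x)


def outputs_differ(model, a, b, atol=1e-6):
    """Return True if the model maps inputs a and b to different outputs."""
    model.eval()  # use running BatchNorm statistics, disable dropout
    with torch.no_grad():  # no gradients needed at inference
        out_a = model(a)
        out_b = model(b)
    return not torch.allclose(out_a, out_b, atol=atol)


torch.manual_seed(0)

# Simulate a saved checkpoint; a real run would save to and load from a file.
trained = TinyGenerator()
buffer = io.BytesIO()
torch.save(trained.state_dict(), buffer)
buffer.seek(0)

# Rebuild the architecture and load the saved weights into it.
generator = TinyGenerator()
generator.load_state_dict(torch.load(buffer))

# Two different inputs; first confirm they really differ after preprocessing.
x1 = torch.randn(1, 1, 16, 16)
x2 = torch.randn(1, 1, 16, 16)
inputs_differ = not torch.allclose(x1, x2)

print("inputs differ:", inputs_differ)
print("outputs differ:", outputs_differ(generator, x1, x2))
```

If the inputs differ but the outputs do not, the problem is probably in the model or in the loaded weights rather than in the data pipeline. If the inputs themselves turn out to be identical, the preprocessing or data loading is the place to look.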