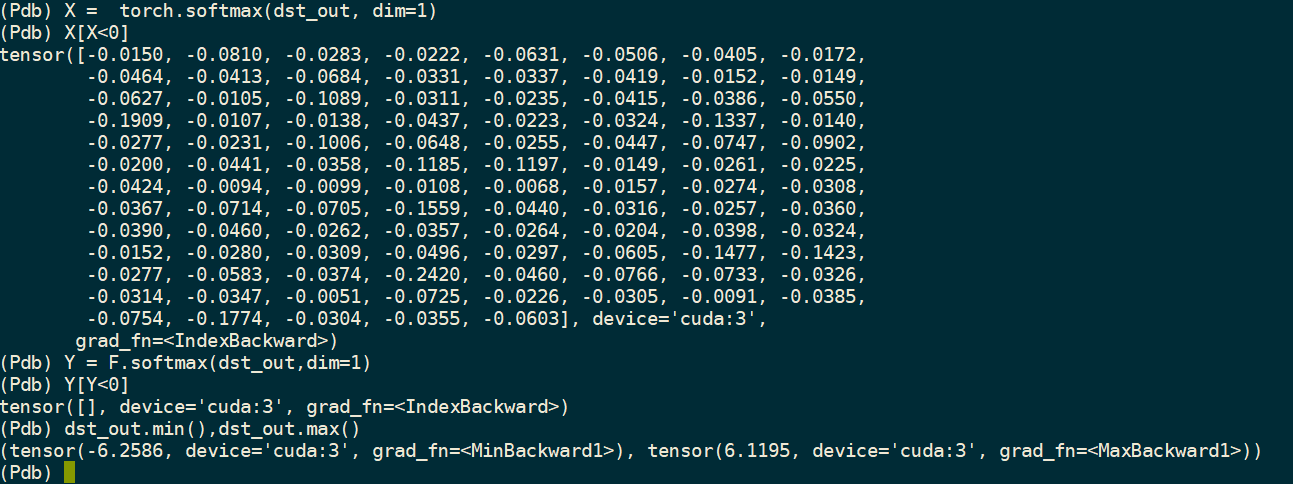

dst_out is the output.

After getting a NaN loss, I looked through the intermediate values and found negative values in the softmax output.

Could anyone explain this?

There is no negative output after applying F.softmax, since Y[Y<0] returns an empty tensor.

dst_out would be the logits, which are not bounded to a specific range.

Thanks. But X is inconsistent with Y.

I don’t quite understand it. Could you explain what exactly is inconsistent?

Note that the softmax function will map values from [-inf, inf] to [0, 1].
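To sanity-check that property, the sketch below re-implements softmax by hand and compares it with `torch.softmax` on logits spread over a wide range. It uses the common max-subtraction trick for numerical stability. That is an illustrative choice and not necessarily how PyTorch's own kernel is written.

```python
import torch

torch.manual_seed(0)

def manual_softmax(logits: torch.Tensor, dim: int = 1) -> torch.Tensor:
    # Subtracting the max along `dim` does not change the result mathematically,
    # but it keeps exp() from overflowing for large logits.
    shifted = logits - logits.max(dim=dim, keepdim=True).values
    exps = shifted.exp()  # every entry is >= 0
    return exps / exps.sum(dim=dim, keepdim=True)

# Logits are unbounded, so scale them up to stress the range.
logits = torch.randn(4, 5) * 50
probs = manual_softmax(logits, dim=1)

print((probs >= 0).all(), (probs <= 1).all())                 # all entries in [0, 1]
print(torch.allclose(probs.sum(dim=1), torch.ones(4)))        # each row sums to 1
print(torch.allclose(probs, torch.softmax(logits, dim=1)))    # matches the built-in op
```

In float32, very negative shifted logits underflow to exactly 0, which is why the range is [0, 1] in practice rather than the open interval (0, 1).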

When I run `X = torch.softmax(dst_out, dim=1)`, `X` contains negative values, as shown in line 2.

Ah, thanks for the pointer. I didn’t see the torch.softmax in the first line.

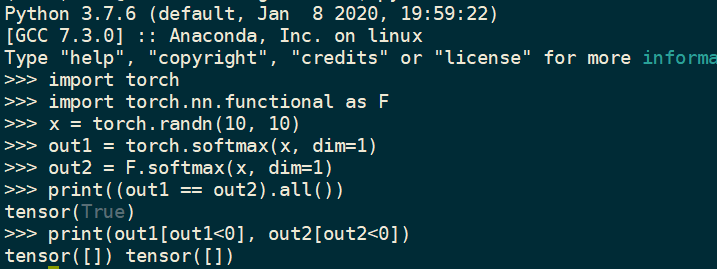

Which PyTorch version are you using? I cannot reproduce this issue with the latest nightly binary:

```python
import torch
import torch.nn.functional as F

x = torch.randn(10, 10)
out1 = torch.softmax(x, dim=1)
out2 = F.softmax(x, dim=1)

print((out1 == out2).all())
# > tensor(True)

print(out1[out1 < 0], out2[out2 < 0])
# > tensor([]) tensor([])
```

That’s really strange indeed.

Could you try to add assert statements to your original code and check for negative outputs again?
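A minimal sketch of such a check, assuming the model output is called `dst_out` as in the question. The helper name `checked_softmax` is hypothetical:

```python
import torch

def checked_softmax(dst_out: torch.Tensor, dim: int = 1) -> torch.Tensor:
    # Check the inputs first, so NaN/Inf logits coming from earlier layers
    # are not mistaken for a softmax problem (softmax of NaN/Inf yields NaN).
    assert torch.isfinite(dst_out).all(), "dst_out contains NaN or Inf"
    X = torch.softmax(dst_out, dim=dim)
    # Softmax outputs must be non-negative; a failure here points at the op
    # (or the installed build) rather than at the model.
    assert (X >= 0).all(), f"negative softmax outputs: {X[X < 0]}"
    return X

# Stand-in logits for illustration; in practice pass the real dst_out.
dst_out = torch.randn(8, 3)
X = checked_softmax(dst_out)
```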

PS: you can post code snippets by wrapping them in three backticks (```), which makes debugging easier.

My torch version is 1.5.0. After adding the assert statement, the assertion is triggered.

By the way, it works when I change `torch.softmax(…)` to `torch.log_softmax(…).exp()`.
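For reference, `log_softmax(x).exp()` is mathematically equal to `softmax(x)`, and because `exp()` never returns a negative number, the workaround is non-negative by construction. A small sketch comparing the two on random stand-in data `x` (not the original `dst_out`):

```python
import torch

torch.manual_seed(0)
x = torch.randn(10, 10)

# Direct softmax vs. the workaround from the thread.
direct = torch.softmax(x, dim=1)
via_log = torch.log_softmax(x, dim=1).exp()

# exp() is non-negative, so the workaround cannot produce negative values
# (very negative log-probabilities can still underflow to exactly 0).
print((via_log >= 0).all())
print(torch.allclose(direct, via_log, atol=1e-6))
```

This only shows the two formulations agree on a healthy build; it does not explain why `torch.softmax` returned negative values in 1.5.0.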

Could you update to 1.6 and rerun the code?