I set `requires_grad=False` for some parameters, and their `.grad` is zero. However, when I call `optim.step()`, those parameters are still updated.

Here is my code:

```python
for p in self.G.enc_layers.parameters():
    requires_grad_flag = p.requires_grad
    p.requires_grad = False

z_rec = self.G(img_fake, mode='enc')
gzr_loss = F.mse_loss(z_rec[-1], zs_a[-1])

import pdb
pdb.set_trace()

# the problem appears in the following three lines
self.optim_G.zero_grad()
(self.lambda_gzr * gzr_loss).backward(retain_graph=True)
self.optim_G.step()

for p in self.G.enc_layers.parameters():
    p.requires_grad = requires_grad_flag
```
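Side note on the freeze/unfreeze loops: `requires_grad_flag` is overwritten on every iteration, so only the last parameter's original flag is restored for all of them. A minimal sketch of a helper that saves and restores each parameter's own flag (the name `frozen` is hypothetical, not a PyTorch API). Note that it only toggles `requires_grad`; on its own it does not stop an optimizer from touching parameters that still have a `.grad` tensor.

```python
import contextlib

import torch.nn as nn


@contextlib.contextmanager
def frozen(module: nn.Module):
    """Hypothetical helper: temporarily set requires_grad=False on every
    parameter of `module`, then restore each parameter's own original flag."""
    params = list(module.parameters())
    saved = [p.requires_grad for p in params]  # one flag per parameter
    try:
        for p in params:
            p.requires_grad_(False)
        yield
    finally:
        for p, flag in zip(params, saved):
            p.requires_grad_(flag)
```

Usage would look like `with frozen(self.G.enc_layers): ...` around the forward/backward/step lines.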

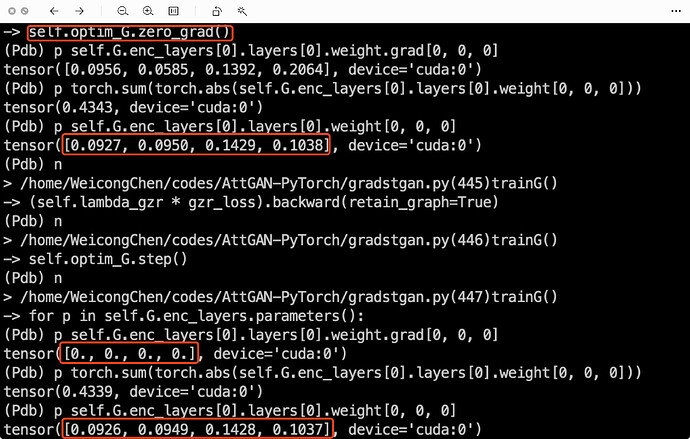

The picture below shows the values during debug.
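The post does not say which optimizer `optim_G` is. A minimal standalone sketch that reproduces the same symptom, assuming it is a stateful optimizer such as `torch.optim.Adam`: a parameter whose `.grad` is an all-zero tensor is still stepped (its momentum from earlier steps keeps moving it), while a parameter whose `.grad` is `None` is skipped. The single parameter `w` is a hypothetical stand-in for an encoder weight.

```python
import torch

# Hypothetical single parameter standing in for an encoder weight.
w = torch.nn.Parameter(torch.ones(3))
opt = torch.optim.Adam([w], lr=0.1)

# Step 1: a normal update, so Adam builds up moment estimates for w.
opt.zero_grad()
(w ** 2).sum().backward()
opt.step()

# Step 2: freeze w and zero its existing grad in place (grad is zero, not None).
w.requires_grad_(False)
opt.zero_grad(set_to_none=False)
before = w.detach().clone()
opt.step()
moved_with_zero_grad = not torch.equal(before, w.detach())  # Adam's momentum still moves w

# Step 3: with grad set to None, the optimizer skips w entirely.
opt.zero_grad(set_to_none=True)
before = w.detach().clone()
opt.step()
unchanged_with_none_grad = torch.equal(before, w.detach())
```

A `weight_decay` setting would similarly change a parameter whose gradient is zero but not `None`.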