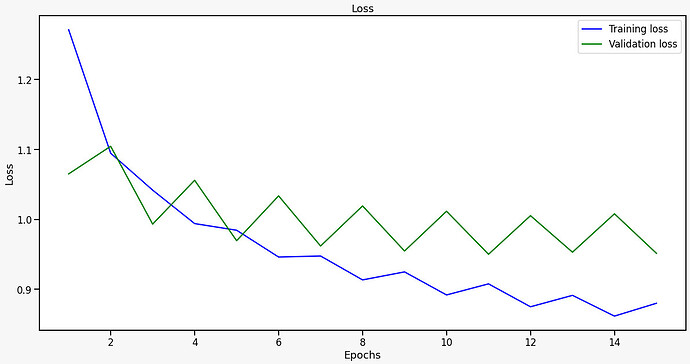

I used cosine annealing on my transformer network and got the loss curve shown above.

I used cross entropy as my loss function, and these are my scheduler parameters, with a maximum learning rate of 1e-3:

    sch = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, len(train_data), eta_min=0, last_epoch=-1)
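For reference, here is a minimal sketch of the closed-form cosine schedule, to show how the second argument (`T_max`) relates to the number of `sch.step()` calls. `cosine_lr` is a hypothetical helper written for illustration, not a PyTorch function, and it assumes the standard formula `eta_min + 0.5 * (eta_max - eta_min) * (1 + cos(pi * t / T_max))`.

```python
import math

def cosine_lr(step, t_max, eta_max=1e-3, eta_min=0.0):
    """Closed-form cosine annealing value after `step` scheduler steps.

    Hypothetical helper for illustration only; eta_max and eta_min
    mirror the max lr (1e-3) and eta_min (0) from the question.
    """
    return eta_min + 0.5 * (eta_max - eta_min) * (1 + math.cos(math.pi * step / t_max))

# The learning rate reaches eta_min after t_max steps, then climbs back
# toward eta_max if stepping continues, so the curve has period 2 * t_max.
t_max = 100
schedule = [cosine_lr(s, t_max) for s in range(0, 2 * t_max + 1, 50)]
print(schedule)
```

Under this assumption, if the scheduler is stepped once per batch, the learning rate only decays monotonically over the whole run when `T_max` equals the total number of batches across all epochs.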

This is my training code. What can I do to improve this loss curve?

    for epoch in tqdm(range(epochs)):
        model.train()
        epoch_loss = 0
        for batch in tqdm(train_iterator):
            src_inp = batch['src_sentence'].permute(1, 0).cuda()
            trg_inp = batch['trg_sentence'].permute(1, 0).cuda()
            in_tgt = trg_inp[:-1, :]
            exp_tgt = trg_inp[1:, :]
            optimizer.zero_grad()
            output = model(src_inp, in_tgt)
            output = output.view(-1, output.shape[-1])
            trg = exp_tgt.reshape(-1)
            loss = criterion1(output, trg)
            loss.backward()
            torch.nn.utils.clip_grad_norm_(model.parameters(), 0.25)
            for p in model.parameters():
                p.data.add_(-lr, p.grad)
            optimizer.step()
            sch.step()
            epoch_loss += loss.item()
        y.append(epoch_loss/len(train_iterator))

        epoch_loss = 0
        model.eval()
        for batch in tqdm(val_iterator):
            src_inp = batch['src_sentence'].permute(1, 0).cuda()
            trg_inp = batch['trg_sentence'].permute(1, 0).cuda()
            in_tgt = trg_inp[:-1, :]
            exp_tgt = trg_inp[1:, :]
            optimizer.zero_grad()
            output = model(src_inp, in_tgt)
            output = output.view(-1, output.shape[-1])
            trg = exp_tgt.reshape(-1)
            loss = criterion1(output, trg)
            epoch_loss += loss.item()
            if math.isnan(loss.item()):
                print(src_inp)
        y_val.append(epoch_loss/len(val_iterator))
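
One variation that may be worth trying, sketched on a toy model rather than the transformer above: let `optimizer.step()` be the only weight update (the manual `p.data.add_(-lr, p.grad)` loop appears to apply a second update with a fixed `lr` that ignores the scheduler), and set `T_max` to the total number of scheduler steps. The function `run_toy_training`, the `nn.Linear` stand-in, the random data, and the choice of SGD are all assumptions made for illustration.

```python
import torch
from torch import nn

def run_toy_training(epochs=2, steps_per_epoch=5, max_lr=1e-3):
    """Hypothetical toy loop: one optimizer update per batch, cosine decay over the whole run."""
    torch.manual_seed(0)
    model = nn.Linear(8, 4)  # toy stand-in for the transformer (assumption)
    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=max_lr)

    # T_max counts scheduler steps. With one sch.step() per batch,
    # it is set here to the total number of batches in the run.
    total_steps = epochs * steps_per_epoch
    sch = torch.optim.lr_scheduler.CosineAnnealingLR(
        optimizer, T_max=total_steps, eta_min=0
    )

    lrs, losses = [], []
    for _ in range(epochs):
        model.train()
        for _ in range(steps_per_epoch):
            x = torch.randn(16, 8)               # random inputs (assumption)
            target = torch.randint(0, 4, (16,))  # random class labels (assumption)

            optimizer.zero_grad()
            loss = criterion(model(x), target)
            loss.backward()
            torch.nn.utils.clip_grad_norm_(model.parameters(), 0.25)
            optimizer.step()  # the only weight update; no manual p.data.add_
            sch.step()        # one scheduler step per batch

            lrs.append(sch.get_last_lr()[0])
            losses.append(loss.item())
    return lrs, losses

lrs, losses = run_toy_training()
print(lrs[0], lrs[-1])
```

In this sketch the learning rate falls steadily from just under `max_lr` to roughly zero by the last batch. Whether that improves the real loss curve would still need to be checked on the actual model and data.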