Hello, guys!

I am incorporating a copy mechanism into a transformer model and using cross-entropy loss for optimization.

Typically, I would use

loss_fn = torch.nn.NLLLoss()

log_softmax = torch.nn.LogSoftmax(dim=-1)

predictions = log_softmax(logits)

loss = loss_fn(predictions, targets)

But with the copy mechanism, I have to compute the log probability myself:

probability = p_gen * generation_probability + (1 - p_gen) * copy_probability

loss_fn = torch.nn.NLLLoss()

loss = loss_fn(torch.log(probability), targets)

This means I cannot use log_softmax, which is what normally provides numerical stability.
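To make the concern concrete, here is a minimal sketch of how taking `torch.log` of an explicit probability can blow up in half precision. The logit values are made up for illustration, and the fp16 storage step is an assumption about where the precision is lost in my setup.

```python
import torch
import torch.nn.functional as F

# Hypothetical float32 logits for a two-class toy case.
logits = torch.tensor([0.0, -30.0])

# Naive path: materialize the probabilities, store them in fp16, then take the log.
# exp(-30) is about 9.4e-14, below the smallest positive fp16 value, so it becomes 0.
probs_fp16 = F.softmax(logits, dim=-1).half()
naive_log = torch.log(probs_fp16.float())  # log(0) gives -inf

# Stable path: log_softmax never forms the tiny probability explicitly.
stable_log = F.log_softmax(logits, dim=-1).half()  # roughly [0, -30], representable in fp16

print(naive_log)
print(stable_log)
```

If the target happens to sit at an index whose log probability is -inf, the loss becomes infinite, and that can plausibly turn into nan during the backward pass.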

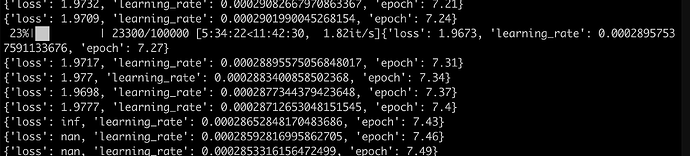

As a result, my training runs into nan losses. What should I do to avoid this?

BTW, I am using fp16.
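One direction that might help is to keep the whole mixture in log space, since log(p_gen * P_gen + (1 - p_gen) * P_copy) can be written as a logsumexp over the two branches. The sketch below is only that, a sketch under assumptions: `copy_mixture_nll` is a hypothetical helper, `p_gen_logit` is assumed to be the pre-sigmoid gate, and `copy_log_probs` is assumed to be already available as finite log probabilities over the vocabulary (how to build it from attention weights is not shown). The loss math is cast to float32 even when the inputs are fp16.

```python
import torch
import torch.nn.functional as F


def copy_mixture_nll(gen_logits, copy_log_probs, p_gen_logit, targets):
    """Hypothetical helper: NLL of p_gen * P_gen + (1 - p_gen) * P_copy in log space.

    gen_logits:     (batch, vocab) raw generation scores
    copy_log_probs: (batch, vocab) log copy distribution, assumed finite
    p_gen_logit:    (batch, 1) gate value before the sigmoid
    targets:        (batch,) target token indices
    """
    # Do the loss math in float32 even if the model runs in fp16.
    gen_logits = gen_logits.float()
    copy_log_probs = copy_log_probs.float()
    p_gen_logit = p_gen_logit.float()

    log_gen = F.log_softmax(gen_logits, dim=-1)        # log P_gen
    log_p_gen = F.logsigmoid(p_gen_logit)              # log p_gen
    log_one_minus_p_gen = F.logsigmoid(-p_gen_logit)   # log(1 - p_gen), as 1 - sigmoid(x) = sigmoid(-x)

    # Stack the two branches and combine them with logsumexp instead of log(sum(exp)).
    branches = torch.stack(
        [log_p_gen + log_gen, log_one_minus_p_gen + copy_log_probs], dim=0
    )
    log_probability = torch.logsumexp(branches, dim=0)
    return F.nll_loss(log_probability, targets)


# Toy usage with made-up shapes and deliberately large logits, stored in fp16.
torch.manual_seed(0)
gen_logits = (torch.randn(4, 10) * 20).half()
copy_log_probs = F.log_softmax(torch.randn(4, 10) * 20, dim=-1).half()
p_gen_logit = torch.randn(4, 1).half()
targets = torch.randint(0, 10, (4,))

loss = copy_mixture_nll(gen_logits, copy_log_probs, p_gen_logit, targets)
print(loss)
```

In this form no probability is ever exponentiated and then logged again, so small values should not underflow the way they can with `torch.log(probability)`. Whether this alone removes the nan in a real model is something I have not verified.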