In my denoising work, I use torch.optim.lr_scheduler.StepLR to adjust the learning rate. However, calling scheduler.step() and calling scheduler.step(ave_train_loss) at the end of each training epoch seem to give different results.

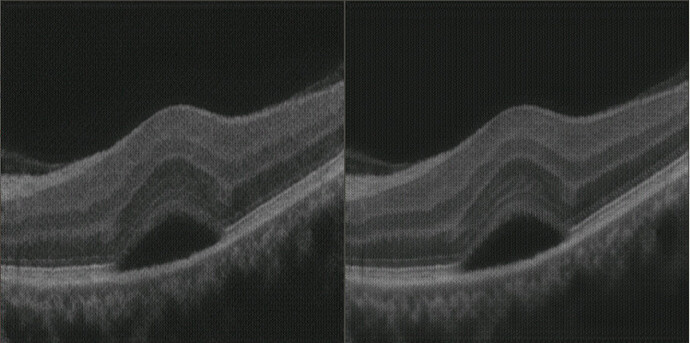

After training, I tested both models on the same images and got the result shown in the figure.

The left and right figures use scheduler.step() and scheduler.step(ave_train_loss) respectively. I repeated the training and testing several times under the same conditions and got almost the same results each time.

I couldn't find anything in the source code that explains this. I would expect that passing an argument to scheduler.step() has no effect for the StepLR scheduler:

    class _LRScheduler(object):
        ...

        def step(self, epoch=None):
            # Raise a warning if old pattern is detected
            # https://github.com/pytorch/pytorch/issues/20124
            if self._step_count == 1:
                if not hasattr(self.optimizer.step, "_with_counter"):
                    warnings.warn("Seems like `optimizer.step()` has been overridden after learning rate scheduler "
                                  "initialization. Please, make sure to call `optimizer.step()` before "
                                  "`lr_scheduler.step()`. See more details at "
                                  "https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate", UserWarning)

                # Just check if there were two first lr_scheduler.step() calls before optimizer.step()
                elif self.optimizer._step_count < 1:
                    warnings.warn("Detected call of `lr_scheduler.step()` before `optimizer.step()`. "
                                  "In PyTorch 1.1.0 and later, you should call them in the opposite order: "
                                  "`optimizer.step()` before `lr_scheduler.step()`. Failure to do this "
                                  "will result in PyTorch skipping the first value of the learning rate schedule. "
                                  "See more details at "
                                  "https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate", UserWarning)
            self._step_count += 1

The main part of my code is as follows:

    # optimizer and scheduler
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
    scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=50, gamma=0.5)

    if train_mode:
        for epoch in range(1000):
            ave_train_loss = 0
            model.train()
            for i, (image, label) in enumerate(train_loader):
                optimizer.zero_grad()
                image = image.to(device)
                label = label.to(device)
                output = model(image)
                full_loss = critetion(output, label)
                full_loss.backward()
                optimizer.step()
                # accumulate the batch loss weighted by batch size
                ave_train_loss += image.size()[0] * full_loss.item()
            # average loss per sample over the epoch
            ave_train_loss /= len(train_dataset)
            scheduler.step(ave_train_loss)
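
One thing worth checking is how the argument is interpreted. The signature quoted above names the parameter `epoch`, so a value passed to `scheduler.step(...)` may be stored as the epoch counter instead of being ignored. The sketch below does not call PyTorch. It assumes the closed-form StepLR rule `base_lr * gamma ** (epoch // step_size)`, and the helper `step_lr` and the list `hypothetical_losses` are made up for illustration. Actual behaviour may differ between PyTorch versions.

```python
def step_lr(base_lr, gamma, step_size, epoch):
    """Assumed closed-form StepLR rule: decay by gamma every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


base_lr, gamma, step_size = 1e-3, 0.5, 50

# scheduler.step(): the internal epoch counter advances 1, 2, 3, ...
lr_with_counter = [step_lr(base_lr, gamma, step_size, e) for e in range(1, 1001)]

# scheduler.step(ave_train_loss): ASSUMPTION, the loss is taken as the epoch.
# These loss values are hypothetical, not measured results.
hypothetical_losses = [0.08, 0.05, 0.03, 0.02]
lr_with_loss = [step_lr(base_lr, gamma, step_size, loss) for loss in hypothetical_losses]

print(lr_with_counter[48], lr_with_counter[49])  # last epoch before decay, first after
print(lr_with_loss)  # any loss below step_size floors to 0, so no decay is applied
```

If that assumption holds, the run with `scheduler.step(ave_train_loss)` would train at a constant learning rate of 1e-3, while the run with `scheduler.step()` halves it every 50 epochs. Printing `optimizer.param_groups[0]['lr']` once per epoch in both runs should confirm or rule this out.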