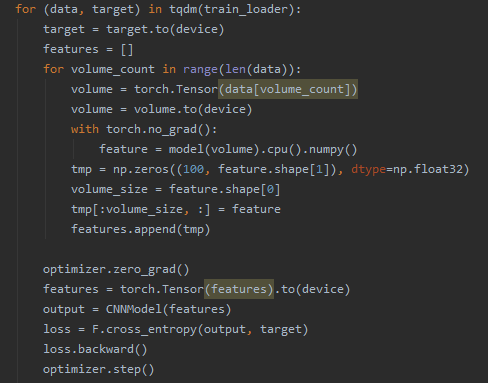

I tried to use ResNet50 to extract image features and classify them with our own CNNNet.

I want to know whether torch.no_grad() prevents optimizer.step() from updating the parameters of ResNet50.

If so, how can I change it? Without torch.no_grad(), PyCharm reports an out-of-memory error.

Indeed, backpropagation needs more memory, because the intermediate activations have to be kept for the backward pass. There is no issue with that. You may need to use a lighter network.
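Switching to a lighter network is one option. Another common way to lower peak memory while still backpropagating through the whole model is gradient accumulation, which was not mentioned in this thread. You split each batch into smaller micro-batches, call backward() on each one, and step the optimizer once. The sketch below is a minimal illustration under stated assumptions. A tiny nn.Sequential stands in for ResNet50 + CNNNet, and `accumulate_step` and `micro_batch` are hypothetical names. With BatchNorm layers, which ResNet50 has, each micro-batch computes its own batch statistics, so the result is close to a full-batch step but not identical.

```python
import torch
import torch.nn as nn

def accumulate_step(model, optimizer, loss_fn, inputs, targets, micro_batch):
    """One optimizer step over `inputs`, processed in chunks of `micro_batch`.

    Only one chunk's activations are alive at a time, which lowers peak memory
    compared with a single forward/backward pass on the full batch.
    Assumes `loss_fn` averages over the batch (reduction="mean").
    """
    optimizer.zero_grad()
    n = inputs.shape[0]
    for start in range(0, n, micro_batch):
        x = inputs[start:start + micro_batch]
        y = targets[start:start + micro_batch]
        # Weight each chunk by its share of the batch so the summed gradient
        # equals the gradient of the mean loss over the full batch.
        loss = loss_fn(model(x), y) * (x.shape[0] / n)
        loss.backward()  # gradients add up in each parameter's .grad
    optimizer.step()

if __name__ == "__main__":
    torch.manual_seed(0)
    # Hypothetical stand-in for "ResNet50 features + CNNNet classifier".
    model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 3))
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    inputs = torch.randn(32, 8)
    targets = torch.randint(0, 3, (32,))
    accumulate_step(model, optimizer, nn.CrossEntropyLoss(), inputs, targets, micro_batch=8)
```

The trade-off is more forward/backward calls per step (slower) in exchange for holding only a quarter of the activations at once in this example.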

So ResNet50 can't be updated inside torch.no_grad(), right?

no_grad disables the autograd machinery: no gradients are recorded for anything computed inside torch.no_grad(), so nothing can be updated through it.
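Here is a small sketch of what this means for the optimizer. Two tiny nn.Linear layers serve as hypothetical stand-ins: `backbone` plays ResNet50 and `head` plays CNNNet. The backbone's forward pass runs under torch.no_grad(), so its parameters never receive a .grad and optimizer.step() leaves them unchanged. The head runs outside no_grad and is updated normally. If the whole forward pass were inside no_grad, loss.backward() would raise an error, because the loss would not require grad.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-ins: `backbone` plays ResNet50, `head` plays CNNNet.
backbone = nn.Linear(4, 6)
head = nn.Linear(6, 2)
optimizer = torch.optim.SGD(
    list(backbone.parameters()) + list(head.parameters()), lr=0.1
)

backbone_before = backbone.weight.detach().clone()
head_before = head.weight.detach().clone()

x = torch.randn(5, 4)
y = torch.randint(0, 2, (5,))

with torch.no_grad():
    features = backbone(x)  # no graph recorded, so features.requires_grad is False

logits = head(features)  # the graph starts here, so only `head` is tracked
loss = nn.functional.cross_entropy(logits, y)

optimizer.zero_grad()
loss.backward()
optimizer.step()

print(backbone.weight.grad)                           # None: autograd never saw it
print(torch.equal(backbone.weight, backbone_before))  # True: step() skipped it
print(torch.equal(head.weight, head_before))          # False: the head was updated
```

This "frozen feature extractor" pattern saves memory, but ResNet50 then stays fixed. To fine-tune ResNet50 itself, its forward pass has to run with autograd enabled.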

It is meant to speed up evaluation and reduce memory usage during inference. You cannot use it for regular training, and as you have seen, it won't work there.
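A typical split looks like the sketch below. It assumes a generic `model` and an iterable of (inputs, targets) batches, and the `train_one_epoch`/`evaluate` names are hypothetical. Training runs with autograd enabled so that backward() and optimizer.step() work. Only evaluation is wrapped in torch.no_grad(), together with model.eval() so that layers such as BatchNorm and Dropout behave in inference mode.

```python
import torch
import torch.nn as nn

def train_one_epoch(model, loader, optimizer, loss_fn):
    model.train()  # BatchNorm/Dropout in training mode
    for inputs, targets in loader:
        optimizer.zero_grad()
        loss = loss_fn(model(inputs), targets)  # autograd records the graph here
        loss.backward()
        optimizer.step()

def evaluate(model, loader):
    model.eval()  # BatchNorm uses running stats, Dropout is disabled
    correct = total = 0
    with torch.no_grad():  # no graph is stored: less memory, faster forward
        for inputs, targets in loader:
            preds = model(inputs).argmax(dim=1)
            correct += (preds == targets).sum().item()
            total += targets.numel()
    return correct / total

if __name__ == "__main__":
    torch.manual_seed(0)
    # Hypothetical small model and random data in place of ResNet50/CNNNet.
    model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 3))
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    data = [(torch.randn(4, 8), torch.randint(0, 3, (4,))) for _ in range(5)]
    train_one_epoch(model, data, optimizer, nn.CrossEntropyLoss())
    print(f"accuracy: {evaluate(model, data):.2f}")
```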

Thanks, it seems that I need to deal with the memory issue.