I am training a network with a siamese structure, meaning that it has two inputs that are propagated through the same base network.
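For context, a minimal siamese setup in PyTorch might look like the sketch below. The `SiameseNet` class, the layer sizes and the pairwise-distance loss are hypothetical stand-ins, not the network from the picture. The only point is that a single `base` module is called twice, so both inputs pass through the same weights.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SiameseNet(nn.Module):
    """Hypothetical siamese network: both inputs share one base network."""

    def __init__(self):
        super().__init__()
        # Hypothetical base network; any nn.Module could be used here.
        self.base = nn.Sequential(
            nn.Conv2d(1, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
        )

    def forward(self, x1, x2):
        # The same module (and therefore the same parameters) processes both inputs.
        e1 = self.base(x1)
        e2 = self.base(x2)
        return e1, e2


model = SiameseNet()
x1 = torch.randn(4, 1, 16, 16)
x2 = torch.randn(4, 1, 16, 16)
e1, e2 = model(x1, x2)

# Hypothetical loss: mean Euclidean distance between the two embeddings.
loss = F.pairwise_distance(e1, e2).mean()
```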

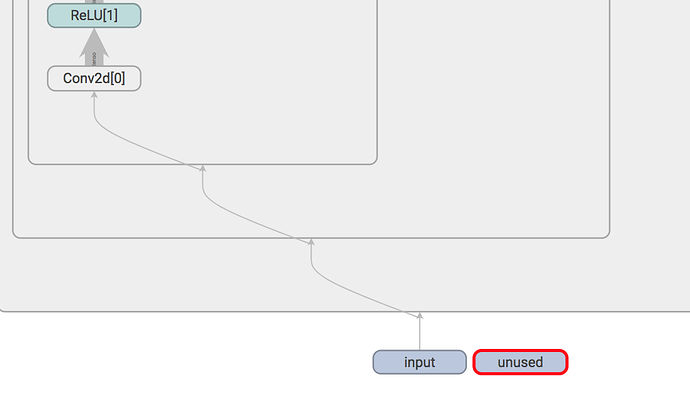

When I visualize the graph using tensorboardX, it looks as if one input is used and the other is not.

I do not know whether this is an issue with tensorboardX, which may store only the most recent graph, or an error in my network.
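One way to rule out an error in the network itself, independently of tensorboardX, is to check whether both inputs actually reach the loss: an input that never contributes keeps `.grad` equal to `None` after `backward()`. This is a sketch assuming a hypothetical small base network and a squared-distance loss; the real model and loss would be swapped in.

```python
import torch
import torch.nn as nn

# Hypothetical shared base network; substitute the real one.
base = nn.Sequential(nn.Linear(6, 4), nn.Tanh())

# Track gradients on the inputs themselves so their contribution is visible.
x1 = torch.randn(3, 6, requires_grad=True)
x2 = torch.randn(3, 6, requires_grad=True)

e1, e2 = base(x1), base(x2)
loss = (e1 - e2).pow(2).sum()  # hypothetical siamese loss
loss.backward()

# If an input never reached the loss, its .grad stays None.
for name, x in (("x1", x1), ("x2", x2)):
    used = x.grad is not None and x.grad.abs().sum().item() > 0
    print(name, "contributes to loss:", used)
```

If both inputs report `True`, the loss depends on both branches, and a graph that shows only one of them points at the visualization rather than at the model.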

I would like to inspect the graph built during the forward pass of the first input, before the second input is forwarded.
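A sketch of one way to do this, assuming the base network can be called on the first input by itself: the output of that call carries a `grad_fn` that is the root of the first branch's subgraph, and that subgraph is already complete before the second input is forwarded. The network and shapes here are hypothetical.

```python
import torch
import torch.nn as nn

# Hypothetical shared base network standing in for the siamese base.
base = nn.Sequential(nn.Linear(6, 4), nn.ReLU(), nn.Linear(4, 2))

x1 = torch.randn(3, 6, requires_grad=True)
x2 = torch.randn(3, 6, requires_grad=True)

# Forward only the first input; e1.grad_fn is the root of its subgraph.
e1 = base(x1)
first_root = e1.grad_fn

# The first-branch graph can already be used on its own, e.g. to differentiate
# through it. retain_graph=True keeps it alive for a later siamese loss.
(g1,) = torch.autograd.grad(e1.sum(), x1, retain_graph=True)

# The second pass builds a new subgraph; first_root is not replaced.
e2 = base(x2)
```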

I know that one can walk back to predecessor nodes in the graph using:

`loss.grad_fn.next_functions[0][0].next_functions[0][0]....next_functions[0][0]`
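Chaining `next_functions[0][0]` by hand only follows the first edge at each step. A small recursive helper can visit every predecessor instead. The `walk_graph` name is hypothetical, and the helper relies only on the `grad_fn` and `next_functions` attributes. Visited nodes are kept in a set so that shared nodes (for example the gradient accumulator of a weight used by both siamese branches) are listed once.

```python
import torch
import torch.nn as nn


def walk_graph(fn, depth=0, seen=None):
    """Depth-first traversal of the autograd graph starting at a grad_fn node.

    Returns a list of (depth, node_name) tuples; shared nodes are visited once.
    """
    if seen is None:
        seen = set()
    if fn is None or fn in seen:
        return []
    seen.add(fn)
    nodes = [(depth, type(fn).__name__)]
    # next_functions is a tuple of (node, input_index) pairs; node is None for
    # inputs that do not require gradients.
    for next_fn, _ in fn.next_functions:
        nodes.extend(walk_graph(next_fn, depth + 1, seen))
    return nodes


# Usage on a tiny hypothetical model.
layer = nn.Linear(3, 2)
x = torch.randn(5, 3)
loss = layer(x).relu().sum()
for depth, name in walk_graph(loss.grad_fn):
    print("  " * depth + name)
```

Leaf parameters show up as `AccumulateGrad` nodes, which is where a module's weights enter the graph.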

Is there a way to obtain the grad_fn attribute directly from an instance of nn.Module, such as Conv2d[0] in the picture? The weights are stored in Conv2d.weight, but is there a Variable where the intermediate value of the forward propagation is stored?
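A standard layer such as nn.Conv2d does not store its forward output, but a forward hook registered with `register_forward_hook` can capture it, and the captured tensor's `grad_fn` is that layer's node in the graph. In this sketch the network is a hypothetical stand-in for the one in the picture. Because a siamese base is called once per input, the hook fires twice, so outputs are appended to a list rather than overwritten.

```python
import torch
import torch.nn as nn

# Hypothetical shared base network standing in for the one in the question.
base = nn.Sequential(
    nn.Conv2d(1, 4, kernel_size=3),
    nn.ReLU(),
    nn.Conv2d(4, 8, kernel_size=3),
)

captured = []  # one entry per forward call of the hooked layer


def save_output(module, inputs, output):
    # Called right after module.forward; output is the intermediate tensor
    # and output.grad_fn is its node in the autograd graph.
    captured.append(output)


# Hook the first Conv2d (the analogue of Conv2d[0] in the picture).
handle = base[0].register_forward_hook(save_output)

x1 = torch.randn(2, 1, 10, 10)
x2 = torch.randn(2, 1, 10, 10)
out1 = base(x1)  # first siamese branch
out2 = base(x2)  # second branch, same module and weights

handle.remove()  # stop capturing once done

first_act, second_act = captured
print(first_act.shape, type(first_act.grad_fn).__name__)
```

If the following layer modified its input in place (for example `nn.ReLU(inplace=True)`), the captured tensor would change afterwards; storing `output.detach().clone()` preserves the values, but the copy no longer has a `grad_fn`.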