Hi all,

The model that I’m trying to train doesn’t seem to improve its accuracy. I’m used to the MLPClassifier from scikit-learn because it’s what we used in our data science classes, but I was hoping to use PyTorch to create more advanced models. I’m using the Skorch library wrapper to remove a lot of boilerplate code.

Purpose of the model

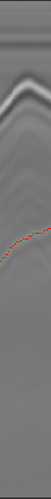

I’m working with radar data of road surfaces. In the data there are lots of echo’s of the road surface. The purpose of the model is to detect the actual road. When converting a slice of the data to an image it looks like this:

The red line is what scikit-learn’s MLPClassifier predicts, and the green dots are the actual road surfaces, which were manually annotated to build the training set. With the MLPClassifier I was able to use the position as the target label. The MLPClassifier worked on a per-project basis, but got too slow when I tried to combine all the projects. I was hoping to use PyTorch, which can use my GPU, to create a more advanced model.

Data

The data consists of two files: the input file and the prediction/label file. I’ve already processed them so that the rows of the two files correspond to each other.

Input file

The input data is a CSV file where every row consists of 512 floating point numbers. The numbers range from roughly -5000 to 5000.

| 0 | 1 | … | 510 | 511 |
|---|---|---|---|---|
| 512.0 | 230.0 | … | -1836.0 | -1836.0 |
| 512.0 | 225.0 | … | -1802.0 | -1802.0 |
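To make the layout above concrete, here is a minimal sketch of a sanity check for it: every row should have 512 values, and the overall value range should be roughly what I described. The helper name `summarize_rows` and the synthetic sample are made up for illustration; they stand in for my real file, and I'm assuming the first line of the CSV is the header with the column names.

```python
import csv
import io
import random

N_FEATURES = 512  # number of values per row, as described above


def summarize_rows(csv_text, n_features=N_FEATURES):
    """Return (row_count, min_value, max_value); raise if a row has the wrong width."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)  # assumed: the first line holds the column names
    if len(header) != n_features:
        raise ValueError(f"header has {len(header)} columns, expected {n_features}")

    n_rows, lo, hi = 0, float("inf"), float("-inf")
    for line_no, row in enumerate(reader, start=2):
        if len(row) != n_features:
            raise ValueError(f"line {line_no} has {len(row)} values, expected {n_features}")
        values = [float(v) for v in row]
        lo = min(lo, min(values))
        hi = max(hi, max(values))
        n_rows += 1
    return n_rows, lo, hi


# Synthetic stand-in for the real input file (hypothetical data, not my radar data).
random.seed(0)
header = ",".join(str(i) for i in range(N_FEATURES))
rows = [
    ",".join(f"{random.uniform(-5000, 5000):.1f}" for _ in range(N_FEATURES))
    for _ in range(3)
]
sample_csv = "\n".join([header] + rows)

n_rows, lo, hi = summarize_rows(sample_csv)
print(n_rows, lo, hi)
```

The printed range matters because these raw values are fed into the network without any scaling in the code further down.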

Label file

Each row of this file contains two numbers, X and Y, but only the Y column is used. The values in this column are the target labels.

| X | Y |
|---|---|
| 1 | 211 |
| 3 | 212 |
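Since the Y values are used directly as class labels, they need to be integers that fit the 512 outputs of the model in my code below. A small sketch of that check, assuming the labels are zero-based positions in the range 0 to 511 (the helper `check_labels` is hypothetical, written only for this post):

```python
NUM_CLASSES = 512  # size of the output layer in the model below


def check_labels(labels, num_classes=NUM_CLASSES):
    """Return the sorted distinct labels, raising if any falls outside [0, num_classes)."""
    bad = [y for y in labels if not (isinstance(y, int) and 0 <= y < num_classes)]
    if bad:
        raise ValueError(f"{len(bad)} label(s) outside [0, {num_classes}): {bad[:5]}")
    return sorted(set(labels))


sample_y = [211, 212]  # the two Y values shown in the table above
print(check_labels(sample_y))
```

On the real data this would be called with the full Y column converted to a plain list of Python integers.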

Code

As I said earlier, I’m using the Skorch library to remove a lot of the boilerplate code. I have tried using PyTorch directly, but I had similar issues where the accuracy didn’t change from epoch to epoch. This makes me think that either my model/layers are wrong or I’m making a mistake in how I provide the data to the model. The code I’m using looks like this:

```python
import torch
import torch.nn as nn
import pandas as pd
import skorch
import torch.nn.functional as F

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

# Read input data
data = pd.read_csv("project-input.csv", index_col=None)
X = data

# Read prediction data
predictions = pd.read_csv("project-predictions.csv", index_col=None)
y = predictions['Y']

# The model
class Multiclass(nn.Module):
    def __init__(self, num_outputs=512):
        super(Multiclass, self).__init__()
        self.dropout = nn.Dropout(0.5)
        self.hidden = nn.Linear(512, 2048)
        self.output = nn.Linear(2048, 512)

    def forward(self, x):
        x = x.reshape(-1, self.hidden.in_features)
        x = F.relu(self.hidden(x))
        x = self.dropout(x)
        x = F.softmax(self.output(x), dim=-1)
        return x

net = skorch.classifier.NeuralNetClassifier(
    module=Multiclass,
    device=device
)

X = torch.tensor(X.values, dtype=torch.float32)
y = torch.tensor(y.values, dtype=torch.int64)

X = X.to(device)
y = y.to(device)

net.fit(X, y)
```

This runs, but the accuracy does not improve.

```
  epoch    train_loss    valid_acc    valid_loss      dur
-------  ------------  -----------  ------------  -------
      1        6.2400       0.0008        6.2409  20.5180
      2        6.2403       0.0008        6.2409  17.4280
      3        6.2403       0.0008        6.2409  18.2130
      4        6.2400       0.0008        6.2409  19.0680
      5        6.2402       0.0008        6.2409  20.5232
```
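For reference, the numbers above can be compared with what a model that guesses uniformly over all 512 classes would score. This is only a back-of-the-envelope check, assuming the reported loss is a negative log-likelihood over 512 classes; it is not a diagnosis of the cause.

```python
import math

NUM_CLASSES = 512  # size of the output layer in my model

# Negative log-likelihood when every class gets probability 1/512.
uniform_loss = -math.log(1.0 / NUM_CLASSES)

# Expected accuracy of a uniform random guess (assumes balanced labels).
chance_accuracy = 1.0 / NUM_CLASSES

print(f"{uniform_loss:.4f}")     # 6.2383
print(f"{chance_accuracy:.4f}")  # 0.0020
```

The loss of about 6.24 in my log is very close to this uniform baseline, so the network seems to be doing no better than guessing.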

I’m hoping there is an obvious mistake that someone can spot. As I said earlier, I’m mostly familiar with the MLPClassifier, so the most I’ve previously experimented with is the hidden layers.