Hi there! I am trying to train a classifier with 9 labels. The data is split into train, val and test sets, and the number of training images per label is listed below. Even with deep networks the results barely change: I have tried ResNet-18 through ResNet-152, VGG16 and AlexNet, and none of them gives high accuracy. I also don't understand why the accuracy jumps around so unpredictably from epoch to epoch. Training for more epochs doesn't change the accuracy much either, and tuning the optimizer parameters hasn't helped. I am building a Flask application around this model, but I can't continue until I find the issue. Here is the training output:

```
Epoch 0/24
----------
train Loss: 1.9129 Acc: 0.2992
val Loss: 1.2543 Acc: 0.6109

Epoch 1/24
----------
train Loss: 1.7522 Acc: 0.3500
val Loss: 1.0652 Acc: 0.6610

Epoch 2/24
----------
train Loss: 1.6867 Acc: 0.3759
val Loss: 1.0986 Acc: 0.6471

Epoch 3/24
----------
train Loss: 1.6434 Acc: 0.3972
val Loss: 2.9469 Acc: 0.5304

Epoch 4/24
----------
train Loss: 1.6174 Acc: 0.3987
val Loss: 1.6511 Acc: 0.4928

Epoch 5/24
----------
train Loss: 1.5869 Acc: 0.4187
val Loss: 1.2147 Acc: 0.6512

Epoch 6/24
----------
train Loss: 1.5569 Acc: 0.4254
val Loss: 1.1117 Acc: 0.6646

Epoch 7/24
----------
train Loss: 1.5612 Acc: 0.4304
val Loss: 0.9236 Acc: 0.6832

Epoch 8/24
----------
train Loss: 1.5131 Acc: 0.4396
val Loss: 1.0802 Acc: 0.6827

Epoch 9/24
----------
train Loss: 1.5151 Acc: 0.4396
val Loss: 1.0663 Acc: 0.6914

Epoch 10/24
----------
train Loss: 1.4907 Acc: 0.4433
val Loss: 0.9161 Acc: 0.6904

Epoch 11/24
----------
train Loss: 1.4973 Acc: 0.4532
val Loss: 1.3146 Acc: 0.6558

Epoch 12/24
----------
train Loss: 1.4802 Acc: 0.4537
val Loss: 1.0379 Acc: 0.6739

Epoch 13/24
----------
train Loss: 1.4678 Acc: 0.4531
val Loss: 1.1062 Acc: 0.6481

Epoch 14/24
----------
train Loss: 1.4542 Acc: 0.4602
val Loss: 1.0109 Acc: 0.6899

Epoch 15/24
----------
train Loss: 1.4302 Acc: 0.4687
val Loss: 0.8217 Acc: 0.7090

Epoch 16/24
----------
train Loss: 1.4087 Acc: 0.4782
val Loss: 0.9820 Acc: 0.6889

Epoch 17/24
----------
train Loss: 1.4056 Acc: 0.4847
val Loss: 1.0069 Acc: 0.6749

Epoch 18/24
----------
train Loss: 1.4144 Acc: 0.4670
val Loss: 0.8460 Acc: 0.7054

Epoch 19/24
----------
train Loss: 1.3993 Acc: 0.4872
val Loss: 0.9765 Acc: 0.6889

Epoch 20/24
----------
train Loss: 1.3896 Acc: 0.4889
val Loss: 0.8044 Acc: 0.7198

Epoch 21/24
----------
train Loss: 1.3591 Acc: 0.4913
val Loss: 0.8682 Acc: 0.6956

Epoch 22/24
----------
train Loss: 1.3473 Acc: 0.5065
val Loss: 0.8547 Acc: 0.7007

Epoch 23/24
----------
train Loss: 1.3593 Acc: 0.4918
val Loss: 0.9752 Acc: 0.6914

Epoch 24/24
----------
train Loss: 1.3354 Acc: 0.5024
val Loss: 0.8254 Acc: 0.7059

Training complete in 46m 18s
Best val Acc: 0.719814
```
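To look at the epoch-to-epoch swings more closely, the printed log can be turned back into numbers. This is a small standard-library sketch; `parse_log` is a hypothetical helper that assumes the exact `train Loss: ... Acc: ...` / `val Loss: ... Acc: ...` line format printed by `train_model`, and the excerpt is epochs 2 and 3 of the log above.

```python
import re

# Matches lines such as "val Loss: 1.0986 Acc: 0.6471"
LINE = re.compile(r"^(train|val) Loss: ([\d.]+) Acc: ([\d.]+)$")


def parse_log(text):
    """Collect per-epoch loss and accuracy for each phase from the printed log."""
    history = {"train": {"loss": [], "acc": []}, "val": {"loss": [], "acc": []}}
    for line in text.splitlines():
        m = LINE.match(line.strip())
        if m:
            phase = m.group(1)
            history[phase]["loss"].append(float(m.group(2)))
            history[phase]["acc"].append(float(m.group(3)))
    return history


log_excerpt = """
Epoch 2/24
----------
train Loss: 1.6867 Acc: 0.3759
val Loss: 1.0986 Acc: 0.6471

Epoch 3/24
----------
train Loss: 1.6434 Acc: 0.3972
val Loss: 2.9469 Acc: 0.5304
"""

h = parse_log(log_excerpt)
val_acc = h["val"]["acc"]
# Difference between consecutive epochs' validation accuracy
changes = [b - a for a, b in zip(val_acc, val_acc[1:])]
print("val acc:", val_acc)
print("epoch-to-epoch change:", [round(c, 4) for c in changes])
print("val above train in every epoch:",
      all(v > t for v, t in zip(val_acc, h["train"]["acc"])))
```

Pasting the whole log in gives 25 values per phase, which can then be plotted, e.g. with `plt.plot(h["val"]["acc"])`. Keep in mind that the two accuracies are not measured under the same conditions: training accuracy comes from `RandomResizedCrop`/`RandomHorizontalFlip` images with the model in `train()` mode, while validation accuracy comes from `Resize`/`CenterCrop` images in `eval()` mode.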

Here is my classification report:

```
              precision    recall  f1-score   support

          AK       0.13      0.65      0.22       161
         BCC       0.39      0.34      0.37       500
         BKL       0.14      0.09      0.11       393
          DF       1.00      0.10      0.18        50
         SCC       0.13      0.24      0.17        98
        VASC       0.60      0.48      0.53        73
    melanoma       0.45      0.63      0.53       762
       nevus       0.49      0.38      0.43       901
     unknown       0.98      0.81      0.89      3126

    accuracy                           0.62      6064
   macro avg       0.48      0.41      0.38      6064
weighted avg       0.70      0.62      0.64      6064
```
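The gap between the macro and weighted rows comes from how they average the per-class numbers: the macro average counts every class equally, while the weighted average weights each class by its support, so "unknown" (3126 of 6064 samples) dominates it. A minimal plain-Python sketch that recomputes both from the rounded two-decimal rows of the report; because of that rounding, the recomputed values can differ from sklearn's by about 0.01 (weighted recall comes out as 0.61 instead of 0.62). For single-label classification, the weighted recall equals the overall accuracy.

```python
# Per-class rows copied from the classification report:
# label: (precision, recall, f1-score, support)
report = {
    "AK": (0.13, 0.65, 0.22, 161),
    "BCC": (0.39, 0.34, 0.37, 500),
    "BKL": (0.14, 0.09, 0.11, 393),
    "DF": (1.00, 0.10, 0.18, 50),
    "SCC": (0.13, 0.24, 0.17, 98),
    "VASC": (0.60, 0.48, 0.53, 73),
    "melanoma": (0.45, 0.63, 0.53, 762),
    "nevus": (0.49, 0.38, 0.43, 901),
    "unknown": (0.98, 0.81, 0.89, 3126),
}


def macro_avg(rows, col):
    """Unweighted mean over classes: every class counts equally."""
    return sum(r[col] for r in rows.values()) / len(rows)


def weighted_avg(rows, col):
    """Support-weighted mean: classes with many samples dominate."""
    total = sum(r[3] for r in rows.values())
    return sum(r[col] * r[3] for r in rows.values()) / total


total_support = sum(r[3] for r in report.values())
for name, col in [("precision", 0), ("recall", 1), ("f1-score", 2)]:
    print(f"{name:>9}  macro={macro_avg(report, col):.2f}  "
          f"weighted={weighted_avg(report, col):.2f}")
print(f"'unknown' share of support: {report['unknown'][3] / total_support:.2f}")
```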

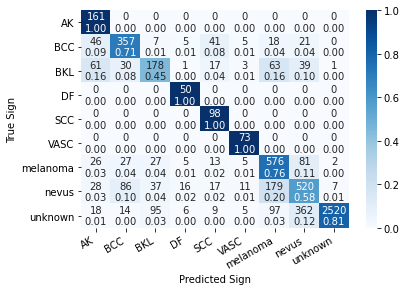

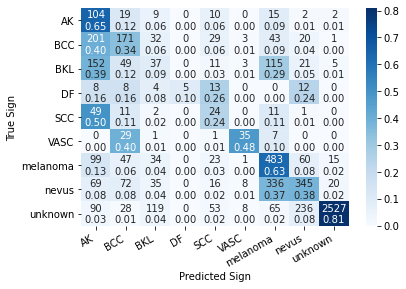

confusion matrix
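The post doesn't show how the report and confusion matrix were computed. A minimal sketch of collecting predictions over a loader, assuming the model and loaders from the code below; `collect_predictions` is a hypothetical helper, and the tiny linear model and random tensors only stand in for the real ResNet and test set.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


@torch.no_grad()  # no gradients are needed for evaluation
def collect_predictions(model, loader, device):
    """Return (true labels, predicted labels) for every sample in `loader`."""
    model.eval()  # BatchNorm uses its running statistics, dropout is disabled
    y_true, y_pred = [], []
    for inputs, labels in loader:
        outputs = model(inputs.to(device))
        y_pred.extend(outputs.argmax(dim=1).cpu().tolist())
        y_true.extend(labels.tolist())
    return y_true, y_pred


# Hypothetical stand-ins for the real model and test loader
torch.manual_seed(0)
toy_model = nn.Linear(4, 3)
toy_data = TensorDataset(torch.randn(10, 4), torch.randint(0, 3, (10,)))
toy_loader = DataLoader(toy_data, batch_size=4, shuffle=False)

y_true, y_pred = collect_predictions(toy_model, toy_loader, torch.device("cpu"))
print(len(y_true), len(y_pred))
```

With the real objects, `sklearn.metrics.classification_report(y_true, y_pred, target_names=class_names)` and `sklearn.metrics.confusion_matrix(y_true, y_pred)` produce tables like the ones above. If the test loader uses `shuffle=False`, position `i` in `y_true` corresponds to `image_dataset['test'].samples[i]`, which makes it easy to look at the misclassified files.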

Value counts of each label in the training data:

- melanoma: 883
- nevus: 889
- BCC: 880
- AK: 867
- SCC: 628
- unknown: 773
- VASC: 253
- BKL: 239
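The list gives eight of the nine classes (DF is not listed), and the class mix differs noticeably between the training counts and the report's support column: "unknown" is 773 of the 5412 listed training images (about 14%) but 3126 of the 6064 samples in the report (about 52%). A small sketch for comparing class proportions between splits; `counts_from_targets` is a hypothetical helper, the training shares are computed over the eight listed classes only, and the report's support is assumed to describe whichever split the report was computed on.

```python
from collections import Counter

# Training counts as listed above (DF is not included in that list)
train_counts = {"melanoma": 883, "nevus": 889, "BCC": 880, "AK": 867,
                "SCC": 628, "unknown": 773, "VASC": 253, "BKL": 239}
# Support column of the classification report
eval_counts = {"AK": 161, "BCC": 500, "BKL": 393, "DF": 50, "SCC": 98,
               "VASC": 73, "melanoma": 762, "nevus": 901, "unknown": 3126}


def counts_from_targets(targets, classes):
    """Hypothetical helper: per-class counts from integer labels (e.g. ImageFolder.targets)."""
    per_index = Counter(targets)
    return {name: per_index.get(i, 0) for i, name in enumerate(classes)}


def class_shares(counts):
    """Fraction of the split that each class makes up."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


train_share = class_shares(train_counts)
eval_share = class_shares(eval_counts)
for name in sorted(eval_counts):
    # DF has no training count in the list, so show n/a instead of a number
    train_col = f"{train_share[name]:.3f}" if name in train_share else "  n/a"
    print(f"{name:>9}  train={train_col}  eval={eval_share[name]:.3f}")
```

With the datasets from the code below, `counts_from_targets(image_dataset['train'].targets, image_dataset['train'].classes)` would give the actual per-folder counts for all nine classes, DF included.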

And here is my code:

```python
import copy
import os
import time

import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
import torchvision
from torch.optim import lr_scheduler
from torchvision import datasets, models, transforms
from tqdm import tqdm

# Named data_transforms so the dict does not shadow the torchvision `transforms` module
data_transforms = {
    'train': transforms.Compose([
        transforms.RandomResizedCrop(224),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
    'val': transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
    'test': transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
}

# Generate the datasets and the loaders
data_dir = '../input/data-final/data'

# ImageFolder reads each split's class sub-folders and applies that split's transforms
image_dataset = {x: datasets.ImageFolder(os.path.join(data_dir, x), transform=data_transforms[x])
                 for x in ['train', 'val', 'test']}

# Wrap each split in a DataLoader; only the training data needs shuffling
dataloaders = {x: torch.utils.data.DataLoader(image_dataset[x], batch_size=16,
                                              shuffle=(x == 'train'), num_workers=4)
               for x in ['train', 'val', 'test']}

# Class names, taken from the sub-folder names
class_names = image_dataset['train'].classes

# Number of images in each split
data_size = {x: len(image_dataset[x]) for x in ['train', 'val', 'test']}

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')


# Create the model: ImageNet-pretrained ResNet-152 with a new final layer
# (the pretrained weights are downloaded only the first time)
def create_model(n_classes):
    model = models.resnet152(pretrained=True)
    n_features = model.fc.in_features
    model.fc = nn.Linear(n_features, n_classes)
    return model.to(device)


base_model = create_model(n_classes=9)


# Train the model, keeping the weights with the best validation accuracy
def train_model(model, criterion, optimizer, num_epochs=25):
    since = time.time()
    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0

    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch, num_epochs - 1))
        print('-' * 10)

        # Each epoch has a training phase and a validation phase
        for phase in ['train', 'val']:
            if phase == 'train':
                model.train()
            else:
                model.eval()

            running_loss = 0.0
            running_corrects = 0

            for inputs, labels in dataloaders[phase]:
                inputs = inputs.to(device)
                labels = labels.to(device)
                optimizer.zero_grad()

                # Track gradients only in the training phase
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    _, preds = torch.max(outputs, 1)
                    loss = criterion(outputs, labels)
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels.data)

            epoch_loss = running_loss / data_size[phase]
            epoch_acc = running_corrects.double() / data_size[phase]
            print('{} Loss: {:.4f} Acc: {:.4f}'.format(phase, epoch_loss, epoch_acc))

            # Keep a copy of the best weights seen so far on the validation set
            if phase == 'val' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())
        print()

    time_elapsed = time.time() - since
    print('Training complete in {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))
    print('Best val Acc: {:4f}'.format(best_acc))

    model.load_state_dict(best_model_wts)
    return model


# Note: no parameters are frozen here (requires_grad is left True), so every layer
# of base_model is trained. The new final layer and the move to the GPU are done
# in create_model().

# Set the loss function
criterion = nn.CrossEntropyLoss()

# Set the optimizer (no learning-rate scheduler is used) and train the model
optimizer_conv = optim.Adam(base_model.parameters(), lr=0.001)
model_resnet = train_model(base_model, criterion, optimizer_conv, num_epochs=25)
```
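The original comments before `criterion` describe setting `requires_grad` to False on each parameter (a fixed-feature-extractor setup), but the code passes all of `base_model.parameters()` to Adam, so every layer is trained. For comparison, a sketch of what those comments describe, under stated assumptions: `freeze_all_but_head` is a hypothetical helper, and `TinyNet` is a small stand-in for a torchvision ResNet that, like it, names its final layer `fc`.

```python
import torch
import torch.nn as nn
import torch.optim as optim


def freeze_all_but_head(model, head_name="fc"):
    """Disable gradients for every parameter outside model.<head_name>.
    Returns the parameters that remain trainable."""
    for name, param in model.named_parameters():
        param.requires_grad = name.startswith(head_name + ".")
    return [p for p in model.parameters() if p.requires_grad]


class TinyNet(nn.Module):
    """Hypothetical stand-in for a ResNet: a small backbone plus an `fc` head."""

    def __init__(self, n_classes=9):
        super().__init__()
        self.backbone = nn.Sequential(nn.Linear(16, 8), nn.ReLU())
        self.fc = nn.Linear(8, n_classes)

    def forward(self, x):
        return self.fc(self.backbone(x))


model = TinyNet()
trainable = freeze_all_but_head(model)
# Only the head's parameters are handed to the optimizer
optimizer = optim.Adam(trainable, lr=0.001)
print(sum(p.numel() for p in trainable), "trainable parameters")
```

With the real model this would be `freeze_all_but_head(base_model)` after `create_model`, passing the returned list to `optim.Adam` in place of `base_model.parameters()`. Whether a frozen or fully fine-tuned backbone works better for this data is something the training runs would have to show.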

Any support is appreciated.