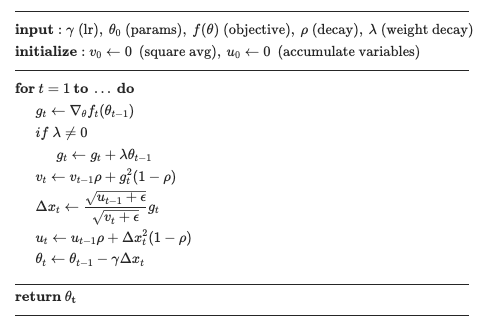

This is an image from Torch’s documentation…!

Adadelta’s original paper completely removed learning rate and uses RMS( Parameter updates ) instead . But torch’s implementation has an additional learning rate added to it… Any particular reason why this was done?