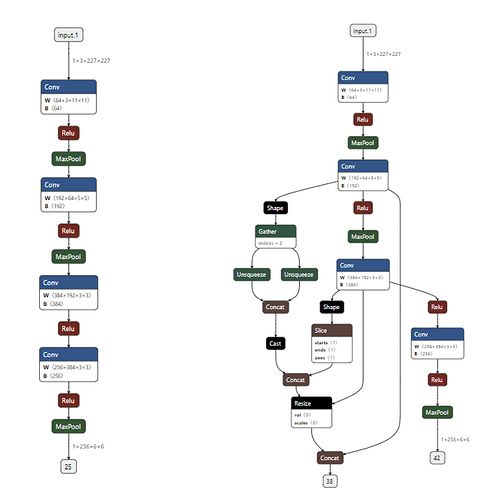

I have an (example) model, shown on the left of the picture. The model is already trained, and I have its model_state_dict file.

I would actually like to add new layers to this trained model, as shown on the right of the picture. There I add a new output by concatenating two different feature maps, after resizing the second feature map to the spatial size of the first.

The code I have in mind is something like this:

    import torch
    import torch.nn.functional as F

    def forward(self, x: torch.Tensor) -> tuple[torch.Tensor, torch.Tensor]:
        x = self.features1(x)
        out1 = x
        size = out1.shape[2:]  # target spatial size (H, W), taken from out1
        x = self.features2(x)
        out2 = F.interpolate(x, size=size, mode='nearest')  # resize features2 output to match out1
        outA = torch.cat([out1, out2], dim=1)  # concatenate the two feature maps along channels
        x = self.features3(x)
        # return x
        return x, outA

So, I’d like to prepare the new model (the right one) without any additional training. I guess that the new layers do not introduce any new trainable parameters: the only extra value needed is the scaling size, which is already determined by the dimensions of the features.

So, I guess that the model_state_dict file trained with the former model (the left one) could be loaded into the new model, although the layer names may have to be adjusted so that they match.

Then, I guess the new model would be ready to use.
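To make the idea concrete, here is a minimal sketch of what I mean. The layers inside `features1`, `features2`, and `features3` are hypothetical toy layers (my real ones are different), and `OldModel`, `NewModel`, and `make_features` are names I made up for illustration. The old model's `state_dict()` stands in for the saved model_state_dict file.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def make_features():
    # Hypothetical toy layers; the real features1/2/3 are not shown here.
    f1 = nn.Sequential(nn.Conv2d(3, 8, 3, padding=1), nn.ReLU())
    f2 = nn.Sequential(nn.MaxPool2d(2), nn.Conv2d(8, 16, 3, padding=1), nn.ReLU())
    f3 = nn.Sequential(nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(16, 10))
    return f1, f2, f3


class OldModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.features1, self.features2, self.features3 = make_features()

    def forward(self, x):
        return self.features3(self.features2(self.features1(x)))


class NewModel(OldModel):
    # Same submodules with the same attribute names; only forward() changes.
    def forward(self, x):
        out1 = self.features1(x)
        x = self.features2(out1)
        # interpolate and cat have no parameters, so the state_dict is unchanged
        out2 = F.interpolate(x, size=out1.shape[2:], mode="nearest")
        out_a = torch.cat([out1, out2], dim=1)
        return self.features3(x), out_a


torch.manual_seed(0)
old = OldModel().eval()
new = NewModel().eval()

state = old.state_dict()             # stands in for the saved model_state_dict file
result = new.load_state_dict(state)  # strict=True by default

x = torch.randn(2, 3, 32, 32)
with torch.no_grad():
    y_old = old(x)
    y_new, out_a = new(x)
```

In this sketch the submodule names are identical in both models, so the keys match and no renaming is needed. If the attribute names differed, I assume the keys of the loaded dict would have to be renamed first, since `load_state_dict` with the default `strict=True` raises an error on missing or unexpected keys.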

Is this correct? Is there any useful information on this kind of transformation/replacement?