Thank you! It works.

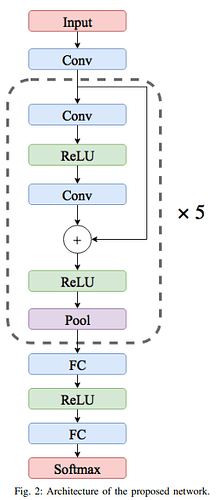

Some advice for anyone trying to reproduce https://arxiv.org/abs/1805.00794:

- Be careful with the `lr_scheduler`: it must be reset after each training epoch, and it must step once every 10k training samples.

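- The softmax after the second fully connected layer is not required. If you train with `nn.CrossEntropyLoss`, it already applies log-softmax to the raw outputs (logits).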
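A minimal sketch of the scheduler advice, assuming PyTorch's `StepLR` with `step_size=1` is rebuilt at the start of every epoch and stepped once per 10k samples seen. `train_one_epoch`, `BASE_LR`, `GAMMA` and `STEP_EVERY` are hypothetical names, and the learning-rate and decay values are placeholders rather than figures taken from the paper, so check it for the exact schedule.

```python
from torch.optim.lr_scheduler import StepLR

BASE_LR = 1e-3       # placeholder initial learning rate (assumption)
GAMMA = 0.75         # placeholder decay factor per step (assumption)
STEP_EVERY = 10_000  # decay once per 10k training samples


def train_one_epoch(model, optimizer, loader, loss_fn):
    """Train one epoch, multiplying the LR by GAMMA every STEP_EVERY samples.

    Returns the learning rate that was used for each batch.
    """
    # Reset: restore the base LR and build a fresh scheduler for this epoch.
    for group in optimizer.param_groups:
        group["lr"] = BASE_LR
    scheduler = StepLR(optimizer, step_size=1, gamma=GAMMA)

    seen, lrs = 0, []
    for x, y in loader:
        lrs.append(optimizer.param_groups[0]["lr"])
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()

        # Count samples, not batches: step once per 10k boundary crossed.
        before = seen
        seen += x.size(0)
        for _ in range(seen // STEP_EVERY - before // STEP_EVERY):
            scheduler.step()
    return lrs
```

Call it once per epoch, e.g. `for epoch in range(num_epochs): train_one_epoch(model, optimizer, train_loader, nn.CrossEntropyLoss())`. Counting samples rather than batches keeps the schedule independent of the batch size.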

This is the code to build the NN as described in the paper. Hope this helps!

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ResidualBlock(nn.Module):
    """Conv -> ReLU -> Conv, add the skip connection, ReLU, then max-pool.

    The skip connection is a plain addition, so in_channels must equal
    out_channels (32 everywhere in this network).
    """

    def __init__(self, in_channels, out_channels, kernel_size, stride, padding):
        super().__init__()
        # padding keeps the sequence length unchanged so the skip can be added
        self.conv1 = nn.Conv1d(in_channels, out_channels, kernel_size, padding=padding)
        # the second conv consumes conv1's output, hence out_channels as input
        self.conv2 = nn.Conv1d(out_channels, out_channels, kernel_size, padding=padding)
        # only the pooling layer downsamples (by `stride`)
        self.max_pool = nn.MaxPool1d(kernel_size, stride=stride)

    def forward(self, x):
        residual = x
        out = F.relu(self.conv1(x))
        out = self.conv2(out)
        out = out + residual  # out-of-place add avoids in-place autograd pitfalls
        return self.max_pool(F.relu(out))


class ECG_CNN(nn.Module):
    def __init__(self, num_classes=5):
        super().__init__()
        self.conv1 = nn.Conv1d(1, 32, kernel_size=5)
        self.res1 = ResidualBlock(32, 32, kernel_size=5, stride=2, padding=2)
        self.res2 = ResidualBlock(32, 32, kernel_size=5, stride=2, padding=2)
        self.res3 = ResidualBlock(32, 32, kernel_size=5, stride=2, padding=2)
        self.res4 = ResidualBlock(32, 32, kernel_size=5, stride=2, padding=2)
        self.res5 = ResidualBlock(32, 32, kernel_size=5, stride=2, padding=2)
        # for a 187-sample input: 32 channels x 2 time steps after five pools
        self.fc1 = nn.Linear(32 * 2, 32)
        self.fc2 = nn.Linear(32, num_classes)

    def forward(self, x):
        # x: (batch, 1, sequence_length)
        out = self.conv1(x)
        out = self.res1(out)
        out = self.res2(out)
        out = self.res3(out)
        out = self.res4(out)
        out = self.res5(out)
        out = torch.flatten(out, 1)
        out = F.relu(self.fc1(out))
        # raw logits: no softmax here (see the note on the softmax above)
        return self.fc2(out)
```
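To see where the `32*2` input size of `fc1` comes from, this sketch pushes a sequence length through `conv1` and the five residual blocks using the standard Conv1d/MaxPool1d output-length formula (dilation 1, default `ceil_mode=False`). The helper names are hypothetical, and the 187-sample input length is an assumption about the preprocessed heartbeats; if your beats have a different length, `fc1` has to change accordingly.

```python
def conv1d_out_len(length, kernel_size, stride=1, padding=0):
    """Output length of Conv1d or MaxPool1d with dilation 1, floor rounding."""
    return (length + 2 * padding - kernel_size) // stride + 1


def ecg_cnn_feature_len(input_len=187, kernel_size=5, n_blocks=5):
    """Time steps left after conv1 and n_blocks residual blocks."""
    length = conv1d_out_len(input_len, kernel_size)  # conv1 has no padding
    for _ in range(n_blocks):
        # the two padded convs keep the length; only the pool shrinks it
        length = conv1d_out_len(length, kernel_size, padding=kernel_size // 2)
        length = conv1d_out_len(length, kernel_size, stride=2)
    return length


print(32 * ecg_cnn_feature_len(187))  # prints 64, the in_features of fc1
```

For a 187-sample beat the length goes 187 → 183 → 90 → 43 → 20 → 8 → 2, so the flattened feature vector has 32 × 2 = 64 entries.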