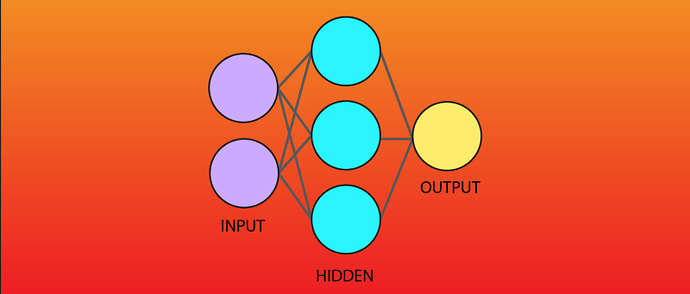

I am using ResNet34 for a multi-class classification problem, and I want to add three hidden layers on top of the ResNet.

To do this, I defined a new function `_hidden()` that builds the extra hidden layers, but this approach is not working for me.
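One thing worth checking with a `_hidden()`-style helper is whether the new layers are actually registered with the model. In PyTorch, a submodule's parameters are only tracked (and therefore trained) when the module is assigned to an attribute of `self`, typically in `__init__`. A `Sequential` that is built inside a helper on every call gets fresh random weights each time and is invisible to the optimizer. The minimal sketch below contrasts the two; the class names and layer sizes are illustrative only.

```python
import torch
import torch.nn as nn


class Registered(nn.Module):
    def __init__(self):
        super().__init__()
        # Assigned to self in __init__: parameters are registered and trainable.
        self.head = nn.Sequential(nn.Linear(28, 32), nn.ReLU(), nn.Linear(32, 28))

    def forward(self, x):
        return self.head(x)


class NotRegistered(nn.Module):
    def _hidden(self):
        # Built on every call: new random weights each time, never seen by the optimizer.
        return nn.Sequential(nn.Linear(28, 32), nn.ReLU(), nn.Linear(32, 28))

    def forward(self, x):
        return self._hidden()(x)


print(sum(p.numel() for p in Registered().parameters()))     # 1852
print(sum(p.numel() for p in NotRegistered().parameters()))  # 0
```

Both models run a forward pass without error, which is why the second pattern can fail silently: it simply never learns.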

```python
class Resnet4Channel(nn.Module):
    def __init__(self, encoder_depth=34, pretrained=True, num_classes=28):
        super().__init__()

        encoder = RESNET_ENCODERS[encoder_depth](pretrained=pretrained)
        num_final_in = encoder.fc.in_features
        encoder.fc = nn.Linear(num_final_in, num_classes)
        self.num_classes = num_classes

        # we initialize this conv to take in 4 channels instead of 3
        # we keep the corresponding weights and initialize the new weights with zeros
        # this trick taken from https://www.kaggle.com/iafoss/pretrained-resnet34-with-rgby-0-460-public-lb
        w = encoder.conv1.weight
        self.conv1 = nn.Conv2d(4, 64, kernel_size=7, stride=2, padding=3,
                               bias=False)
        self.conv1.weight = nn.Parameter(torch.cat((w, torch.zeros(64, 1, 7, 7)), dim=1))

        self.bn1 = encoder.bn1
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = encoder.layer1
        self.layer2 = encoder.layer2
        self.layer3 = encoder.layer3
        self.layer4 = encoder.layer4
        self.avgpool = encoder.avgpool
        self.fc = encoder.fc

    def _hidden():
        n_in, n_h1, n_h2, n_h3, n_out = self.num_classes, 32, 64, 64, self.num_classes

        model = nn.Sequential(nn.Linear(n_in, n_h1),
                              nn.ReLU(),
                              nn.Linear(n_h1, n_h2),
                              nn.ReLU(),
                              nn.Linear(n_h2, n_h3),
                              nn.ReLU(),
                              nn.Linear(n_h3, n_out),
                              nn.Softmax())
        return model

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        x = self.avgpool(x)
        x = x.view(x.size(0), -1)
        x = self.fc(x)
        x = self._hidden(x)
        return x
```