Hello,

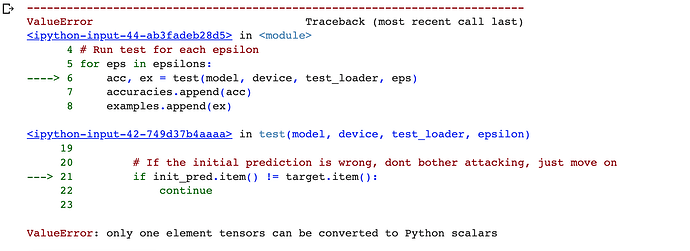

I tried to run an adversarial attack on my test images. My model is a ResNet-50 that I trained myself, and I copied the adversarial attack function from the FGSM tutorial on pytorch.org. I received an error and cannot work out what the problem is.

Thanks

from __future__ import print_function

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets, transforms
import numpy as np
import matplotlib.pyplot as plt

# NOTE: This is a hack to get around "User-agent" limitations when downloading MNIST datasets
# see, https://github.com/pytorch/vision/issues/3497 for more information
from six.moves import urllib
opener = urllib.request.build_opener()
opener.addheaders = [('User-agent', 'Mozilla/5.0')]
urllib.request.install_opener(opener)

epsilons = [0, .05, .1, .15, .2, .25, .3]
model = model.eval()

# FGSM attack code
def fgsm_attack(image, epsilon, data_grad):
    # Collect the element-wise sign of the data gradient
    sign_data_grad = data_grad.sign()
    # Create the perturbed image by adjusting each pixel of the input image
    perturbed_image = image + epsilon*sign_data_grad
    # Adding clipping to maintain [0,1] range
    perturbed_image = torch.clamp(perturbed_image, 0, 1)
    # Return the perturbed image
    return perturbed_image

def test(model, device, test_loader, epsilon):

    # Accuracy counter
    correct = 0
    adv_examples = []

    # Loop over all examples in test set
    for data, target in test_loader:

        # Send the data and label to the device
        data, target = data.to(device), target.to(device)

        # Set requires_grad attribute of tensor. Important for Attack
        data.requires_grad = True

        # Forward pass the data through the model
        output = model(data)
        init_pred = output.max(1, keepdim=True)[1] # get the index of the max log-probability

        # If the initial prediction is wrong, don't bother attacking, just move on
        if init_pred.item() != target.item():
            continue

        # Calculate the loss
        loss = F.nll_loss(output, target)

        # Zero all existing gradients
        model.zero_grad()

        # Calculate gradients of model in backward pass
        loss.backward()

        # Collect datagrad
        data_grad = data.grad.data

        # Call FGSM Attack
        perturbed_data = fgsm_attack(data, epsilon, data_grad)

        # Re-classify the perturbed image
        output = model(perturbed_data)

        # Check for success
        final_pred = output.max(1, keepdim=True)[1] # get the index of the max log-probability
        if final_pred.item() == target.item():
            correct += 1
            # Special case for saving 0 epsilon examples
            if (epsilon == 0) and (len(adv_examples) < 5):
                adv_ex = perturbed_data.squeeze().detach().cpu().numpy()
                adv_examples.append( (init_pred.item(), final_pred.item(), adv_ex) )
        else:
            # Save some adv examples for visualization later
            if len(adv_examples) < 5:
                adv_ex = perturbed_data.squeeze().detach().cpu().numpy()
                adv_examples.append( (init_pred.item(), final_pred.item(), adv_ex) )

    # Calculate final accuracy for this epsilon
    final_acc = correct/float(len(test_loader))
    print("Epsilon: {}\tTest Accuracy = {} / {} = {}".format(epsilon, correct, len(test_loader), final_acc))

    # Return the accuracy and an adversarial example
    return final_acc, adv_examples

accuracies = []
examples = []

# Run test for each epsilon
for eps in epsilons:
    acc, ex = test(model, device, test_loader, eps)
    accuracies.append(acc)
    examples.append(ex)
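A note on the listing above, offered as a guess rather than a diagnosis: the tutorial code was written for an MNIST model evaluated with a batch size of 1 and with log-probability outputs. The calls to `init_pred.item()` and `target.item()` only work on one-element tensors, and `F.nll_loss` expects log-probabilities, whereas a typical ResNet-50 returns raw logits. A minimal batch-safe sketch of the same FGSM step might look like the following. The names `fgsm_batch` and `toy_model` are hypothetical; the tiny linear classifier only stands in for the trained ResNet-50, and the inputs are assumed to lie in [0, 1].

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def fgsm_batch(model, data, target, epsilon):
    """Return FGSM-perturbed copies of a whole batch (inputs assumed in [0, 1])."""
    # Work on a fresh leaf tensor so that .grad is populated for the input
    data = data.clone().detach().requires_grad_(True)
    output = model(data)                    # raw logits, shape (N, num_classes)
    loss = F.cross_entropy(output, target)  # applies log_softmax internally
    model.zero_grad()
    loss.backward()
    # Same update as fgsm_attack: step along the sign of the input gradient
    perturbed = data + epsilon * data.grad.sign()
    return torch.clamp(perturbed, 0, 1).detach()


# Hypothetical stand-in for the trained ResNet-50
torch.manual_seed(0)
toy_model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 10)).eval()
images = torch.rand(4, 3, 8, 8)        # batch of 4 fake RGB images
labels = torch.randint(0, 10, (4,))    # fake class labels

adv = fgsm_batch(toy_model, images, labels, epsilon=0.1)

# Per-sample comparison instead of .item(), so any batch size works
correct = (toy_model(adv).argmax(dim=1) == labels).sum().item()
```

If this guess does not match the actual traceback, setting `batch_size=1` in the test `DataLoader` is another way to check whether the batch size is involved.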

The error is: