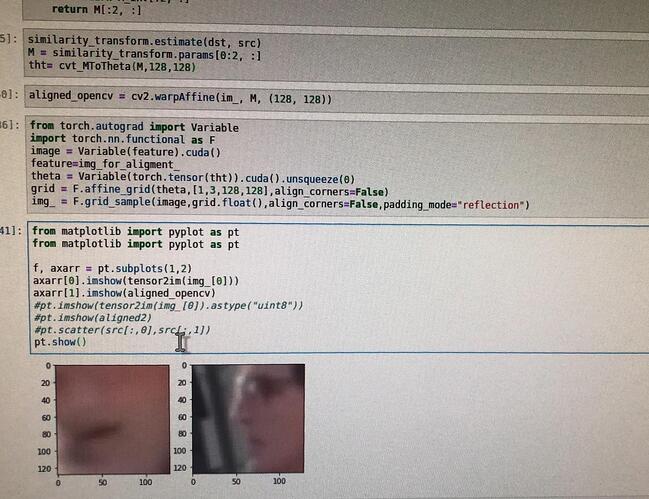

I tried this code for alignment, but the output does not seem to match what OpenCV produces.

But is F.affine_grid really expecting values in the range [-1,1], or is it F.grid_sample (which is typically called after affine_grid) that expects grids in this range?

Strictly speaking one, but F.grid_sample would pad for values outside of [-1, 1]. Unsure if I’ve mixed it up with affine_grid in a previous post.
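To make the padding behaviour concrete, here is a minimal sketch (the tensor sizes and the shift of 10 are arbitrary choices of mine, not from the posts above). It checks that an identity theta yields a grid inside [-1, 1] that reproduces the input, and that sample locations far outside [-1, 1] fall back to the padding value.

```python
import torch
import torch.nn.functional as F

# Small test image: batch of 1, 1 channel, 4x4 pixels.
img = torch.arange(16, dtype=torch.float32).reshape(1, 1, 4, 4)

# Identity affine transform, shape (N, 2, 3).
theta = torch.tensor([[[1.0, 0.0, 0.0],
                       [0.0, 1.0, 0.0]]])

# affine_grid returns normalized sampling locations, shape (N, H, W, 2).
grid = F.affine_grid(theta, img.shape, align_corners=False)
grid_max = grid.abs().max().item()

# Sampling with the identity grid should give back the input image.
out = F.grid_sample(img, grid, align_corners=False)
identity_ok = torch.allclose(out, img, atol=1e-4)

# Push every sampling location far outside [-1, 1]; with
# padding_mode="zeros" the output is filled with zeros.
shifted = grid + 10.0
out_pad = F.grid_sample(img, shifted, padding_mode="zeros", align_corners=False)
all_padded = bool((out_pad == 0).all())

print(grid_max, identity_ok, all_padded)
```

So grid_sample accepts grid values outside [-1, 1] without raising an error; what ends up in those output pixels depends on padding_mode.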

I went down the rabbit hole in [1], and as far as I understand, the theta values (the input to affine_grid) should also lie in [-1,1]. In one of my experiments where I learn the theta values, I noticed that F.grid_sample produced wild image transformations if the thetas were unbounded. That makes sense, because theta and the [-1,1] linspace base grid built in affine_grid are matrix-multiplied (see [1]), potentially leading to many values outside of [-1,1] in the image projections. I bounded the thetas with torch.tanh and the transformations now look decent. I do have to complain though: the docstrings of these two functions could really be improved in terms of which value ranges are expected.
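A rough sketch of that reasoning, under my own assumptions (the raw theta values and the 8x8 output size are made up for illustration): it rebuilds the grid by hand as base grid times theta, compares it with F.affine_grid, and then shows the effect of squashing theta with torch.tanh.

```python
import torch
import torch.nn.functional as F

H, W = 8, 8
# Hypothetical unbounded theta, e.g. the raw output of a learned layer.
raw_theta = torch.tensor([[[3.0, -2.0, 5.0],
                           [0.5, 4.0, -1.0]]])

# Base grid of pixel centers in [-1, 1] (align_corners=False convention).
xs = (2 * torch.arange(W, dtype=torch.float32) + 1) / W - 1
ys = (2 * torch.arange(H, dtype=torch.float32) + 1) / H - 1
yy, xx = torch.meshgrid(ys, xs, indexing="ij")
base = torch.stack([xx, yy, torch.ones_like(xx)], dim=-1)  # (H, W, 3)

# The sampling grid is the base grid matrix-multiplied with theta.
manual = base @ raw_theta[0].T  # (H, W, 2)
grid_raw = F.affine_grid(raw_theta, (1, 1, H, W), align_corners=False)
same_as_manual = torch.allclose(manual, grid_raw[0], atol=1e-5)

# tanh keeps every theta entry in (-1, 1), so each grid coordinate is
# bounded in magnitude by 3 (sum of three terms) instead of being unbounded.
theta_bounded = torch.tanh(raw_theta)
grid_bounded = F.affine_grid(theta_bounded, (1, 1, H, W), align_corners=False)

frac_outside_raw = (grid_raw.abs() > 1).float().mean().item()
frac_outside_bounded = (grid_bounded.abs() > 1).float().mean().item()
print(same_as_manual, frac_outside_raw, frac_outside_bounded)
```

Note that tanh does not guarantee the resulting grid stays inside [-1, 1]; it only limits how far outside it can go, which may be why the transformations look tamer.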

[1] pytorch/AffineGridGenerator.cpp at master · pytorch/pytorch · GitHub

I don’t think this is right. Not sure what a negative scale factor (diagonal elements in 3x3 matrix) would even mean…
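For what it's worth, a negative diagonal entry can be tried out directly. The sketch below is my own example, using the 2x3 theta that affine_grid takes rather than a full 3x3 matrix: it sets the x scale to -1 and compares the result with a horizontal flip.

```python
import torch
import torch.nn.functional as F

# Small test image: batch of 1, 1 channel, 3 rows, 4 columns.
img = torch.arange(12, dtype=torch.float32).reshape(1, 1, 3, 4)

# Scale of -1 on x, +1 on y, no translation.
theta_flip = torch.tensor([[[-1.0, 0.0, 0.0],
                            [0.0, 1.0, 0.0]]])

grid = F.affine_grid(theta_flip, img.shape, align_corners=False)
flipped = F.grid_sample(img, grid, mode="bilinear", align_corners=False)

# Compare against an explicit flip along the width dimension.
matches_flip = torch.allclose(flipped, torch.flip(img, dims=[3]), atol=1e-4)
print(matches_flip)
```

In this setup a negative scale on x behaves like a mirror along the width axis, so negative values are at least meaningful to the sampler, whether or not they are desirable for alignment.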