Hi all,

I want to rotate an image about a specific point. First I create the transformation matrices for moving the center point to the origin, rotating, and then moving back to the original point; then I apply the transform using the `affine_grid` and `grid_sample` functions. But the resulting image is not what it should be. I tested the same parameters by applying them to the image with scipy, and that works.
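Written out in homogeneous coordinates, the move, rotate, move-back composition described above is the following, where $c = (c_x, c_y)$ is the rotation center in whatever coordinate system the matrices are expressed in:

$$
T(c)\,R(\theta)\,T(-c) =
\begin{pmatrix}
\cos\theta & -\sin\theta & c_x - c_x\cos\theta + c_y\sin\theta \\
\sin\theta & \cos\theta & c_y - c_x\sin\theta - c_y\cos\theta \\
0 & 0 & 1
\end{pmatrix}
$$

The translation column is $c - R(\theta)\,c$, which is exactly what keeps $c$ fixed under the rotation.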

import torch.nn.functional as F

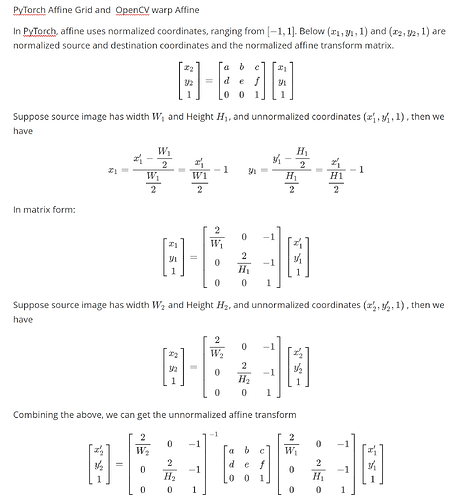

mat_move = torch.eye(3)

mat_move[0,2] = -x_center*2/my_image.shape[2]

mat_move[1,2] = -y_center*2/my_image.shape[3]

mat_rotate = torch.eye(3)

mat_rotate[0, 0] = cos_theta[0][0]

mat_rotate[0, 1] = -sin_theta[0][0]

mat_rotate[1, 0] = sin_theta[0][0]

mat_rotate[1, 1] = cos_theta[0][0]

mat_move_back = torch.eye(3)

mat_move_back[0,2] = x_center*2/my_image.shape[2]

mat_move_back[1,2] = y_center*2/my_image.shape[3]

rigid_transform = torch.mm(mat_move_back, torch.mm(mat_rotate, mat_move))

M = Variable(torch.zeros([1, 2, 3])).cuda()

M[0, 0, 0] = rigid_transform[0, 0]

M[0, 0, 1] = rigid_transform[0, 1]

M[0, 0, 2] = rigid_transform[0, 2]

M[0, 1, 0] = rigid_transform[1, 0]

M[0, 1, 1] = rigid_transform[1, 1]

M[0, 1, 2] = rigid_transform[1, 2]

grid = F.affine_grid(M, vertebrae.size())

vertebrae = F.grid_sample(vertebrae.float(), grid)
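A minimal sketch of how `theta` could be built to match `affine_grid`'s conventions, assuming `x_center` is a column index and `y_center` a row index in pixels, and the angle is in radians. Compared with the code above, it changes four things that could explain the mismatch: `affine_grid`'s x axis runs along the width (`shape[3]`) and y along the height (`shape[2]`); normalized coordinates span [-1, 1] with 0 at the image center, so a pixel position needs an offset as well as a scale; `theta` maps output locations to input locations, so it carries the inverse rotation; and on non-square images the rotation has to be conjugated by the per-axis scaling to avoid shear. The helper names are hypothetical, and the sketch is plain Python so the math can be checked without a GPU.

```python
import math


def pixel_to_normalized(p, size, align_corners=False):
    """Convert a pixel index along an axis of length `size` to [-1, 1] grid coordinates."""
    if align_corners:
        return 2.0 * p / (size - 1) - 1.0
    return (2.0 * p + 1.0) / size - 1.0


def normalized_to_pixel(n, size, align_corners=False):
    """Inverse of pixel_to_normalized."""
    if align_corners:
        return (n + 1.0) * (size - 1) / 2.0
    return ((n + 1.0) * size - 1.0) / 2.0


def rotation_theta(cx, cy, angle, height, width, align_corners=False):
    """Hypothetical helper: 2x3 theta for affine_grid that rotates an image by
    `angle` radians about pixel (cx, cy), where cx is a column index (width axis)
    and cy is a row index (height axis). With rows increasing downward, a
    positive angle turns the content clockwise on screen."""
    # Normalized units per pixel differ per axis unless the image is square.
    if align_corners:
        ax, ay = 2.0 / (width - 1), 2.0 / (height - 1)
    else:
        ax, ay = 2.0 / width, 2.0 / height
    # Rotation center in normalized coordinates: x runs along width, y along height.
    ncx = pixel_to_normalized(cx, width, align_corners)
    ncy = pixel_to_normalized(cy, height, align_corners)
    # theta maps OUTPUT locations to INPUT locations, so it needs the inverse
    # rotation R(-angle) = [[c, s], [-s, c]], conjugated by the per-axis
    # scaling diag(ax, ay) so that non-square images are not sheared.
    c, s = math.cos(angle), math.sin(angle)
    l00, l01 = c, s * ax / ay
    l10, l11 = -s * ay / ax, c
    # Translation that keeps the center fixed: t = center - L @ center.
    t0 = ncx - (l00 * ncx + l01 * ncy)
    t1 = ncy - (l10 * ncx + l11 * ncy)
    return [[l00, l01, t0], [l10, l11, t1]]
```

With PyTorch, the nested list could be wrapped into the `(N, 2, 3)` batch that `F.affine_grid` expects via `torch.tensor([theta], dtype=img.dtype, device=img.device)`, then passed to `F.affine_grid(theta_t, img.size(), align_corners=False)` followed by `F.grid_sample(img, grid, align_corners=False)`. The same `align_corners` value should be used in all three places, since it changes how pixel indices map to [-1, 1].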
