Thank you, Artilind.

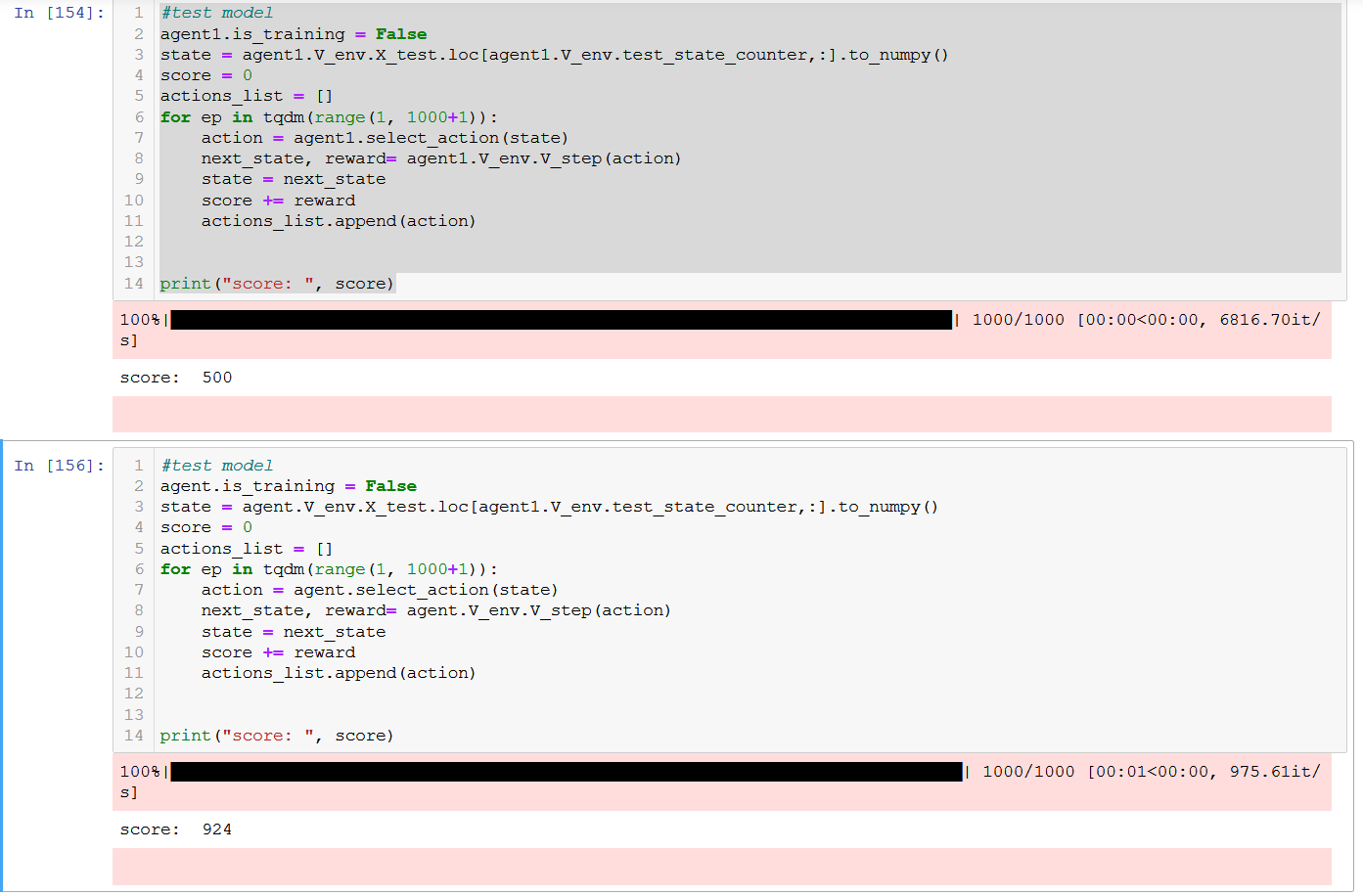

I checked the weights of both models and they are the same.

However, when I use the same code to test both models on the same 1000 samples, the original model scores 924/1000 correct answers, whereas the loaded model scores just 500/1000.

Here is the output of `agent.dqn.layers[-2].weight` followed by `agent1.dqn.layers[-2].weight`:

Parameter containing:

tensor([[-2.7366e-01, 1.9941e-01, -7.6141e-01, 1.4397e-01, -1.5718e-01,

-3.5489e-01, -4.0804e-01, -1.0615e-02, -1.5499e-02, 2.7892e-02,

-2.9361e-01, 3.0443e-01, -1.7186e-01, -2.7947e-01, -2.0401e-01,

2.3119e-02, 5.1811e-02, -2.5943e-01, -3.1975e-02, -2.3041e-02,

-2.8438e-02, -2.2843e-01, 4.4978e-02, 2.2255e-02, 4.0609e-02,

2.5109e-01, 1.1982e-01, 1.8197e-02, 7.7862e-01, -5.5143e-01,

-6.0077e-02, -7.6426e-01, -3.8195e-01, -7.4313e-01, -1.1739e-01,

5.3178e-02, 1.6637e-01, -9.6227e-01, 1.4221e-02, -1.8411e-01,

-1.0946e-01, -3.8608e-01, 5.6576e-02, 5.7527e-02, -9.9113e-02,

-2.0707e-01, -1.5844e-01, -5.2088e-03, -3.3738e-01, 3.4554e-02,

1.0015e-02, 1.5227e-02, -6.7776e-01, -3.7915e-02, -7.8485e-02,

4.5949e-02, -2.5784e-02, -3.3047e-01, 6.4348e-02, -1.5789e-01,

-1.2264e-02, -1.7147e-01, 7.6571e-02, -3.0366e-02],

[-2.1560e-01, -3.1336e-01, -2.1208e-02, 2.4466e-02, -1.1601e-01,

7.1008e-02, -8.4347e-02, -2.1182e-02, -4.9379e-01, -4.9651e-01,

6.4734e-03, -4.3814e-02, -4.6654e-01, 6.1255e-03, -1.4655e-02,

-1.8655e-01, -9.6411e-01, 1.1566e-02, 4.6149e-02, -1.1270e-01,

-3.3037e-02, -4.3250e-02, -2.2556e-01, -3.3957e-01, 4.7250e-03,

-4.1394e-01, -6.5579e-01, -4.6811e-02, -8.6857e-02, -5.4740e-01,

-9.8245e-01, 2.1111e-01, 1.5543e-01, -3.3294e-02, 2.6496e-01,

-4.8543e-01, -3.9470e-01, -4.6092e-02, -2.2453e-01, -4.8163e-01,

-1.9894e-01, 4.2989e-02, 3.3548e-02, 3.9954e-01, -3.8888e-02,

-4.7950e-01, -5.6876e-01, -4.5290e-01, -4.2524e-02, -5.1943e-02,

-4.1490e-01, -1.6104e-02, 6.7158e-04, -4.7883e-01, -1.5516e-01,

-4.5682e-02, -6.8973e-02, 6.2119e-02, -4.4249e-01, -8.8247e-01,

-5.6976e-02, -1.2550e-01, -5.3172e-01, -5.2279e-02]], device='cuda:0')

Parameter containing:

tensor([[-2.7366e-01, 1.9941e-01, -7.6141e-01, 1.4397e-01, -1.5718e-01,

-3.5489e-01, -4.0804e-01, -1.0615e-02, -1.5499e-02, 2.7892e-02,

-2.9361e-01, 3.0443e-01, -1.7186e-01, -2.7947e-01, -2.0401e-01,

2.3119e-02, 5.1811e-02, -2.5943e-01, -3.1975e-02, -2.3041e-02,

-2.8438e-02, -2.2843e-01, 4.4978e-02, 2.2255e-02, 4.0609e-02,

2.5109e-01, 1.1982e-01, 1.8197e-02, 7.7862e-01, -5.5143e-01,

-6.0077e-02, -7.6426e-01, -3.8195e-01, -7.4313e-01, -1.1739e-01,

5.3178e-02, 1.6637e-01, -9.6227e-01, 1.4221e-02, -1.8411e-01,

-1.0946e-01, -3.8608e-01, 5.6576e-02, 5.7527e-02, -9.9113e-02,

-2.0707e-01, -1.5844e-01, -5.2088e-03, -3.3738e-01, 3.4554e-02,

1.0015e-02, 1.5227e-02, -6.7776e-01, -3.7915e-02, -7.8485e-02,

4.5949e-02, -2.5784e-02, -3.3047e-01, 6.4348e-02, -1.5789e-01,

-1.2264e-02, -1.7147e-01, 7.6571e-02, -3.0366e-02],

[-2.1560e-01, -3.1336e-01, -2.1208e-02, 2.4466e-02, -1.1601e-01,

7.1008e-02, -8.4347e-02, -2.1182e-02, -4.9379e-01, -4.9651e-01,

6.4734e-03, -4.3814e-02, -4.6654e-01, 6.1255e-03, -1.4655e-02,

-1.8655e-01, -9.6411e-01, 1.1566e-02, 4.6149e-02, -1.1270e-01,

-3.3037e-02, -4.3250e-02, -2.2556e-01, -3.3957e-01, 4.7250e-03,

-4.1394e-01, -6.5579e-01, -4.6811e-02, -8.6857e-02, -5.4740e-01,

-9.8245e-01, 2.1111e-01, 1.5543e-01, -3.3294e-02, 2.6496e-01,

-4.8543e-01, -3.9470e-01, -4.6092e-02, -2.2453e-01, -4.8163e-01,

-1.9894e-01, 4.2989e-02, 3.3548e-02, 3.9954e-01, -3.8888e-02,

-4.7950e-01, -5.6876e-01, -4.5290e-01, -4.2524e-02, -5.1943e-02,

-4.1490e-01, -1.6104e-02, 6.7158e-04, -4.7883e-01, -1.5516e-01,

-4.5682e-02, -6.8973e-02, 6.2119e-02, -4.4249e-01, -8.8247e-01,

-5.6976e-02, -1.2550e-01, -5.3172e-01, -5.2279e-02]], device='cuda:0')
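
Printing one layer only shows that this particular weight matrix matches. A fuller check would compare every entry of both `state_dict()`s, which also covers buffers such as BatchNorm running statistics, and would confirm that both networks are in the same train/eval mode. The sketch below assumes that `agent.dqn` and `agent1.dqn` are ordinary `nn.Module` instances; the helper names `diff_state_dicts` and `modes_match` are hypothetical and not part of PyTorch.

```python
import torch
import torch.nn as nn


def diff_state_dicts(model_a: nn.Module, model_b: nn.Module) -> list[str]:
    """Return the names of state_dict entries that differ between two models.

    Hypothetical helper: covers parameters AND buffers (e.g. BatchNorm
    running_mean / running_var), not only a single layer's weight.
    """
    sd_a, sd_b = model_a.state_dict(), model_b.state_dict()
    # Entries that exist in only one of the two models count as differences.
    mismatched = sorted(set(sd_a) ^ set(sd_b))
    for name in sorted(sd_a.keys() & sd_b.keys()):
        # Move to CPU so models on different devices can still be compared.
        a, b = sd_a[name].cpu(), sd_b[name].cpu()
        if a.shape != b.shape or a.dtype != b.dtype or not torch.equal(a, b):
            mismatched.append(name)
    return mismatched


def modes_match(model_a: nn.Module, model_b: nn.Module) -> bool:
    """True if both models are in the same mode (both train or both eval)."""
    return model_a.training == model_b.training
```

With the objects from this post, the check would be `diff_state_dicts(agent.dqn, agent1.dqn)` and `modes_match(agent.dqn, agent1.dqn)`. An empty list together with `True` would mean the two networks themselves are equivalent, so the difference in score would have to come from somewhere else in the test code.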