Hey guys,

I was implementing a GAN from an online repository, following this GitHub repo: sxhxliang/BigGAN-pytorch (a PyTorch implementation of *Large Scale GAN Training for High Fidelity Natural Image Synthesis*, i.e. BigGAN).

The test file is missing, so I wrote it myself. The code runs without errors, but the output doesn’t look like it was generated from the learned weights; it looks as if it came from the initial random noise. The code is as follows:

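```python
import os

import torch
from torchvision.utils import save_image

# Generator and denorm are assumed to come from the BigGAN-pytorch repo;
# z_dim, n_class, chn, batch_size, device and path are set earlier in the script.
model = Generator(z_dim, n_class, chn)

checkpoint = torch.load('./model/faces/241280_G.pth', map_location=str(device))
# strict=False does not raise on missing or unexpected keys; unmatched keys are skipped
model.load_state_dict(checkpoint, strict=False)
model.to(device)
model.float()
model.eval()

def label_sampel():
    # Draw a random class index per sample and build its one-hot encoding
    label = torch.LongTensor(batch_size, 1).random_() % n_class
    one_hot = torch.zeros(batch_size, n_class).scatter_(1, label, 1)
    print(device)
    return label.squeeze(1).to(device), one_hot.to(device)

z = torch.randn(batch_size, z_dim).to(device)
z_class, z_class_one_hot = label_sampel()
fake_images = model(z, z_class_one_hot)
save_image(denorm(fake_images.data), os.path.join(path, '1_generated.png'))
```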

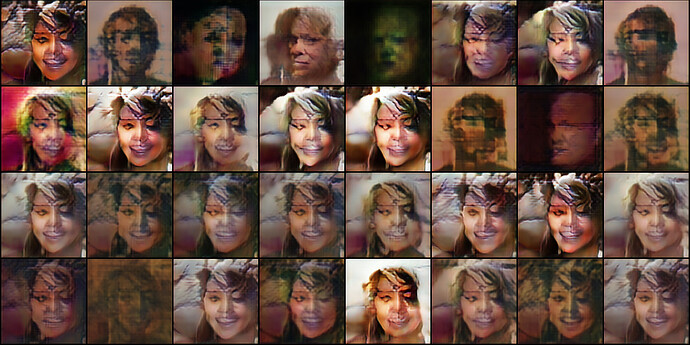

These are face image samples generated during the training phase.

This one is the image I tried to generate from the trained generator by loading the generator’s weights file.

Can someone help with this?

Thanks in advance.

Did the training run generate the first picture?

If so, could you store that random input tensor for debugging and reuse it in the test case?
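A minimal sketch of that debugging step, assuming the noise `z` and the one-hot labels are available as tensors at the point where the training script saves its sample image. The helper names `save_debug_inputs` and `load_debug_inputs` are hypothetical, not part of the repo.

```python
import torch

def save_debug_inputs(z, one_hot, path):
    """Save the exact noise and labels used for a training-time sample (hypothetical helper)."""
    # detach + cpu so the file loads anywhere, regardless of the training device
    torch.save({"z": z.detach().cpu(), "one_hot": one_hot.detach().cpu()}, path)

def load_debug_inputs(path, device):
    """Reload the saved noise and labels onto the target device (hypothetical helper)."""
    data = torch.load(path, map_location=device)
    return data["z"].to(device), data["one_hot"].to(device)
```

Calling `save_debug_inputs` right where the training sample is generated, then feeding the reloaded tensors to the loaded generator, removes input randomness from the comparison: any remaining difference must come from the weights or the model mode.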

Hey!

Yeah, the first picture is a sample generated during training with batch size 32.

As you suggested, I reran the training and stored the input, i.e. the random noise corresponding to that iteration.

It worked when I redid everything.

Then I found that the weight file was actually being loaded, and the inputs were fine too. But when the input went through the model built with those weights, it didn’t generate a face-like image, so I figured something was wrong with the weight file.

What I did before was copy the weight file from a locked folder (I had to use sudo to modify it) to another, normal folder, and load the weight file from there. I guess something got lost when I moved the weight file. It worked when I kept the file in the original folder created during initialization.
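One way to test the "something got lost while copying" theory is to compare checksums of the original and the copied checkpoint. This is a sketch using only the standard library; the function names are hypothetical, and reading the file in the locked folder may itself need elevated permissions.

```python
import hashlib

def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, reading it in 1 MiB chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps reading until f.read returns b"" (end of file)
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def same_file_contents(path_a, path_b):
    """True if both files contain identical bytes, compared via SHA-256."""
    return sha256_of(path_a) == sha256_of(path_b)
```

If `same_file_contents(original_path, copied_path)` returns `True` (both paths are placeholders here), the copy is byte-for-byte identical and the problem lies elsewhere.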

I also removed model.eval(), but set requires_grad = False for the input.
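Note that `model.eval()` and `requires_grad = False` do different jobs: `eval()` switches layers such as BatchNorm and Dropout to inference behaviour, while gradient settings only affect autograd. The sketch below, assuming the generator contains BatchNorm layers (as BigGAN-style generators typically do), shows that the same input gives different BatchNorm outputs in the two modes; `torch.no_grad()` is the usual way to skip gradient tracking at test time.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
bn = nn.BatchNorm1d(4)
x = torch.randn(8, 4) * 3 + 5  # batch far from zero mean / unit variance

bn.train()
out_train = bn(x)  # normalized with this batch's own statistics; also updates running stats

bn.eval()
with torch.no_grad():  # inference only: no autograd graph is recorded
    out_eval = bn(x)  # normalized with the running mean/variance instead
```

Dropping `eval()` therefore changes the generator's output even if the weights are identical, so it is worth keeping `eval()` and wrapping generation in `torch.no_grad()`.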

Hey, I think I was wrong.

Actually, when I did it again, the training had finished, whereas the first time the training was interrupted and did not complete.

I still don’t know exactly why that is. The code used torch.nn.DataParallel for both the Generator and the Discriminator during training, and I loaded the weights onto the GPU for testing.
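One likely explanation, assuming the training script saved `G.state_dict()` from the `DataParallel` wrapper: `nn.DataParallel` stores the wrapped network as `.module`, so every key in the saved state dict starts with `module.`. Loading that into a bare `Generator` with `strict=False` matches none of the keys and silently leaves the random initialization in place. A sketch of stripping the prefix (the helper name is hypothetical):

```python
def strip_dataparallel_prefix(state_dict, prefix="module."):
    """Return a copy of state_dict with the DataParallel key prefix removed."""
    return {
        (key[len(prefix):] if key.startswith(prefix) else key): value
        for key, value in state_dict.items()
    }
```

Usage in the test script would be `model.load_state_dict(strip_dataparallel_prefix(checkpoint))`, ideally with the default `strict=True` so any remaining mismatch raises an error. Alternatively, saving `G.module.state_dict()` during training avoids the prefix altogether.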

How do I check whether the weights are actually loaded?
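The simplest check is to load with the default `strict=True`, which raises an error listing any mismatched keys. With `strict=False`, `load_state_dict` returns an object with `missing_keys` and `unexpected_keys` fields that can be inspected. A sketch, where `report_load` is a hypothetical helper that could replace the `model.load_state_dict(checkpoint, strict=False)` call:

```python
import torch.nn as nn

def report_load(model: nn.Module, state_dict) -> bool:
    """Load non-strictly, print key mismatches, and return True only on a full match."""
    result = model.load_state_dict(state_dict, strict=False)
    if result.missing_keys:
        # model parameters/buffers that the checkpoint did not provide (left at their init values)
        print(f"{len(result.missing_keys)} missing, e.g. {result.missing_keys[:3]}")
    if result.unexpected_keys:
        # checkpoint entries that matched no model parameter/buffer (ignored)
        print(f"{len(result.unexpected_keys)} unexpected, e.g. {result.unexpected_keys[:3]}")
    return not result.missing_keys and not result.unexpected_keys
```

If every key shows up as missing on one side and unexpected on the other (for example, checkpoint keys all starting with `module.`), the weights were not loaded at all.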