Training and validation both produced normal output. After training, I called torch::save() to write the model to a .pt file, then called torch::load() to read the model back from that file and make predictions. At that point the predicted values become NaN. I have checked the network structure and its data type (Double), as well as the input data and its data type (Double); they are all correct. I have been stuck on this problem for a few days, so please help.

Network

    struct NetImpl : torch::nn::Module {
        NetImpl(int in_feature, int out_feature)
            : fc1(in_feature, 10),
              fc2(10, 100),
              fc3(100, 500),
              fc4(500, 250),
              fc5(250, 125),
              fc6(125, 70),
              fc7(70, out_feature)
        {
            register_module("fc1", fc1);
            register_module("fc2", fc2);
            register_module("fc3", fc3);
            register_module("fc4", fc4);
            register_module("fc5", fc5);
            register_module("fc6", fc6);
            register_module("fc7", fc7);
        }

        torch::Tensor forward(torch::Tensor x) {
            x = torch::leaky_relu(fc1->forward(x));
            x = torch::leaky_relu(fc2->forward(x));
            x = torch::leaky_relu(fc3->forward(x));
            x = torch::leaky_relu(fc4->forward(x));
            x = torch::leaky_relu(fc5->forward(x));
            x = torch::leaky_relu(fc6->forward(x));
            x = fc7->forward(x);
            return x;
        }

        torch::nn::Linear fc1, fc2, fc3, fc4, fc5, fc6, fc7;
    };

    TORCH_MODULE(Net);

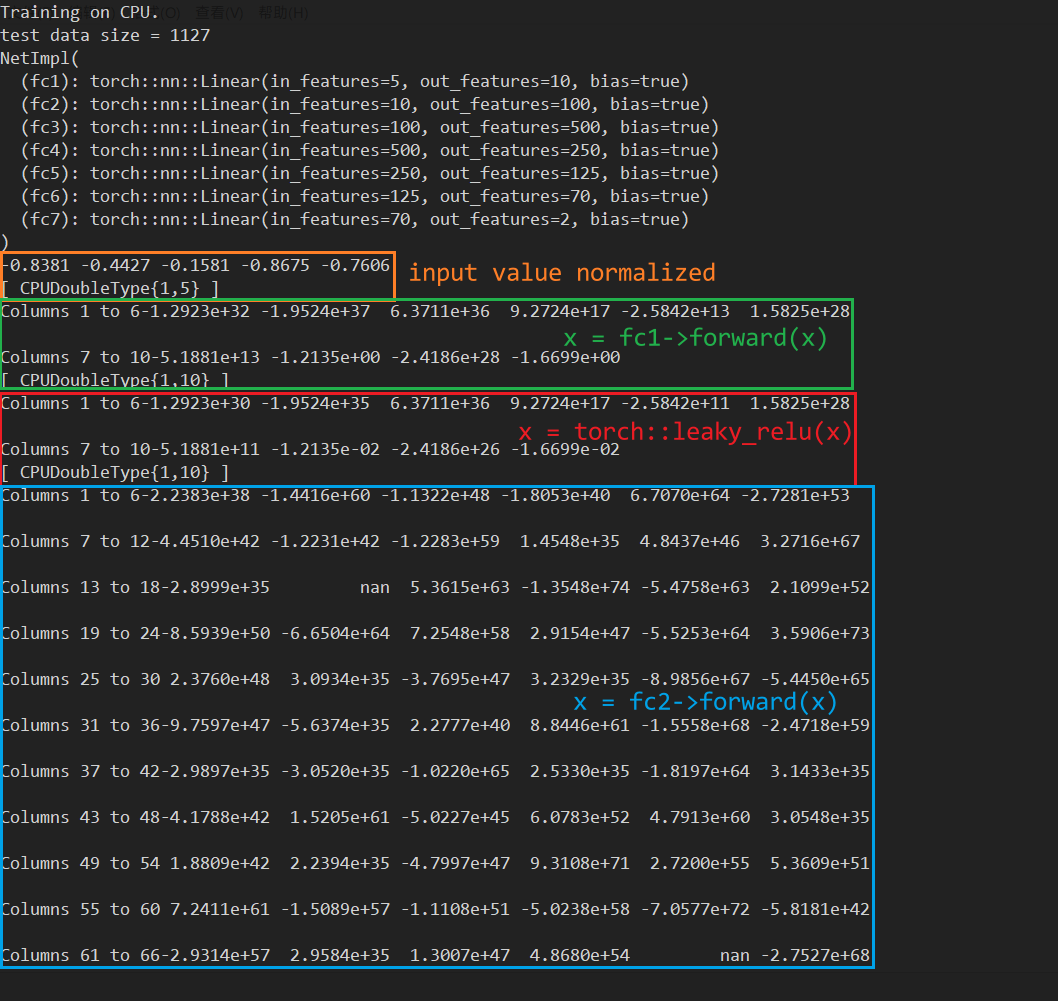

Saving and then loading the model, then printing it, gives the output below

    torch::save(model, model_path);
    torch::load(model, model_path);
    std::cout << model << std::endl;

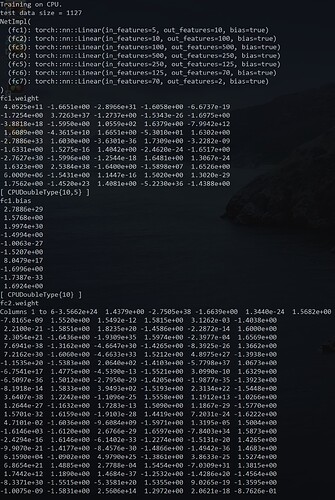

    NetImpl(
      (fc1): torch::nn::Linear(in_features=5, out_features=10, bias=true)
      (fc2): torch::nn::Linear(in_features=10, out_features=100, bias=true)
      (fc3): torch::nn::Linear(in_features=100, out_features=500, bias=true)
      (fc4): torch::nn::Linear(in_features=500, out_features=250, bias=true)
      (fc5): torch::nn::Linear(in_features=250, out_features=125, bias=true)
      (fc6): torch::nn::Linear(in_features=125, out_features=70, bias=true)
      (fc7): torch::nn::Linear(in_features=70, out_features=2, bias=true)
    )

Predict Method

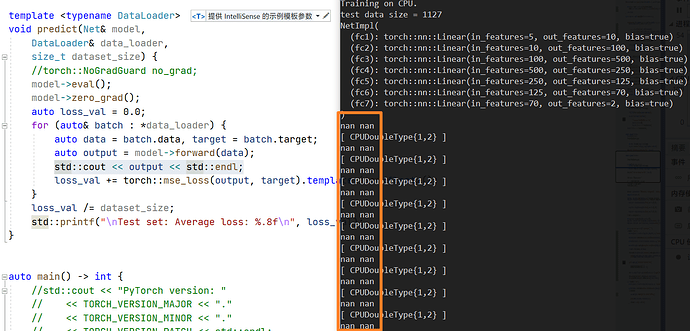

    template <typename DataLoader>
    void predict(Net& model,
                 DataLoader& data_loader,
                 size_t dataset_size) {
        //torch::NoGradGuard no_grad;
        model->eval();
        model->zero_grad();
        auto loss_val = 0.0;
        for (auto& batch : *data_loader) {
            auto data = batch.data, target = batch.target;
            auto output = model->forward(data);
            std::cout << output << std::endl;
            loss_val += torch::mse_loss(output, target).template item<float>();
        }
        loss_val /= dataset_size;
        std::printf("\nTest set: Average loss: %.8f\n", loss_val);
    }

Result

Model File

If necessary, I will upload it to Google Drive.

Environment

libtorch stable 1.9.1

libtorch preview(nightly)

Microsoft Visual Studio 2019

Windows 10

Any suggestions and help are greatly appreciated!

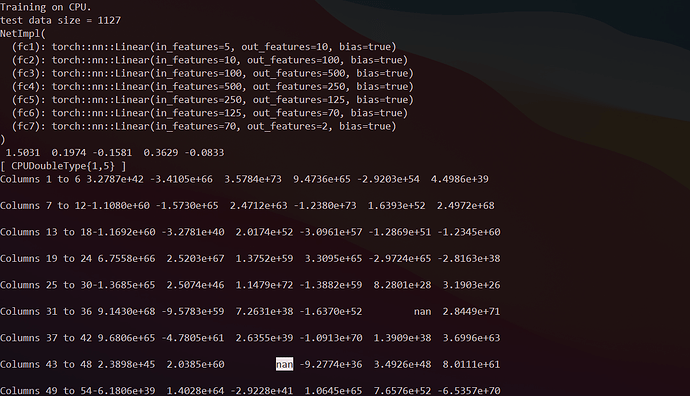

UPDATE

After the tensor has passed through only the first few layers of the forward pass, the output already contains several NaN values.

    x = torch::leaky_relu(fc2->forward(x));
    std::cout << x << std::endl;

If the tensor continues through the remaining layers, all subsequent outputs are NaN. I think this may be the cause of the problem, but how should it be solved?
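The spread from "several NaNs" to "all NaN" is what a fully connected layer is expected to do: every output is a weighted sum over all inputs, so one NaN input contaminates every output, and leaky ReLU passes NaN through unchanged. The sketch below illustrates this with plain `std::vector` arithmetic rather than libtorch; `dense_leaky_relu` and `count_nan` are hypothetical helper names, and the slope 0.01 is the default of `torch::leaky_relu`.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Leaky ReLU with negative slope 0.01. A NaN input fails the comparison
// x > 0.0, and negative_slope * NaN is NaN, so NaN is returned unchanged.
double leaky_relu(double x, double negative_slope = 0.01) {
    return x > 0.0 ? x : negative_slope * x;
}

// Computes leaky_relu(W x + b) for one input vector.
// weight has one row per output, one column per input.
std::vector<double> dense_leaky_relu(const std::vector<std::vector<double>>& weight,
                                     const std::vector<double>& bias,
                                     const std::vector<double>& input) {
    std::vector<double> out(weight.size());
    for (std::size_t i = 0; i < weight.size(); ++i) {
        double sum = bias[i];
        for (std::size_t j = 0; j < input.size(); ++j) {
            // Any NaN in input[j] makes the product NaN, even for a zero weight.
            sum += weight[i][j] * input[j];
        }
        out[i] = leaky_relu(sum);
    }
    return out;
}

// Counts the NaN entries in a vector.
std::size_t count_nan(const std::vector<double>& v) {
    std::size_t n = 0;
    for (double x : v) {
        if (std::isnan(x)) {
            ++n;
        }
    }
    return n;
}
```

This suggests looking at the earliest layer whose output contains a NaN (here, fc1 or fc2 and their inputs), since everything after that point only propagates it.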